I want to generate a random matrix of shape (1e7, 800). But I find numpy.random.rand() becomes very slow at this scale. Is there a quicker way?

CodePudding user response:

A simple way to do that is to write a multi-threaded implementation using Numba:

    import numba as nb
    import numpy as np

    @nb.njit('float64[:,:](int_, int_)', parallel=True)
    def genRandom(n, m):
        res = np.empty((n, m))

        # Parallel loop: iterations over rows are split across threads
        for i in nb.prange(n):
            for j in range(m):
                res[i, j] = np.random.rand()

        return res

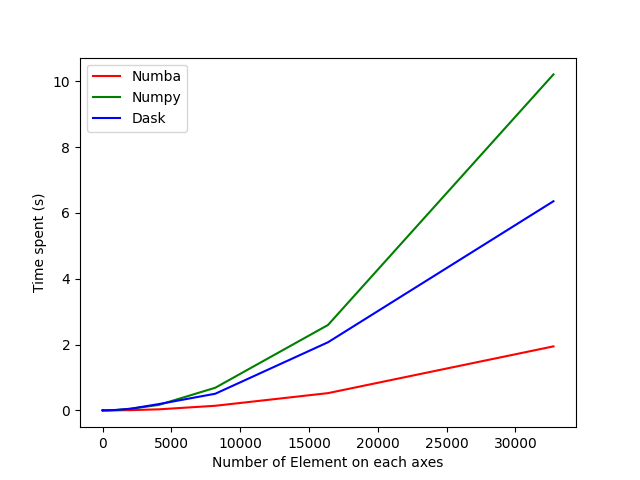

This is 6.4 times faster than np.random.rand() on my 6-core machine.

Note that using 32-bit floats may speed up the computation a bit, at the cost of lower precision.
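To make the 32-bit remark concrete, here is a minimal sketch that uses NumPy's `Generator` API (a different route from the Numba function above) to draw `float32` values directly. The helper name `random_float32` and the `seed` parameter are illustrative assumptions, and the small shape is only there so the snippet runs quickly. At the full size of 1e7 x 800, `float64` needs about 64 GB and `float32` about 32 GB.

```python
import numpy as np


def random_float32(n_rows, n_cols, seed=None):
    """Return an (n_rows, n_cols) array of uniform float32 samples in [0, 1)."""
    rng = np.random.default_rng(seed)
    # dtype=np.float32 makes the generator produce 32-bit values directly,
    # so no temporary float64 array is allocated and then cast.
    return rng.random((n_rows, n_cols), dtype=np.float32)


# Small demo shape (hypothetical); the question's shape is (10**7, 800).
x = random_float32(1000, 800, seed=0)
print(x.dtype, x.shape, x.nbytes)

# Memory needed at the full size, in bytes.
full_float64 = 10**7 * 800 * 8  # 64 GB
full_float32 = 10**7 * 800 * 4  # 32 GB
print(full_float64, full_float32)
```

Whether this is actually faster than the `float64` version depends on the machine, so it is worth timing both before committing to one.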

CodePudding user response:

Numba is a good option. Another option that might work well is dask.array, which creates lazy blocks of NumPy arrays and performs the computation on those blocks in parallel. On my machine this gives a factor-of-2 speedup (measured on a 1e6 x 1e3 matrix, since I don't have enough memory for the full size).

    import dask.array as da

    rows = 10**6
    cols = 10**3

    x = da.random.random(size=(rows, cols)).compute()  # takes about 5 seconds

    # For comparison, plain NumPy:
    # import numpy as np
    # x = np.random.rand(rows, cols)  # takes about 10 seconds

Note that .compute() at the end only brings the computed array into memory. In general, you can keep working with the lazy dask array and let dask parallelize the subsequent calculations as well (which can also scale beyond a single machine), see
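As a rough illustration of staying lazy, the sketch below assumes the end goal is a reduction (column means here) rather than the full matrix. The smaller shape and the chunk size of 1000 rows are illustrative assumptions, not tuned values.

```python
import dask.array as da

# Smaller demo shape (hypothetical) so the snippet runs quickly.
rows, cols = 10**4, 10**3

# Lazy array split into blocks of 1000 rows; nothing is generated yet.
x = da.random.random(size=(rows, cols), chunks=(1000, cols))

# Still lazy: this only extends the task graph.
col_means = x.mean(axis=0)

# Blocks are generated and reduced in parallel; only the
# (cols,)-shaped result is materialized as a NumPy array.
result = col_means.compute()
print(result.shape)
```

Because only the reduced result is brought into memory, the full random matrix never has to exist at once, which is what makes this approach usable when the matrix itself would not fit in RAM.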