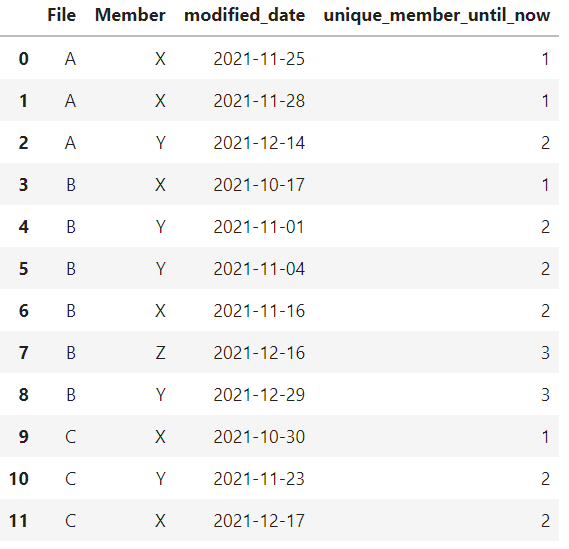

I am trying to build a scalable method to calculate, for each row, the number of unique members that have modified a given file up to and including that row's modified_date. The unique_member_until_now column contains the expected result for each file.

import pandas as pd
from pandas import Timestamp

# Example dataset
df = pd.DataFrame({'File': ['A', 'A', 'A', 'B', 'B', 'B', 'B', 'B', 'B', 'C', 'C', 'C'],
                   'Member': ['X', 'X', 'Y', 'X', 'Y', 'Y', 'X', 'Z', 'Y', 'X', 'Y', 'X'],
                   'modified_date': [Timestamp('2021-11-25 00:00:00'),
                                     Timestamp('2021-11-28 00:00:00'),
                                     Timestamp('2021-12-14 00:00:00'),
                                     Timestamp('2021-10-17 00:00:00'),
                                     Timestamp('2021-11-01 00:00:00'),
                                     Timestamp('2021-11-04 00:00:00'),
                                     Timestamp('2021-11-16 00:00:00'),
                                     Timestamp('2021-12-16 00:00:00'),
                                     Timestamp('2021-12-29 00:00:00'),
                                     Timestamp('2021-10-30 00:00:00'),
                                     Timestamp('2021-11-23 00:00:00'),
                                     Timestamp('2021-12-17 00:00:00')],
                   'unique_member_until_now': [1, 1, 2, 1, 2, 2, 2, 3, 3, 1, 2, 2]})

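df.groupby("File")["Member"].transform('nunique') of course doesn't give the intended result, because it repeats the total number of unique members per file on every row instead of a running count.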

The current approach is to iterate over every group and each record in the group, but I am sure that is grossly inefficient and slow when dealing with millions of rows.
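For reference, the row-by-row approach described above might look roughly like the sketch below. This is only an assumption about what such a loop does, not the asker's actual code: the function name `running_unique_members_loop` and the small `small` frame are hypothetical, and the rows are assumed to be already sorted by modified_date within each file.

```python
import pandas as pd


def running_unique_members_loop(df: pd.DataFrame) -> list:
    """Row-by-row baseline: distinct members seen so far, per file.

    Assumes the rows are already in chronological order within each file.
    """
    seen = {}    # maps each file to the set of members seen so far
    counts = []
    for file, member in zip(df["File"], df["Member"]):
        members = seen.setdefault(file, set())
        members.add(member)
        counts.append(len(members))
    return counts


# Hypothetical miniature of the example dataset
small = pd.DataFrame({
    "File":   ["A", "A", "A", "B", "B"],
    "Member": ["X", "X", "Y", "X", "Y"],
})
print(running_unique_members_loop(small))  # [1, 1, 2, 1, 2]
```

A loop like this is handy as a correctness check for a vectorized solution, even if it is too slow for the full data.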

CodePudding user response:

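An efficient method is to flag the first occurrence of each (File, Member) pair with duplicated (inverted with ~), then group by File and take the cumulative sum. This relies on the rows being sorted by modified_date within each file, as they are in the example: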

(~df[['File', 'Member']].duplicated()).groupby(df['File']).cumsum()

Saving as column:

df['unique_member_until_now'] = (~df[['File', 'Member']].duplicated()).groupby(df['File']).cumsum()

Output:

   File Member modified_date  unique_member_until_now
0     A      X    2021-11-25                        1
1     A      X    2021-11-28                        1
2     A      Y    2021-12-14                        2
3     B      X    2021-10-17                        1
4     B      Y    2021-11-01                        2
5     B      Y    2021-11-04                        2
6     B      X    2021-11-16                        2
7     B      Z    2021-12-16                        3
8     B      Y    2021-12-29                        3
9     C      X    2021-10-30                        1
10    C      Y    2021-11-23                        2
11    C      X    2021-12-17                        2
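The duplicated/cumsum approach counts in row order, so it only matches "up to and including modified_date" when the rows are chronological within each file. A minimal sketch of a variant that sorts first and then writes the result back to the original rows is shown below. The helper name `add_running_unique`, its parameters, and the `shuffled` frame are hypothetical, and the sketch assumes the index is unique so that assignment can align on it.

```python
import pandas as pd


def add_running_unique(df, group="File", member="Member", date="modified_date"):
    """Return a copy of df with a running count of distinct members per group."""
    # Put each group's rows in chronological order; the original index is kept.
    ordered = df.sort_values([group, date])
    # True on the first (earliest) row of every (group, member) pair.
    first_seen = ~ordered.duplicated(subset=[group, member])
    # Running number of first occurrences within each group.
    running = first_seen.astype(int).groupby(ordered[group]).cumsum()
    out = df.copy()
    # Assignment aligns on the index, so values land on the original rows.
    out["unique_member_until_now"] = running
    return out


# Hypothetical unsorted input
shuffled = pd.DataFrame({
    "File":   ["A", "B", "A", "A", "B"],
    "Member": ["Y", "X", "X", "X", "Y"],
    "modified_date": pd.to_datetime(
        ["2021-12-14", "2021-10-17", "2021-11-25", "2021-11-28", "2021-11-01"]
    ),
})
result = add_running_unique(shuffled)
print(result["unique_member_until_now"].tolist())  # [2, 1, 1, 1, 2]
```

If the data is already sorted, the sort step can be skipped and the one-liner above is enough.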

CodePudding user response:

You can group by File, then within each group take the cumulative sum of the inverted (~) duplicated mask on Member:

df['unique_member_until_now'] = df.groupby('File').apply(lambda g: (~g['Member'].duplicated()).cumsum()).droplevel(0)

Output:

>>> df
   File Member modified_date  unique_member_until_now
0     A      X    2021-11-25                        1
1     A      X    2021-11-28                        1
2     A      Y    2021-12-14                        2
3     B      X    2021-10-17                        1
4     B      Y    2021-11-01                        2
5     B      Y    2021-11-04                        2
6     B      X    2021-11-16                        2
7     B      Z    2021-12-16                        3
8     B      Y    2021-12-29                        3
9     C      X    2021-10-30                        1
10    C      Y    2021-11-23                        2
11    C      X    2021-12-17                        2
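The same per-group idea can also be written with transform, which returns a result aligned to the original index and so needs no droplevel. This is a sketch of an alternative, not part of the answer above; the small frame is a hypothetical subset of the example data, assumed to be sorted by date within each file.

```python
import pandas as pd

# Hypothetical subset of the example data, already in chronological order
df = pd.DataFrame({
    "File":   ["A", "A", "A", "B", "B", "B"],
    "Member": ["X", "X", "Y", "X", "Y", "Y"],
})

# For each file, count how many distinct members have appeared so far.
df["unique_member_until_now"] = (
    df.groupby("File")["Member"]
      .transform(lambda s: (~s.duplicated()).cumsum())
)
print(df["unique_member_until_now"].tolist())  # [1, 1, 2, 1, 2, 2]
```

Both per-group variants call a Python function once per file, so with a very large number of files the fully vectorized duplicated/cumsum version is likely to be faster.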