I am trying to load a CSV file from S3 into DynamoDB through a Lambda function. At first, I uploaded my .csv file to S3 manually. When uploading the file manually, I know its name, so I put that name as the key in the test event, and it works fine.

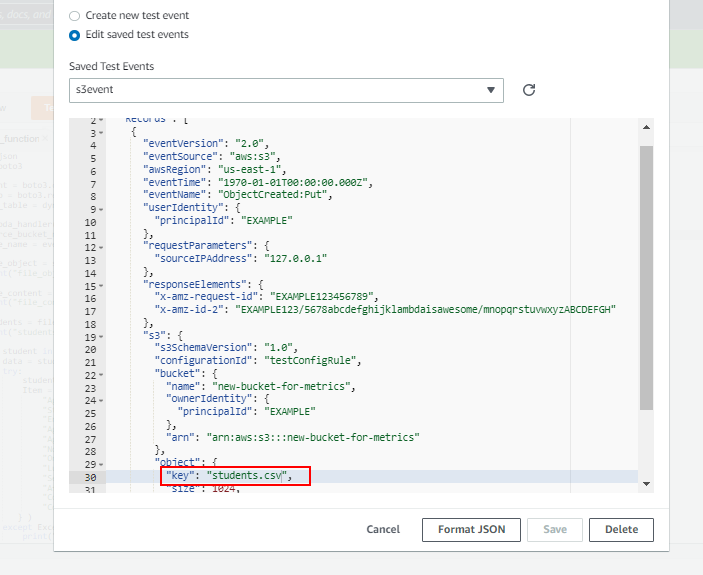

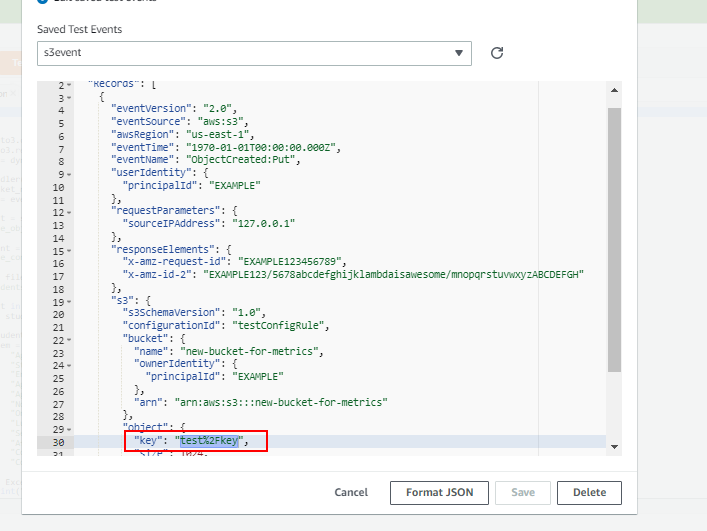

I want to automate this, because my .csv files are generated in S3 automatically and I don't know what the name of the next file will be. Someone suggested that I create a trigger in S3 that invokes my Lambda function every time a file is generated. The only issue I am dealing with is what to put in the test event in place of "key", where we are supposed to put the name of the file whose data we want to fetch from S3.

I don't have a file name now. The Lambda code is as follows:

    import json

    import boto3

    s3_client = boto3.client("s3")
    dynamodb = boto3.resource("dynamodb")
    student_table = dynamodb.Table('AgentMetrics')

    def lambda_handler(event, context):
        # Bucket name and object key are read from the first record of the event
        source_bucket_name = event['Records'][0]['s3']['bucket']['name']
        file_name = event['Records'][0]['s3']['object']['key']

        file_object = s3_client.get_object(Bucket=source_bucket_name, Key=file_name)
        print("file_object :", file_object)

        file_content = file_object['Body'].read().decode("utf-8")
        print("file_content :", file_content)

        # One CSV row per line
        students = file_content.split("\n")
        print("students :", students)

        for student in students:
            data = student.split(",")
            try:
                student_table.put_item(
                    Item = {
                        "Agent" : data[0],
                        "StartInterval" : data[1],
                        "EndInterval" : data[2],
                        "Agent idle time" : data[3],
                        "Agent on contact time" : data[4],
                        "Nonproductive time" : data[5],
                        "Online time" : data[6],
                        "Lunch Break time" : data[7],
                        "Service level 120 seconds" : data[8],
                        "After contact work time" : data[9],
                        "Contacts handled" : data[10],
                        "Contacts queued" : data[11]
                    }
                )
            except Exception as e:
                # Any failure ends up here, including the IndexError raised by
                # a blank trailing line and genuine DynamoDB errors
                print("File Completed")

Kindly help me here, I am getting frustrated because of this issue. I would really appreciate any help, thanks.

CodePudding user response:

As suggested in your question, you have to add a trigger to the S3 bucket for whichever event type you need to track, such as PUT, POST, or DELETE. More details are here: https://docs.aws.amazon.com/lambda/latest/dg/with-s3-example.html

- Create the Lambda function from the blueprint option, in Python or Node.js, whichever you prefer.

- Then select the S3 bucket and the event types to listen for, such as PUT, POST, DELETE, or all of them.

- Put your code above, which writes the entries to the database, in this Lambda function.
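The trigger described in the steps above can also be attached from code instead of the console. This is a minimal sketch, assuming boto3's `put_bucket_notification_configuration` call. The helper names, the bucket name, the function ARN, and the `.csv` suffix filter are hypothetical placeholders, and the sketch assumes the Lambda function already has a resource policy that allows S3 to invoke it.

```python
def build_notification_config(lambda_arn, suffix=".csv"):
    """Build an S3 notification configuration that invokes a Lambda
    function for every newly created object whose key ends in `suffix`."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": lambda_arn,
                # Covers PUT, POST, COPY and multipart uploads
                "Events": ["s3:ObjectCreated:*"],
                "Filter": {
                    "Key": {"FilterRules": [{"Name": "suffix", "Value": suffix}]}
                },
            }
        ]
    }


def attach_trigger(bucket_name, lambda_arn):
    """Apply the configuration to a bucket (hypothetical helper)."""
    import boto3  # imported here so the builder above works without boto3

    s3_client = boto3.client("s3")
    s3_client.put_bucket_notification_configuration(
        Bucket=bucket_name,
        NotificationConfiguration=build_notification_config(lambda_arn),
    )
```

Note that this call replaces the bucket's whole notification configuration, so any existing notifications would need to be included in the dictionary as well.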

CodePudding user response:

The "Test Event" you are using is an example of a message that Amazon S3 will send to your Lambda function when a new object is created in the bucket.

When S3 triggers your AWS Lambda function, it will provide details of the object that triggered the event. Your program will then use the event supplied by S3. It will not use your Test Event.
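To make this concrete, here is a trimmed-down sketch of the event shape, containing only the fields that the handler in the question reads. The helper name `make_sample_s3_event`, the bucket name, and the key are hypothetical placeholders; a real event from S3 carries many more fields.

```python
def make_sample_s3_event(bucket_name, object_key):
    """Return a minimal S3 'object created' event for local testing."""
    return {
        "Records": [
            {
                "eventSource": "aws:s3",
                "eventName": "ObjectCreated:Put",
                "s3": {
                    "bucket": {"name": bucket_name},
                    "object": {"key": object_key},
                },
            }
        ]
    }


# Placeholder values: for a manual test, the key of any object that already
# exists in the bucket will do. A real trigger fills in the actual key.
event = make_sample_s3_event("example-bucket", "reports/agents.csv")
bucket = event["Records"][0]["s3"]["bucket"]["name"]
key = event["Records"][0]["s3"]["object"]["key"]
print(bucket, key)
```

So the file name in the test event only matters for manual test runs; it never has to match the name of a future file.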

One more thing...

It is possible that the Lambda function will be triggered with more than one object being passed via the event. Your function should be able to handle this happening. You can do this by adding a for loop:

    def lambda_handler(event, context):
        for record in event['Records']:
            source_bucket_name = record['s3']['bucket']['name']
            file_name = record['s3']['object']['key']
            ...
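A slightly fuller sketch of that loop, using only the standard library, might look like the following. The helper name `iter_s3_objects` is made up for illustration. It also URL-decodes the key, because S3 event notifications deliver object keys URL-encoded (a space arrives as `+`), which matters when generated file names contain spaces or special characters.

```python
from urllib.parse import unquote_plus


def iter_s3_objects(event):
    """Yield (bucket_name, object_key) for every record in an S3 event."""
    for record in event.get("Records", []):
        bucket_name = record["s3"]["bucket"]["name"]
        # Keys arrive URL-encoded in the notification; decode before get_object
        object_key = unquote_plus(record["s3"]["object"]["key"])
        yield bucket_name, object_key


def lambda_handler(event, context):
    processed = []
    for bucket_name, object_key in iter_s3_objects(event):
        # The S3 read and the DynamoDB writes from the question would go here
        processed.append((bucket_name, object_key))
    return processed
```

Returning the list of processed objects is only a convenience for testing; S3 ignores the return value of an asynchronously invoked function.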