I wrote a small script in which a neural network approximates a polynomial and plots the result after every epoch. The problem is that I want each new plot to overwrite the previous one, so that I can watch the fit change during training.

I searched the web and found that I need to use ion(), isinteractive(), or clear(), but I tried them all and it still does not work.

Here's my code:

    import numpy as np
    from sklearn.preprocessing import MinMaxScaler
    from sklearn.metrics import mean_squared_error
    from keras.models import Sequential
    from keras.layers import Dense
    from numpy import asarray
    from matplotlib import pyplot
    from tensorflow.keras.layers import Conv1D
    import tensorflow

    class myCallback(tensorflow.keras.callbacks.Callback):
        def on_train_begin(self, logs={}):
            pyplot.ion()

        def on_epoch_end(self, epoch, logs=None):
            yhat = model.predict(x)
            # inverse transforms
            x_plot = scale_x.inverse_transform(x)
            y_plot = scale_y.inverse_transform(y)
            yhat_plot = scale_y.inverse_transform(yhat)
            # report model error
            print('MSE: %.3f' % mean_squared_error(y_plot, yhat_plot))
            # plot x vs y
            plt = pyplot.scatter(x_plot,y_plot, label='Actual')
            # plot x vs yhat
            pyplot.scatter(x_plot,yhat_plot, label='Predicted')
            pyplot.title('Input (x) versus Output (y)')
            pyplot.xlabel('Input Variable (x)')
            pyplot.ylabel('Output Variable (y)')
            pyplot.legend()
            pyplot.show()

    # define the dataset
    x = asarray([i for i in range(-50,51)])
    y = asarray([i**3 for i in x])
    print(x.min(), x.max(), y.min(), y.max())

    # reshape arrays into rows and cols
    x = x.reshape((len(x), 1))
    y = y.reshape((len(y), 1))

    # separately scale the input and output variables
    scale_x = MinMaxScaler()
    x = scale_x.fit_transform(x)
    scale_y = MinMaxScaler()
    y = scale_y.fit_transform(y)
    print(x.min(), x.max(), y.min(), y.max())

    # design the neural network model
    model = Sequential()
    model.add(Dense(10, input_dim=1, activation='relu', kernel_initializer='he_uniform'))
    #Conv1D(32, 5, activation='relu')
    model.add(Dense(10, activation='relu', kernel_initializer='he_uniform'))
    model.add(Dense(1))
    opt = tensorflow.keras.optimizers.Adam(learning_rate=0.01)

    # define the loss function and optimization algorithm
    model.compile(loss='mse', optimizer=opt)

    # fit the model on the training dataset
    model.fit(x, y, epochs=10, batch_size=10, verbose=0, callbacks=[myCallback()])

    # make predictions for the input data

Your help would be highly appreciated!

CodePudding user response:

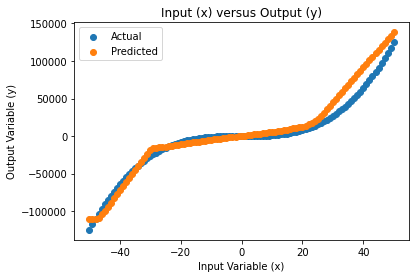

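You are getting a new plot after each epoch, but the changes are barely visible because your model is too weak. Here is an example with a larger network trained for more epochs, where the differences are significant. It also calls clear_output(wait=True) from IPython.display at the start of on_epoch_end, so it assumes an IPython/Jupyter environment: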

    import numpy as np
    from sklearn.preprocessing import MinMaxScaler
    from sklearn.metrics import mean_squared_error
    from keras.models import Sequential
    from keras.layers import Dense
    from numpy import asarray
    from matplotlib import pyplot
    import tensorflow
    from IPython.display import clear_output

    class myCallback(tensorflow.keras.callbacks.Callback):
        def on_epoch_end(self, epoch, logs=None):
            # clear the previous epoch's output before drawing the new plot
            clear_output(wait=True)
            yhat = model.predict(x)
            # inverse transforms
            x_plot = scale_x.inverse_transform(x)
            y_plot = scale_y.inverse_transform(y)
            yhat_plot = scale_y.inverse_transform(yhat)
            # report model error
            print('MSE: %.3f' % mean_squared_error(y_plot, yhat_plot))
            # plot x vs y
            plt = pyplot.scatter(x_plot,y_plot, label='Actual')
            # plot x vs yhat
            pyplot.scatter(x_plot,yhat_plot, label='Predicted')
            pyplot.title('Input (x) versus Output (y)')
            pyplot.xlabel('Input Variable (x)')
            pyplot.ylabel('Output Variable (y)')
            pyplot.legend()
            pyplot.show()

    # define the dataset
    x = asarray([i for i in range(-50,51)])
    y = asarray([i**3 for i in x])
    print(x.shape)
    print(x.min(), x.max(), y.min(), y.max())

    # reshape arrays into rows and cols
    x = x.reshape((len(x), 1))
    y = y.reshape((len(y), 1))

    # separately scale the input and output variables
    scale_x = MinMaxScaler()
    x = scale_x.fit_transform(x)
    scale_y = MinMaxScaler()
    y = scale_y.fit_transform(y)
    print(x.min(), x.max(), y.min(), y.max())

    # design the neural network model
    model = Sequential()
    model.add(Dense(64, input_dim=1, activation='relu'))
    model.add(Dense(32, activation='relu'))
    model.add(Dense(16, activation='relu'))
    model.add(Dense(1))
    opt = tensorflow.keras.optimizers.Adam(learning_rate=0.01)

    # define the loss function and optimization algorithm
    model.compile(loss='mse', optimizer=opt)

    # fit the model on the training dataset
    model.fit(x, y, epochs=50, batch_size=10, verbose=0, callbacks=[myCallback()])

    # make predictions for the input data
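
If you run this as a plain script instead of in a notebook, clear_output will most likely not help with a matplotlib window. A common alternative there is to keep a single figure and redraw its axes every epoch. Below is a rough sketch of that idea, not tested against your exact setup. The helper name redraw and the fake predictions in the demo loop are my own placeholders (assumptions, not part of the code above). In the callback you would create the figure once, then call redraw(ax, x_plot, y_plot, yhat_plot, epoch) followed by pyplot.pause(...) from on_epoch_end instead of pyplot.show().

```python
import numpy as np
import matplotlib.pyplot as plt


def redraw(ax, x, y_true, y_pred, epoch):
    """Wipe the axes and draw the current predictions in the same figure.

    Hypothetical helper for illustration; not part of Keras or matplotlib.
    """
    ax.clear()  # remove everything drawn for the previous epoch
    ax.scatter(x, y_true, label='Actual')
    ax.scatter(x, y_pred, label='Predicted')
    ax.set_title('Epoch %d' % epoch)
    ax.set_xlabel('Input Variable (x)')
    ax.set_ylabel('Output Variable (y)')
    ax.legend()
    ax.figure.canvas.draw_idle()  # request a repaint of the existing figure


if __name__ == '__main__':
    plt.ion()  # interactive mode, so showing the figure does not block
    fig, ax = plt.subplots()
    x = np.arange(-50, 51)
    y = x ** 3
    for epoch in range(1, 6):
        # stand-in for model.predict(x): a curve that approaches y over time
        y_pred = y * epoch / 5.0
        redraw(ax, x, y, y_pred, epoch)
        plt.pause(0.2)  # give the GUI event loop time to repaint the window
```

The point of the design is that the figure and axes are created once, outside the loop, and only their contents are replaced. The short pause matters with GUI backends, because without it the window may never get a chance to refresh during training.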

Here is the plot of the final epoch: