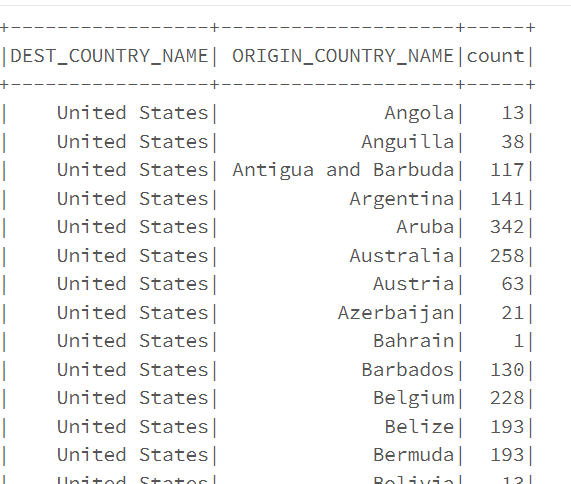

Hi there, I want to achieve something like this:

SAS SQL:

```sql
select * from flightData2015 group by DEST_COUNTRY_NAME order by count
```

This is my Spark code:

```python
flightData2015.selectExpr("*").groupBy("DEST_COUNTRY_NAME").orderBy("count").show()
```

I received this error:

```
AttributeError: 'GroupedData' object has no attribute 'orderBy'
```

I am new to PySpark. Do PySpark's `groupBy` and `orderBy` not work the same way as in SAS SQL?

I also tried `sort`:

```python
flightData2015.selectExpr("*").groupBy("DEST_COUNTRY_NAME").sort("count").show()
```

and received a similar error: `AttributeError: 'GroupedData' object has no attribute 'sort'`

Please help!

Answer:

In Spark, `groupBy` returns a `GroupedData` object, not a `DataFrame`, which is why `orderBy` and `sort` are not available on it. You normally follow `groupBy` with an aggregation, which gives you a `DataFrame` back. In this case, even though the SAS SQL query has no aggregation, you still have to define one (and drop it later if you want).

```python
(flightData2015
    .groupBy("DEST_COUNTRY_NAME")
    .count()  # the "dummy" aggregation: a per-group row count in a column named "count"
    .orderBy("count")
    .show()
)
```

Answer:

There is no need for `groupBy` if you want to keep every row. Instead, you can order by multiple columns, which places rows with the same destination country next to each other:

```python
from pyspark.sql import SparkSession
from pyspark.sql import functions as F

spark = SparkSession.builder.getOrCreate()

vals = [("United States", "Angola", 13), ("United States", "Anguilla", 38),
        ("United States", "Antigua", 20), ("United Kingdom", "Antigua", 22),
        ("United Kingdom", "Peru", 50), ("United Kingdom", "Russia", 13),
        ("Argentina", "United Kingdom", 13)]
cols = ["destination_country_name", "origin_country_name", "count"]
df = spark.createDataFrame(vals, cols)

# Sort by destination, then by count (ascending); in Databricks you can use display(...) instead of .show()
df.orderBy(["destination_country_name", "count"]).show()

# If you want count to be descending within each destination:
# df.orderBy(["destination_country_name", F.col("count").desc()]).show()
```