I want to read a large CSV (~8 GB) into my R environment, but I am having issues.

My data is a publicly available dataset:

# CREATE A TEMP FILE TO STORE THE DOWNLOADED DATA

temp <- tempfile()

# DOWNLOAD THE FILE FROM THE CMS

download.file("https://download.cms.gov/nppes/NPPES_Data_Dissemination_February_2022.zip",
              destfile = temp)

This is where I'm running into difficulty: I am unfamiliar with Linux working directories and with where temp folders are created.

When I use list.dirs() or list.files() I don't see any reference to this temp file.
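A minimal base-R sketch of where the temp file lives: `tempfile()` only returns a path inside the session's temporary directory (`tempdir()`), which on Linux is usually somewhere under `/tmp` rather than the project directory. That would explain why listing the working directory shows nothing.

```r
# tempfile() only generates a unique path; it does not create the file.
temp <- tempfile()

print(tempdir())               # the session's temporary directory
print(temp)                    # full path of the (not yet existing) temp file
print(file.exists(temp))       # FALSE until something is written to that path

# After download.file(..., destfile = temp), list the temp directory itself,
# not the working directory:
print(list.files(tempdir()))
```

Once the download has finished, `file.exists(temp)` and `file.size(temp)` are quick ways to confirm that the zip actually arrived.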

I am working in an R project and my working directory is as follows:

getwd()

[1] "/home/myName/myProjectName"

I'm able to read in the first part of the file, but my system crashes after about 4 GB.

# UNZIP THE NPI FILE

npi <- unz(temp, "npidata_pfile_20050523-20220213.csv")

Any pointers on how I can get this file unzipped, given its size, and read it into memory would be much appreciated.
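For what it's worth, R's documentation for `unzip` notes that the internal method may truncate archive members of 4 GB or more, which may be related to the failure around 4 GB; extracting with an external `unzip` program is one possible workaround. Once the CSV is on disk, it does not have to be loaded in one piece. The sketch below streams a CSV in chunks using base R only. The helper name `process_csv_in_chunks` and the small demo file are hypothetical stand-ins, and the approach assumes no field contains an embedded newline.

```r
# Hypothetical helper: stream a CSV in fixed-size chunks so the whole file
# never has to sit in memory at once.
# Assumption: no quoted field spans more than one line.
process_csv_in_chunks <- function(path, chunk_size, fun) {
  con <- file(path, open = "r")
  on.exit(close(con))
  header <- readLines(con, n = 1)            # keep the header for every chunk
  repeat {
    lines <- readLines(con, n = chunk_size)  # next block of raw lines
    if (length(lines) == 0) break            # end of file reached
    chunk <- read.csv(text = c(header, lines), stringsAsFactors = FALSE)
    fun(chunk)                               # filter, aggregate, or append to a database here
  }
  invisible(NULL)
}

# Small stand-in file so the sketch runs without the 8 GB download.
demo_csv <- tempfile(fileext = ".csv")
write.csv(data.frame(id = 1:10, state = rep(c("NY", "CA"), 5)),
          demo_csv, row.names = FALSE)

rows_seen <- 0
process_csv_in_chunks(demo_csv, chunk_size = 4, fun = function(chunk) {
  rows_seen <<- rows_seen + nrow(chunk)      # count rows across all chunks
})
print(rows_seen)
```

For the real file, a much larger `chunk_size` would make sense, and keeping only the needed columns or rows inside `fun` is what keeps memory use bounded.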

CodePudding user response:

temp is the path to the file, not just the directory. By default, tempfile does not add a file extension. One can be added by using tempfile(fileext = ".zip").

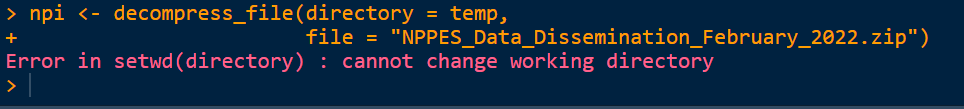

Consequently, decompress_file cannot set the working directory to a file. Try this:

x <- decompress_file(directory = dirname(temp), file = basename(temp))
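To illustrate the split that the call above relies on, here is a small sketch using only base R. The printed paths differ from session to session, so no particular output is assumed.

```r
# Ask for a temp path that carries a .zip extension.
temp <- tempfile(fileext = ".zip")

print(temp)             # full path, ending in .zip
print(dirname(temp))    # directory part: what a "directory" argument expects
print(basename(temp))   # file-name part: what a "file" argument expects
```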

CodePudding user response:

It might be a file permission issue. To get around it, work in a directory you're already in, or one you know you have access to.

# DOWNLOAD THE FILE

# to a directory you can access, and name the file. No need to overcomplicate this.

download.file("https://download.cms.gov/nppes/NPPES_Data_Dissemination_February_2022.zip",
              destfile = "/home/myName/myProjectName/npi.zip")

# use the decompress function if you need to, though unzip might work

x <- decompress_file(directory = "/home/myName/myProjectName/",
                     file = "npi.zip")

# remove .zip file if you need the space back

file.remove("/home/myName/myProjectName/npi.zip")
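Since `decompress_file` is not defined in this thread, here is a hedged alternative that relies only on base R's `download.file` and `unzip`. The helper name `fetch_and_extract` is hypothetical, and the sketch assumes an external `unzip` program is installed and on the PATH. It also raises the `timeout` option, because the default of 60 seconds is often too short for large downloads.

```r
# Hypothetical helper: download a zip into a known directory and extract it there.
fetch_and_extract <- function(url, zip_path, exdir = dirname(zip_path)) {
  # Temporarily allow up to an hour; restore the previous setting on exit.
  old <- options(timeout = max(3600, getOption("timeout")))
  on.exit(options(old))

  # mode = "wb" writes the archive as binary (matters mostly on Windows).
  download.file(url, destfile = zip_path, mode = "wb")

  # unzip = "unzip" delegates to the external program (assumed to be installed)
  # instead of R's internal method.
  unzip(zip_path, exdir = exdir, unzip = "unzip")
  invisible(exdir)
}

# Example call (commented out because it downloads a very large file):
# fetch_and_extract(
#   "https://download.cms.gov/nppes/NPPES_Data_Dissemination_February_2022.zip",
#   zip_path = file.path(getwd(), "npi.zip")
# )
```

Building the path with `file.path(getwd(), ...)` avoids retyping the project directory by hand, which matters on Linux because paths are case-sensitive.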