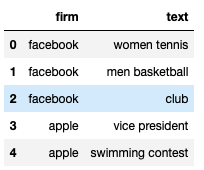

I have a sample DataFrame:

    import numpy as np
    import pandas as pd

    df = pd.DataFrame(np.array([['facebook', "women tennis"], ['facebook', "men basketball"], ['facebook', 'club'],
                                ['apple', "vice president"], ['apple', 'swimming contest']]),
                      columns=['firm', 'text'])

Now I'd like to calculate the degree of text similarity within each firm using word embeddings. For example, the value for facebook would be the average of the pairwise cosine similarities between rows 0, 1, and 2. The final DataFrame should have a column 'mean_cos_between_items' next to each row. Since this is a within-firm pairwise comparison, the value is the same for every row of a given firm.
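Written as a formula, the quantity described above for a firm with n texts, whose averaged word vectors are v_1, ..., v_n, is the mean over all n(n-1)/2 unordered pairs (for facebook, n = 3 gives three pairs):

```latex
\bar{s} = \frac{2}{n(n-1)} \sum_{1 \le i < j \le n} \frac{v_i \cdot v_j}{\lVert v_i \rVert \, \lVert v_j \rVert}
```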

I wrote the code below:

    from itertools import combinations

    import numpy as np
    import gensim
    from gensim import utils
    from gensim.models import Word2Vec
    from gensim.models import KeyedVectors
    from gensim.scripts.glove2word2vec import glove2word2vec
    from sklearn.metrics.pairwise import cosine_similarity

    # model / model_glove: pretrained vectors loaded elsewhere (loading code not shown)

    # map each word to vector space
    def represent(sentence):
        vectors = []
        for word in sentence:
            try:
                vector = model.wv[word]
                vectors.append(vector)
            except KeyError:
                pass
        return np.array(vectors).mean(axis=0)

    # get average if more than 1 word is included in the "text" column
    def document_vector(items):
        # remove out-of-vocabulary words
        doc = [word for word in items if word in model_glove.vocab]
        if doc:
            doc_vector = model_glove[doc]
            mean_vec = np.mean(doc_vector, axis=0)
        else:
            mean_vec = None
        return mean_vec

    # get average pairwise cosine similarity score
    def mean_cos_sim(grp):
        output = []
        for i, j in combinations(grp.index.tolist(), 2):
            doc_vec = document_vector(grp.iloc[i]['text'])
            if doc_vec is not None and len(doc_vec) > 0:
                sim = cosine_similarity(document_vector(grp.iloc[i]['text']).reshape(1, -1),
                                        document_vector(grp.iloc[j]['text']).reshape(1, -1))
                output.append([i, j, sim])
        return np.mean(np.array(output), axis=0)

    # save the result to a new column
    df['mean_cos_between_items'] = df.groupby(['firm']).apply(mean_cos_sim)

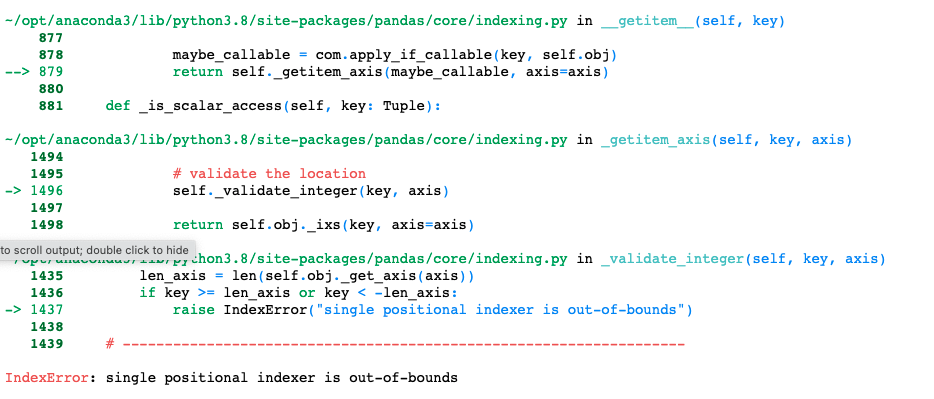

However, I got the following error:

Could you kindly help? Thanks!

Answer:

Remove the .vocab in model_glove.vocab: the vocab attribute was removed from KeyedVectors in gensim 4.0, and you can test membership directly with word in model_glove. Edit: the text also needs split(), so that you iterate over words rather than characters:

    # get average if more than 1 word is included in the "text" column
    def document_vector(items):
        # remove out-of-vocabulary words
        doc = [word for word in items.split() if word in model_glove]
        if doc:
            doc_vector = model_glove[doc]
            mean_vec = np.mean(doc_vector, axis=0)
        else:
            mean_vec = None
        return mean_vec
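To try the fixed document_vector logic without downloading a model, here is a sketch with a tiny hypothetical dictionary (toy_glove) standing in for model_glove. A dict supports the same word in ... membership test, but it cannot be indexed with a list, so model_glove[doc] is replaced by a list comprehension here:

```python
import numpy as np

# Hypothetical toy embeddings standing in for model_glove (a gensim KeyedVectors
# object also supports `word in kv` and `kv[word]`).
toy_glove = {
    "women": np.array([1.0, 0.0, 0.0]),
    "tennis": np.array([0.0, 1.0, 0.0]),
    "club": np.array([0.0, 0.0, 1.0]),
}

def document_vector(text, kv=toy_glove):
    """Average the vectors of the in-vocabulary words of `text`, or return None."""
    # split() tokenizes on whitespace; iterating over the raw string would yield characters
    doc = [word for word in text.split() if word in kv]
    if not doc:
        return None
    return np.mean([kv[word] for word in doc], axis=0)

print(document_vector("women tennis"))    # [0.5 0.5 0. ]
print(document_vector("vice president"))  # None: both words are out of vocabulary
```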

Here you iterate over pairs of index labels when you actually want the text values, so drop the .index. (Inside each group the rows keep their original labels, e.g. 3 and 4 for apple, while iloc expects positions, so grp.iloc[3] is out of range.) Also, you append i and j to output together with sim, so np.mean would average over the indices as well. Since you don't seem to need i and j, just put the resulting similarities in a list and take the mean of that list:

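    from itertools import combinations

    import numpy as np
    from sklearn.metrics.pairwise import cosine_similarity

    # get mean pairwise cosine similarity score
    def mean_cos_sim(grp):
        output = []
        for i, j in combinations(grp.tolist(), 2):
            vec_i, vec_j = document_vector(i), document_vector(j)
            # skip pairs where either text has no in-vocabulary words
            if vec_i is not None and vec_j is not None:
                sim = cosine_similarity(vec_i.reshape(1, -1), vec_j.reshape(1, -1))
                output.append(sim)
        return np.mean(output, axis=0)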
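To see the label-versus-position problem in the original loop (one likely cause of the error, since the message itself isn't shown in the question), here is a small sketch using the apple group on its own. The apple Series below is a hypothetical stand-in for that group's "text" column, which keeps its original labels 3 and 4:

```python
from itertools import combinations

import pandas as pd

# Stand-in for the apple group's "text" column: original labels 3 and 4 are kept
apple = pd.Series(["vice president", "swimming contest"], index=[3, 4])

# Pairs of index labels, as in the question's loop
label_pairs = list(combinations(apple.index.tolist(), 2))
# Pairs of values, as in the fixed loop
value_pairs = list(combinations(apple.tolist(), 2))

print(label_pairs)  # [(3, 4)]
print(value_pairs)  # [('vice president', 'swimming contest')]

# iloc expects positions 0..len(apple)-1, so label 3 is out of range here
try:
    apple.iloc[3]
except IndexError as exc:
    print("IndexError:", exc)
```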

Here you try to add the result as a column, but the number of rows differs: the grouped result has one row per firm, while the original DataFrame has one row per text. So store the result separately (you can then optionally merge/join it with the original DataFrame on the firm column):

    df = pd.DataFrame(np.array(
        [['facebook', "women tennis"], ['facebook', "men basketball"], ['facebook', 'club'],
         ['apple', "vice president"], ['apple', 'swimming contest']]), columns=['firm', 'text'])

    df_grpd = df.groupby(['firm'])["text"].apply(mean_cos_sim)

Overall, this gives you (Edit: updated):

    print(df_grpd)

    firm
    apple       [[0.53190523]]
    facebook    [[0.83989316]]
    Name: text, dtype: object
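If you do want the value next to every row, as the question asks, one option is to map the per-firm result back onto the firm column. The sketch below uses made-up two-dimensional vectors instead of GloVe; the vec column and the helpers cosine and mean_pairwise_cos are hypothetical:

```python
from itertools import combinations

import numpy as np
import pandas as pd

# Hypothetical per-row document vectors; in the real code they would come from document_vector(text)
df = pd.DataFrame({
    "firm": ["facebook", "facebook", "facebook", "apple", "apple"],
    "vec": [np.array([1.0, 0.0]), np.array([0.0, 1.0]), np.array([1.0, 1.0]),
            np.array([1.0, 0.0]), np.array([1.0, 0.0])],
})

def cosine(a, b):
    """Cosine similarity of two 1-D vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def mean_pairwise_cos(vectors):
    """Mean cosine similarity over all unordered pairs of vectors."""
    return float(np.mean([cosine(a, b) for a, b in combinations(vectors, 2)]))

# One value per firm (a Series indexed by firm) ...
per_firm = df.groupby("firm")["vec"].apply(lambda s: mean_pairwise_cos(s.tolist()))
# ... broadcast back to every row of the original DataFrame
df["mean_cos_between_items"] = df["firm"].map(per_firm)
print(df[["firm", "mean_cos_between_items"]])
```

Series.map looks up each row's firm in the per-firm result, so all rows of a firm get the same value; a merge on firm, as suggested above, gives the same result.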

Edit:

I just noticed that the very high scores were caused by missing tokenization; see the changed part. Without split(), the loop iterates over characters instead of words, so it compares character vectors, whose similarities tend to be very high.
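The difference is easy to see in plain Python. Iterating over a string yields characters, and since pretrained vocabularies typically include single letters, those characters are not filtered out as out-of-vocabulary:

```python
text = "club"

# Without split(), iterating over a string yields single characters ...
chars = [token for token in text]
# ... while split() yields whitespace-separated words
words = "women tennis".split()

print(chars)  # ['c', 'l', 'u', 'b']
print(words)  # ['women', 'tennis']
```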

Answer:

Note that sklearn.metrics.pairwise.cosine_similarity, when passed a single matrix X, returns the pairwise similarities between all samples (rows) in X, so it isn't necessary to construct the pairs manually.
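For intuition, cosine_similarity(X) amounts to normalizing each row to unit length and multiplying the result by its own transpose. A NumPy-only sketch of that idea (pairwise_cosine is a hypothetical helper and, unlike scikit-learn, it does not handle all-zero rows):

```python
import numpy as np

def pairwise_cosine(X):
    """n x n matrix of cosine similarities between the rows of X
    (assumes no all-zero rows)."""
    X = np.asarray(X, dtype=float)
    X_normed = X / np.linalg.norm(X, axis=1, keepdims=True)  # unit-length rows
    return X_normed @ X_normed.T                              # dot products = cosines

X = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
S = pairwise_cosine(X)
print(S.shape)  # (3, 3)
```

The result is symmetric with ones on the diagonal, since every row is compared with itself as well as with every other row.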

Say you construct your average embeddings with something like this (I'm using the glove-twitter-25 model here):

    import numpy as np

    def mean_embeddings(s):
        """Turn a list of words into their mean embedding (out-of-vocabulary words are skipped)."""
        return np.mean([model_glove.get_vector(x) for x in s if x in model_glove], axis=0)

    df["embeddings"] = df.text.str.split().apply(mean_embeddings)

so df.embeddings looks like this:

    >>> df.embeddings
    0    [-0.2597, -0.153495, -0.5106895, -1.070115, 0....
    1    [0.0600965, 0.39806002, -0.45810497, -1.375365...
    2    [-0.43819, 0.66232, 0.04611, -0.91103, 0.32231...
    3    [0.1912625, 0.0066999793, -0.500785, -0.529915...
    4    [-0.82556, 0.24555385, 0.38557374, -0.78941, 0...
    Name: embeddings, dtype: object

You can get the mean pairwise cosine similarity like so, with the main point being that you can directly apply cosine_similarity to the adequately prepared matrix for each group:

    (
        df.groupby("firm").embeddings  # extract 'embeddings' for each group
        .apply(np.stack)               # turn the sequence of arrays into a proper matrix
        .apply(cosine_similarity)      # the magic: compute the pairwise similarity matrix
        .apply(np.mean)                # get the mean
    )

which, for the model I used, results in:

    firm
    apple       0.765953
    facebook    0.893262
    Name: embeddings, dtype: float32
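One caveat: the matrix returned by cosine_similarity includes the diagonal (each text compared with itself, similarity 1) and counts every pair twice, so np.mean over the whole matrix is not the mean over distinct pairs computed in the first answer. For a firm with n texts and mean pairwise similarity p, the full-matrix mean is (1 + (n - 1)·p) / n. The two answers' numbers are in fact consistent with this: (1 + 0.53190523) / 2 ≈ 0.765953 for apple and (1 + 2 × 0.83989316) / 3 ≈ 0.893262 for facebook. If only distinct pairs should count, a minimal sketch averages the strict upper triangle instead (mean_offdiagonal is a hypothetical helper, and the matrix values are made up):

```python
import numpy as np

def mean_offdiagonal(S):
    """Mean of the strict upper triangle of a square similarity matrix,
    i.e. the mean over distinct unordered pairs (diagonal excluded)."""
    S = np.asarray(S)
    i, j = np.triu_indices(S.shape[0], k=1)  # index pairs with i < j
    return float(S[i, j].mean())

# Made-up 3 x 3 similarity matrix
S = np.array([
    [1.0, 0.2, 0.4],
    [0.2, 1.0, 0.6],
    [0.4, 0.6, 1.0],
])
print(mean_offdiagonal(S))  # about 0.4
print(S.mean())             # about 0.6, because the diagonal ones are included
```

In the pipeline above, replacing the final .apply(np.mean) with .apply(mean_offdiagonal) would give the distinct-pair mean. For a firm with a single text there are no pairs, so the result would be NaN (with a NumPy warning).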