I trained two models having same trainable parameters and same structure. But the Functional model performs better compared to Sequential model. Trying to predict a vector from a given image. The image output is from vgg16 model. excludes top layer. When compared the original vector with the predicted vector. Functional model tends to have greater similarity with original vector. Can someone explain why does this happen?

Code below -

from keras.models import Sequential

import numpy as np

from keras.models import Model

from keras.layers import Input

from keras.layers import Dense

from tensorflow import keras

from numpy import random

from sklearn.metrics.pairwise import cosine_similarity

epochs=2000

x = random.random_sample((1, 4096))

y = np.array([ 0.01897711, 0.00196044, -0.0100884 , 0.08048831, 0.07945059, -0.13450155, -0.00228113, 0.30315322, -0.2170798 , 0.12462355, -0.12226178, -0.19237731, -0.14406398, 0.11556922, 0.04466464, -0.22505943, -0.07492258, -0.05925079, 0.02871693, -0.32403016, 0.16885516, -0.01677704, 0.03490563, 0.08720589, -0.03105724, -0.10850648, 0.04820024, -0.1348836 , -0.26358405, 0.08388387, 0.13177398, 0.00133367, -0.01074621, -0.01703981, 0.14912938, 0.13562258, 0.12910905, -0.02097122, -0.05823291, -0.21523051, -0.1051832 , -0.0112495 , -0.02306462, 0.30883443, 0.24211378, -0.01332151, -0.04171557, -0.07624041, 0.05742156, 0.17561561, -0.05971769, -0.22914584, -0.2354534 , -0.12413627, -0.02892042, -0.08661073, 0.14135012, -0.15514424, -0.09965582, -0.13770337, 0.09548005, 0.0925705 , -0.10030732, 0.16057852, -0.17537649, 0.23076315, -0.12471516, 0.2811343 , -0.1576465 , 0.17364068, 0.0658261 , 0.044597 , 0.27390295, -0.04520088, 0.00317772, 0.05926268, 0.06897669, -0.2579084 , -0.30417407, -0.08170868, -0.10205928, -0.14339833, -0.2291172 , 0.1584655 , -0.108877 , 0.03841971, -0.02097263, -0.00477816, -0.08784705, 0.00944081, 0.01409219, 0.1655657 , 0.09393094, 0.233216 , 0.28611556, -0.00573498, 0.1374636 , -0.19641444, 0.14472656, 0.254758 , -0.26166946, 0.30998066, 0.1026804 , -0.0578127 , -0.0882837 , -0.25514072, 0.12337176, 0.1786545 , 0.04052542, -0.17535737, -0.05401937, -0.27649277, -0.04952267, 0.08122452, 0.04374097, -0.07044917, 0.0653659 , -0.36983526, -0.02356564, -0.01144519, 0.1440273 , 0.12321867, 0.10163002, -0.13444787, -0.06148207, 0.11309719, -0.24679276, -0.04028287, -0.0930292 , -0.06392674, 0.10477038, 0.00828285, -0.11968364, -0.16145884, -0.08808196, 0.14231506, -0.02768413, -0.24046096, 0.02477906, -0.3868386 , 0.08224358, -0.30728677, -0.31634584, -0.24805053, -0.19289431, -0.04890246, -0.23479757, 0.13149938, 0.02801071, 0.12761658, 0.02897108, -0.14499697, 0.05322106, 0.06153642, -0.21517622, 0.255269 , 0.08573797, 0.09940388, -0.10590497, 0.13063994, 0.11253715, 0.15636472, -0.19782121, 0.01258014, -0.04391019, 0.16168897, -0.05669969, -0.17957021, -0.04841055, -0.00175814, -0.25425357, 0.14485207, 0.08319512, -0.20990393, 0.04344559, 0.20995931, -0.16608813, 0.28736553, 0.12240092, 0.12146739, 0.05718496, 0.01994314, 0.09686041, 0.13452487, 0.1052431 , 0.10266875, -0.01051683, 0.01536175, 0.25623122, 0.11273847, 0.06577922, -0.09992851, -0.02046986, -0.11516961, 0.12051879, 0.00518495, 0.0988002 , -0.279763 , -0.09997523, -0.04474135])

y = y.reshape(1,-1)

inputs = Input(shape=(4096,))

decoder = Dense(256, activation="sigmoid")(inputs)

decoder = Dense(256, activation="sigmoid")(decoder)

decoder = Dense(256, activation="sigmoid")(decoder)

outputs = Dense(200, activation="sigmoid")(decoder)

functional = Model(inputs=inputs, outputs=outputs)

opt = keras.optimizers.Adam(learning_rate=0.01)

functional.compile(loss="mse", optimizer=opt)

sequen = Sequential()

sequen.add(Dense(256,input_shape=(4096,),activation="sigmoid"))

sequen.add(Dense(256,activation="sigmoid"))

sequen.add(Dense(256,activation="sigmoid"))

sequen.add(Dense(200,activation="sigmoid"))

sequen.compile(loss="mse", optimizer=opt)

functional.fit(x,y,verbose=1,validation_data=(x, y),epochs=epochs)

sequen.fit(x,y,verbose=1,validation_data=(x, y),epochs=epochs)

functional_output = cosine_similarity(functional.predict(x),y)

sequential_output = cosine_similarity(sequen.predict(x),y)

print(functional_output,sequential_output)

#Calculating cosine_similarity between both outputs. Functional api gives gives better output.

#output - array([[0.65056009]]), array([[0.19631703]])

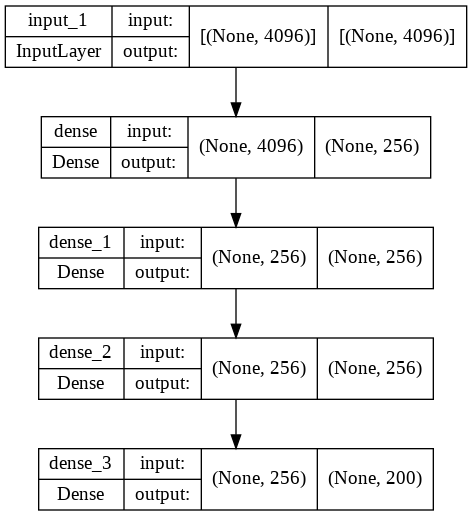

Functional Model Structure

Model: "model"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_1 (InputLayer) [(None, 4096)] 0

dense (Dense) (None, 256) 1048832

dense_1 (Dense) (None, 256) 65792

dense_2 (Dense) (None, 256) 65792

dense_3 (Dense) (None, 200) 51400

=================================================================

Total params: 1,231,816

Trainable params: 1,231,816

Non-trainable params: 0

_________________________________________________________________

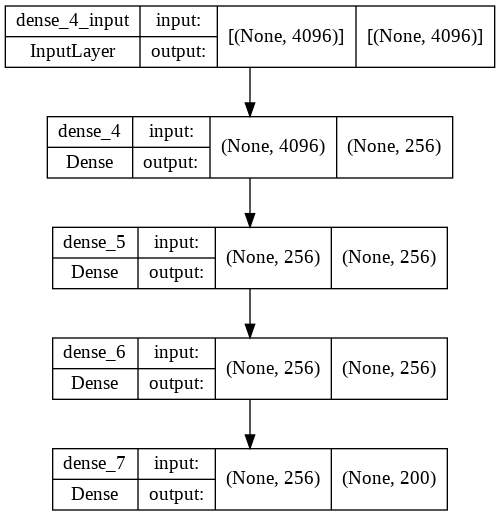

Sequential Model Structure

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_4 (Dense) (None, 256) 1048832

dense_5 (Dense) (None, 256) 65792

dense_6 (Dense) (None, 256) 65792

dense_7 (Dense) (None, 200) 51400

=================================================================

Total params: 1,231,816

Trainable params: 1,231,816

Non-trainable params: 0

_________________________________________________________________

CodePudding user response:

I think the main problem is that you use the same optimizer to train your models, and after training your first model, the optimizer already has an internal state. Using two separate optimizers seems to yield (almost) identical results:

...

opt1 = keras.optimizers.Adam(learning_rate=0.01)

opt2 = keras.optimizers.Adam(learning_rate=0.01)

...

...

[[0.65034289]] [[0.65033581]]

CodePudding user response:

The two models are initialized with different weights and biases. You can initialize the weights and biases of the model as a zeros matrix by adding the parameters, kernel_initializer=tf.keras.initializers.Zeros() and bias_initializer=tf.keras.initializers.Zeros(). And if you run this code, you will see similar results, but not identical.

As pointed out by @AloneTogether, after training your first model, the optimizer already has an internal state. So, initializing that optimizer again would fix this issue.

So, if you run this code, you will get identical results:

from keras.models import Sequential

import numpy as np

from keras.models import Model

from keras.layers import Input

from keras.layers import Dense

from tensorflow import keras

import tensorflow as tf

from numpy import random

from sklearn.metrics.pairwise import cosine_similarity

epochs=200

x = random.random_sample((1, 4096))

y = np.array([ 0.01897711, 0.00196044, -0.0100884 , 0.08048831, 0.07945059, -0.13450155, -0.00228113, 0.30315322, -0.2170798 , 0.12462355, -0.12226178, -0.19237731, -0.14406398, 0.11556922, 0.04466464, -0.22505943, -0.07492258, -0.05925079, 0.02871693, -0.32403016, 0.16885516, -0.01677704, 0.03490563, 0.08720589, -0.03105724, -0.10850648, 0.04820024, -0.1348836 , -0.26358405, 0.08388387, 0.13177398, 0.00133367, -0.01074621, -0.01703981, 0.14912938, 0.13562258, 0.12910905, -0.02097122, -0.05823291, -0.21523051, -0.1051832 , -0.0112495 , -0.02306462, 0.30883443, 0.24211378, -0.01332151, -0.04171557, -0.07624041, 0.05742156, 0.17561561, -0.05971769, -0.22914584, -0.2354534 , -0.12413627, -0.02892042, -0.08661073, 0.14135012, -0.15514424, -0.09965582, -0.13770337, 0.09548005, 0.0925705 , -0.10030732, 0.16057852, -0.17537649, 0.23076315, -0.12471516, 0.2811343 , -0.1576465 , 0.17364068, 0.0658261 , 0.044597 , 0.27390295, -0.04520088, 0.00317772, 0.05926268, 0.06897669, -0.2579084 , -0.30417407, -0.08170868, -0.10205928, -0.14339833, -0.2291172 , 0.1584655 , -0.108877 , 0.03841971, -0.02097263, -0.00477816, -0.08784705, 0.00944081, 0.01409219, 0.1655657 , 0.09393094, 0.233216 , 0.28611556, -0.00573498, 0.1374636 , -0.19641444, 0.14472656, 0.254758 , -0.26166946, 0.30998066, 0.1026804 , -0.0578127 , -0.0882837 , -0.25514072, 0.12337176, 0.1786545 , 0.04052542, -0.17535737, -0.05401937, -0.27649277, -0.04952267, 0.08122452, 0.04374097, -0.07044917, 0.0653659 , -0.36983526, -0.02356564, -0.01144519, 0.1440273 , 0.12321867, 0.10163002, -0.13444787, -0.06148207, 0.11309719, -0.24679276, -0.04028287, -0.0930292 , -0.06392674, 0.10477038, 0.00828285, -0.11968364, -0.16145884, -0.08808196, 0.14231506, -0.02768413, -0.24046096, 0.02477906, -0.3868386 , 0.08224358, -0.30728677, -0.31634584, -0.24805053, -0.19289431, -0.04890246, -0.23479757, 0.13149938, 0.02801071, 0.12761658, 0.02897108, -0.14499697, 0.05322106, 0.06153642, -0.21517622, 0.255269 , 0.08573797, 0.09940388, -0.10590497, 0.13063994, 0.11253715, 0.15636472, -0.19782121, 0.01258014, -0.04391019, 0.16168897, -0.05669969, -0.17957021, -0.04841055, -0.00175814, -0.25425357, 0.14485207, 0.08319512, -0.20990393, 0.04344559, 0.20995931, -0.16608813, 0.28736553, 0.12240092, 0.12146739, 0.05718496, 0.01994314, 0.09686041, 0.13452487, 0.1052431 , 0.10266875, -0.01051683, 0.01536175, 0.25623122, 0.11273847, 0.06577922, -0.09992851, -0.02046986, -0.11516961, 0.12051879, 0.00518495, 0.0988002 , -0.279763 , -0.09997523, -0.04474135])

y = y.reshape(1,-1)

inputs = Input(shape=(4096,))

decoder = Dense(256, activation="sigmoid", kernel_initializer=tf.keras.initializers.Zeros(), bias_initializer=tf.keras.initializers.Zeros())(inputs)

decoder = Dense(256, activation="sigmoid", kernel_initializer=tf.keras.initializers.Zeros(), bias_initializer=tf.keras.initializers.Zeros())(decoder)

decoder = Dense(256, activation="sigmoid", kernel_initializer=tf.keras.initializers.Zeros(), bias_initializer=tf.keras.initializers.Zeros())(decoder)

outputs = Dense(200, activation="sigmoid", kernel_initializer=tf.keras.initializers.Zeros(), bias_initializer=tf.keras.initializers.Zeros())(decoder)

functional = Model(inputs=inputs, outputs=outputs)

opt = keras.optimizers.Adam(learning_rate=0.01)

functional.compile(loss="mse", optimizer=opt)

sequen = Sequential()

sequen.add(Input(shape=(4096,)))

sequen.add(Dense(256,activation="sigmoid", kernel_initializer=tf.keras.initializers.Zeros(), bias_initializer=tf.keras.initializers.Zeros()))

sequen.add(Dense(256,activation="sigmoid", kernel_initializer=tf.keras.initializers.Zeros(), bias_initializer=tf.keras.initializers.Zeros()))

sequen.add(Dense(256,activation="sigmoid", kernel_initializer=tf.keras.initializers.Zeros(), bias_initializer=tf.keras.initializers.Zeros()))

sequen.add(Dense(200,activation="sigmoid", kernel_initializer=tf.keras.initializers.Zeros(), bias_initializer=tf.keras.initializers.Zeros()))

opt2 = keras.optimizers.Adam(learning_rate=0.01)

sequen.compile(loss="mse", optimizer=opt2)

functional.fit(x,y,verbose=1,validation_data=(x, y),epochs=epochs)

sequen.fit(x,y,verbose=1,validation_data=(x, y),epochs=epochs)

functional_output = cosine_similarity(functional.predict(x),y)

sequential_output = cosine_similarity(sequen.predict(x),y)

print(functional_output,sequential_output)