I try to get daily informations about some investment funds from site

Thanks for your help!!

CodePudding user response:

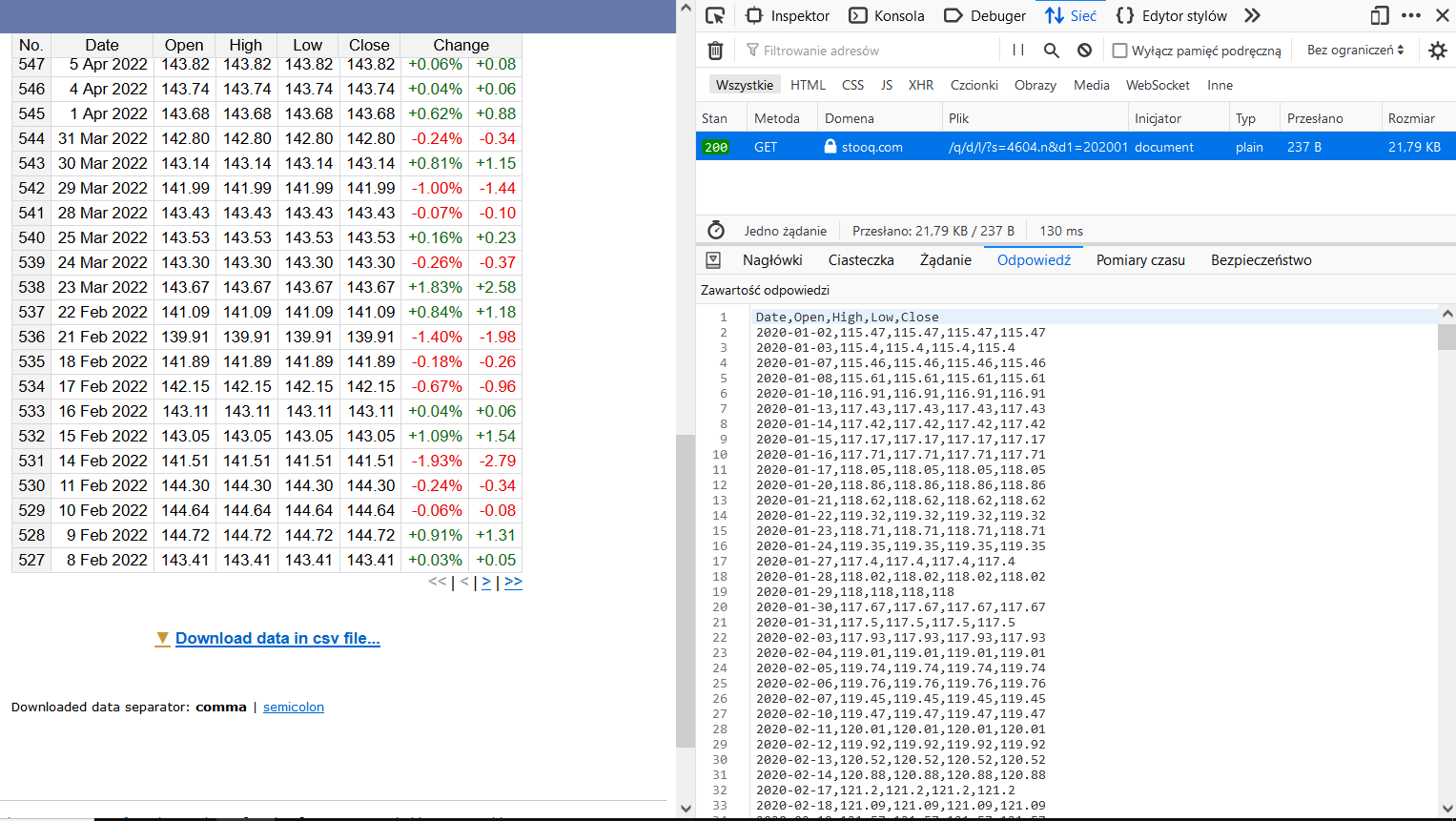

You can download the data in CSV format for example using pandas. Just modify the URL:

import pandas as pd

url = "https://stooq.com/q/d/l/?s=4604.n&d1=20200101&d2=20220507&i=d"

df = pd.read_csv(url)

print(df.head().to_markdown())

Prints first 5 rows:

| Date | Open | High | Low | Close | |

|---|---|---|---|---|---|

| 0 | 2020-01-02 | 115.47 | 115.47 | 115.47 | 115.47 |

| 1 | 2020-01-03 | 115.4 | 115.4 | 115.4 | 115.4 |

| 2 | 2020-01-07 | 115.46 | 115.46 | 115.46 | 115.46 |

| 3 | 2020-01-08 | 115.61 | 115.61 | 115.61 | 115.61 |

| 4 | 2020-01-10 | 116.91 | 116.91 | 116.91 | 116.91 |

CodePudding user response:

Now my full code is:

from bs4 import BeautifulSoup

import requests

import datetime as dt

# the investment funds list

number_to_symbol = {

'1': '2711.n',

'2': '3187.n',

'3': '1472.n',

'4': '4604.n',

'5': '2735.n'

}

def url_get(num):

## Make URL address with the investment fund symbol and dates range (01.01.2020 - today)

symbol = number_to_symbol[str(num)]

end_time = f"20{dt.date.today().strftime('%y%m%d')}"

return f'https://stooq.com/q/d/l/?s={symbol}&d1=20200101&d2={end_time}&i=d'

url_name = url_get(4)

print(f'URL to web-scraping: {url_name}')

text = requests.get(url_name, headers={'User-Agent':'Custom user agent'}).text

print(text)