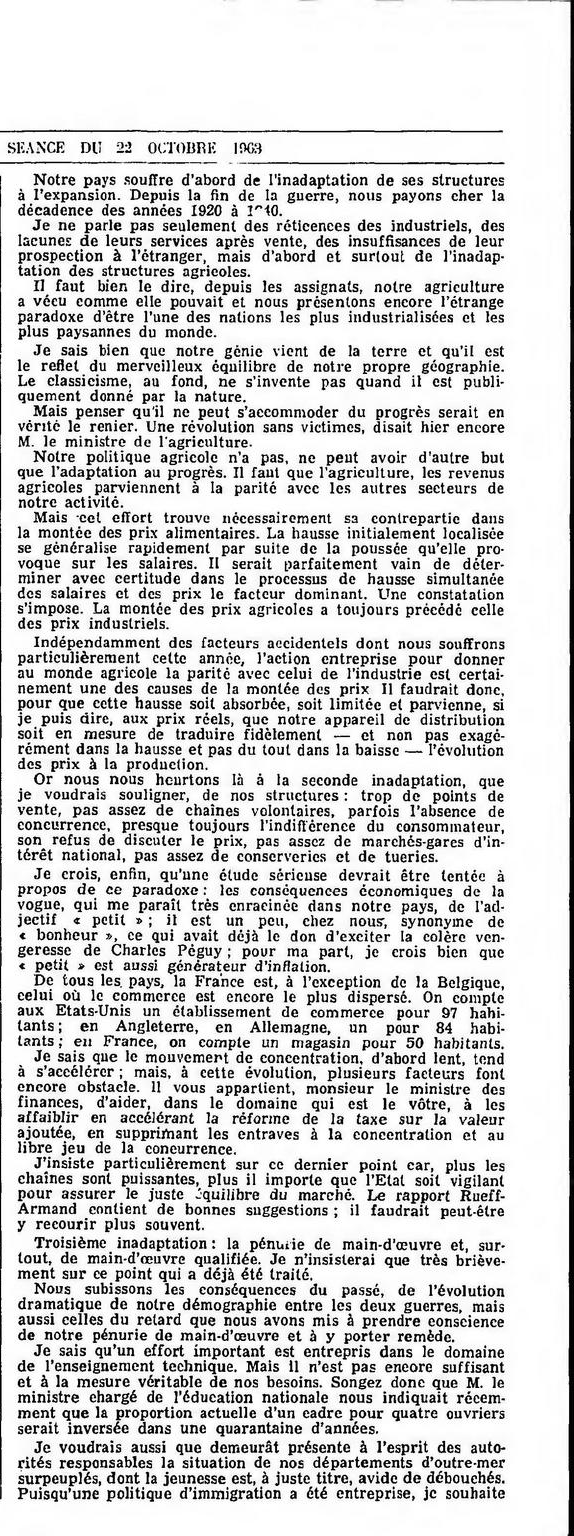

I'm trying to crop both columns from several pages like this one so that I can OCR them later. My plan is to split the page along the vertical line.

What I've got so far is finding the header, so that it can be cropped out:

image = cv2.imread('014-page1.jpg')

im_h, im_w, im_d = image.shape

base_image = image.copy()

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

blur = cv2.GaussianBlur(gray, (7,7), 0)

thresh = cv2.threshold(blur, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)[1]

# Create rectangular structuring element and dilate

kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (50,10))

dilate = cv2.dilate(thresh, kernel, iterations=1)

# Find contours and draw rectangle

cnts = cv2.findContours(dilate, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

cnts = cnts[0] if len(cnts) == 2 else cnts[1]

cnts = sorted(cnts, key=lambda x: cv2.boundingRect(x)[1])

for c in cnts:

    x, y, w, h = cv2.boundingRect(c)

    if h < 20 and w > 250:

        cv2.rectangle(image, (x, y), (x + w, y + h), (36,255,12), 2)

How could I split the page vertically, and grab the text in sequence from the columns? Or alternatively, is there a better way to go about this?

CodePudding user response:

In order to separate out the two columns you have to find the dividing line in the center.

You can use a Sobel derivative filter along the x-axis to find the black vertical line. Follow this tutorial for more details on the Sobel filter operator.

sobel_vertical_lines = cv2.Sobel(img,cv2.CV_64F,1,0,ksize=3) # (1,0) for x direction derivatives

Extract the line position by thresholding the Sobel result and then scanning the center columns for a column with a high concentration of white values.

One way to do this would be to do a column-wise sum and then find the column with the maximum values. But there are other ways to do it.

sum_cols = np.add.reduce(sobel_thresh, axis=0) # axis=0 sums down each column, giving one value per x position

max_col = np.argmax(sum_cols)

If there is no black dividing line, you can skip the Sobel step. Just resize aggressively and search the center of the page for columns with a high concentration of white pixels.
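As a rough sketch of the no-line case: instead of an explicit resize, the code below computes the fraction of white pixels per column directly, which is the same idea expressed as a projection profile. The function name, the `center_fraction` parameter, and the synthetic page are assumptions for illustration.

```python
import numpy as np

def find_gutter_column(binary, center_fraction=0.3):
    """Return the x position of the whitest column near the page center."""
    # Fraction of white pixels in each column (one value per x position)
    white_fraction = (binary == 255).mean(axis=0)
    width = binary.shape[1]
    half_band = int(width * center_fraction / 2)
    start = width // 2 - half_band
    end = width // 2 + half_band
    band = white_fraction[start:end]
    # Several columns may tie for the highest fraction; take the middle one
    best = np.flatnonzero(band == band.max())
    return start + int(best[len(best) // 2])

# Synthetic page (assumption): two solid "text" blocks with a white gutter
page = np.full((100, 200), 255, np.uint8)
page[:, 10:90] = 0
page[:, 110:190] = 0
gutter_x = find_gutter_column(page)
```

Taking the middle of the tied columns only makes sense when they form one contiguous gutter; a real page may need a run-length check on top of this.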

CodePudding user response:

Here's my take on the problem. It involves selecting a middle portion of the image, assuming the vertical line runs through the whole image (or at least passes through the middle of the page). I process this ROI and then reduce it to a single row. From that row I get the starting and ending horizontal coordinates of each crop, and with this information I produce the final cropped images.

I tried to make the algorithm general: it can also split the page if the original image has more than two columns. Let's check out the code:

# Imports:

import numpy as np

import cv2

# Image path:

path = "D://opencvImages//"

fileName = "pmALU.jpg"

# Reading an image in default mode:

inputImage = cv2.imread(path + fileName)

# To grayscale:

grayImage = cv2.cvtColor(inputImage, cv2.COLOR_BGR2GRAY)

# Otsu Threshold:

_, binaryImage = cv2.threshold(grayImage, 0, 255, cv2.THRESH_OTSU)

# Get image dimensions:

(imageHeight, imageWidth) = binaryImage.shape[:2]

# Set middle ROI dimensions:

middleVertical = 0.5 * imageHeight

roiWidth = imageWidth

roiHeight = int(0.1 * imageHeight)

middleRoiVertical = 0.5 * roiHeight

roiY = int(0.5 * imageHeight - middleRoiVertical)
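The ROI arithmetic above can be wrapped in a small function to check the numbers. This is only a sketch: the `middle_band` name, the `fraction` parameter, and the blank test page are hypothetical, chosen to mirror the 10% band used in the snippet.

```python
import numpy as np

def middle_band(image_height, fraction=0.1):
    """Return (y0, y1) of a horizontal band centered vertically on the page."""
    roi_height = int(fraction * image_height)
    roi_y = int(0.5 * image_height - 0.5 * roi_height)
    return roi_y, roi_y + roi_height

# Blank stand-in page (assumption): 1000 rows by 600 columns
page = np.zeros((1000, 600), np.uint8)
y0, y1 = middle_band(page.shape[0])
roi = page[y0:y1, :]
```

For a 1000-pixel-tall page this gives the band from row 450 up to (but not including) row 550.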

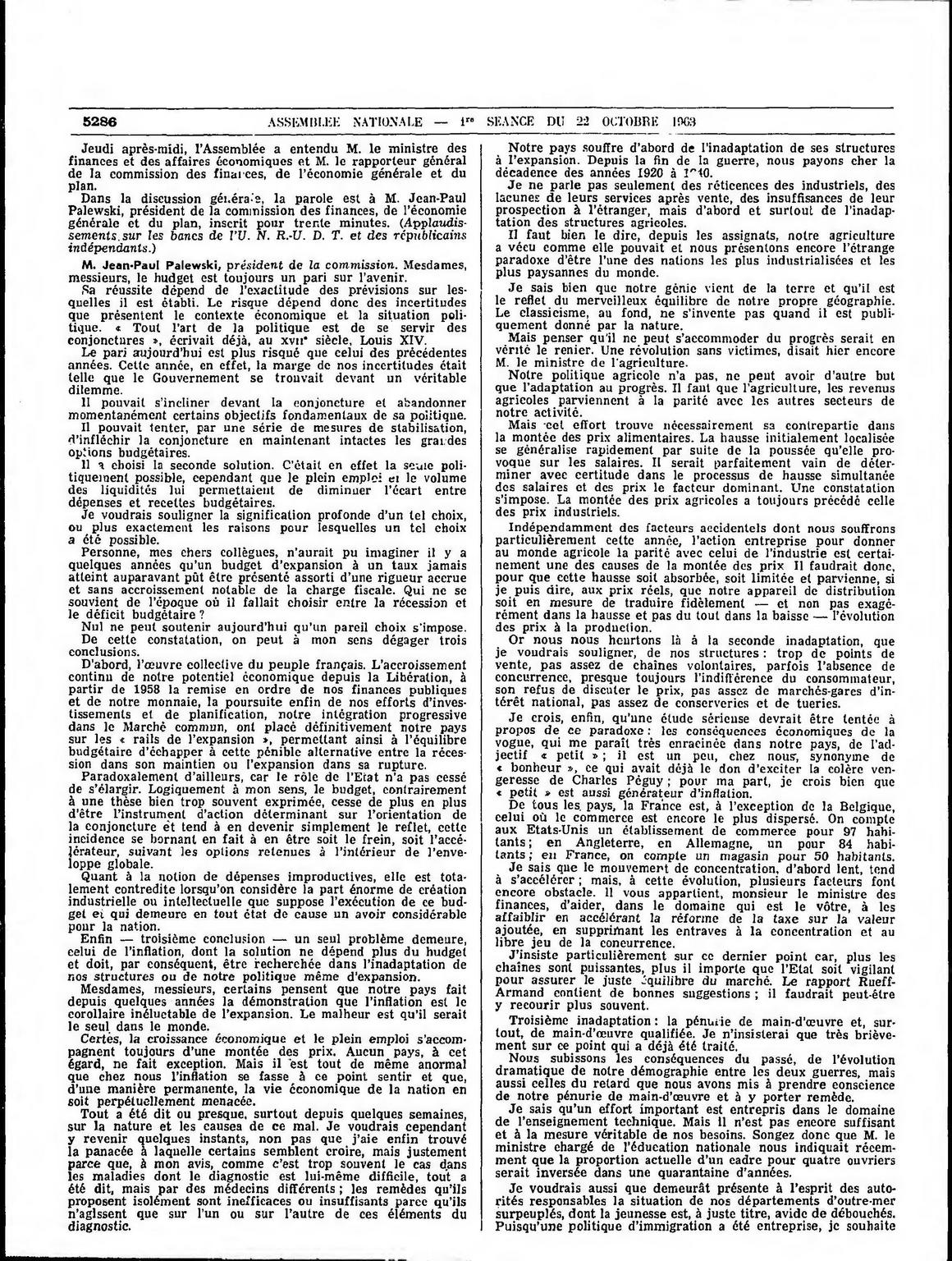

The first portion of the code gets the ROI. I set it to crop around the middle of the image. Let's just visualize the ROI that will be used for processing:

The next step is to crop this:

# Slice the ROI:

middleRoi = binaryImage[roiY:roiY + roiHeight, 0:imageWidth]

showImage("middleRoi", middleRoi)

writeImage(path + "middleRoi", middleRoi)

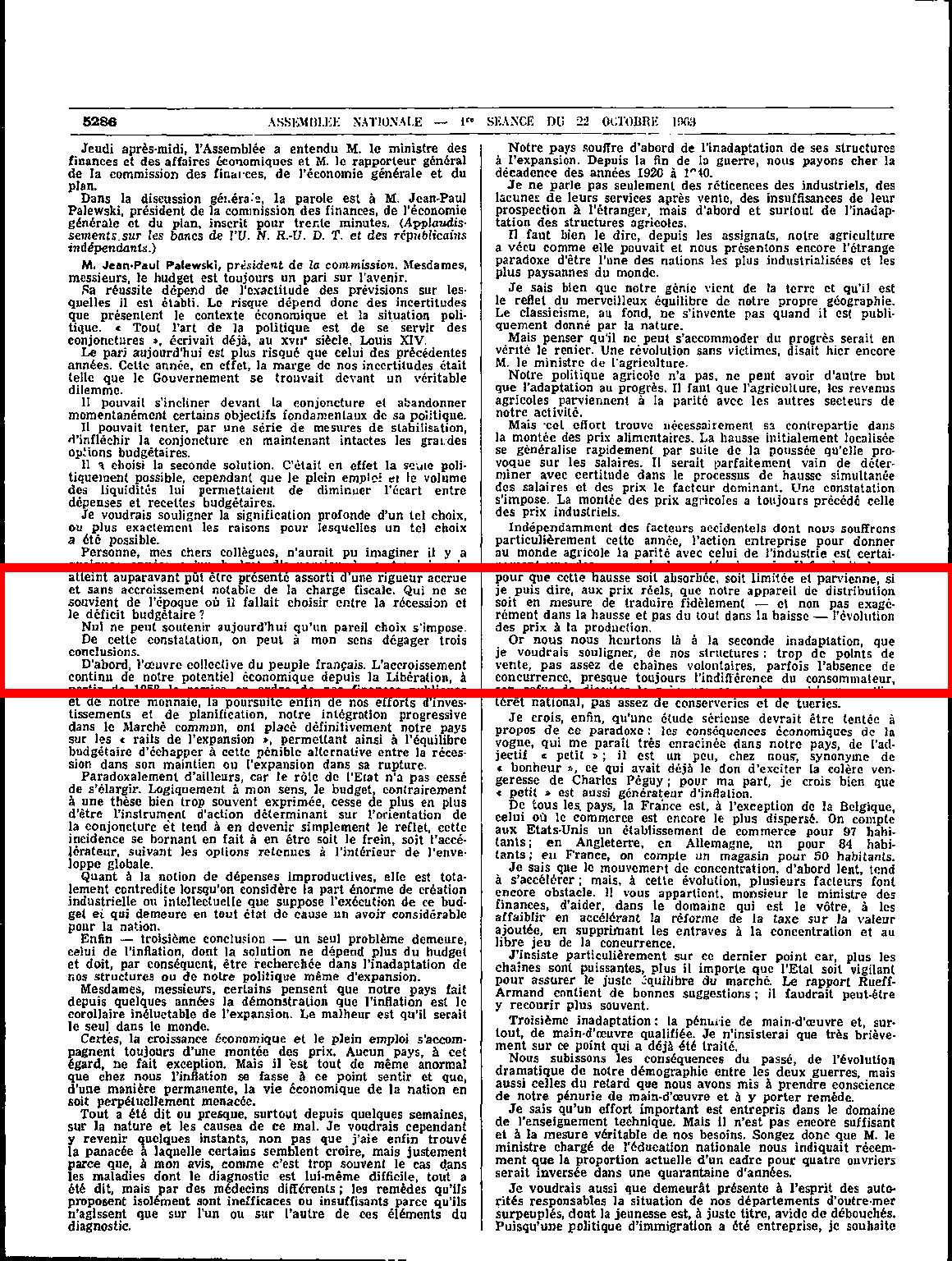

This produces the following crop:

Alright. The idea is to reduce this image to one row. If I take the minimum value of every column and store the results in one row, any column that contains text turns black, so I should get a big white portion at the gutter where the vertical line passes through.

Now, there's a problem here. If I directly reduce this image, this would be the result (the following is an image of the reduced row):

The image is a little bit small, but you can see the row produces two black columns at the sides, followed by two white blobs. That's because the image has been scanned. Additionally, the text seems to be justified, which leaves margins at the sides. I only need the central white blob, with everything else in black.
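To make the reduction concrete, here is a toy example. It uses NumPy's column-wise minimum as a stand-in for `cv2.reduce(..., 0, cv2.REDUCE_MIN)` (an assumption: for this purpose the two should give the same row), and the tiny array is made up for illustration.

```python
import numpy as np

# Toy binary ROI: 255 = white background, 0 = black ink
roi = np.full((4, 10), 255, np.uint8)
roi[1, 1:4] = 0   # some text in the left column
roi[2, 6:9] = 0   # some text in the right column

# Column-wise minimum: a column stays white only if it contains no ink at all
reduced = roi.min(axis=0, keepdims=True)
```

White survives at the outer margins (x = 0 and x = 9) and in the central gutter (x = 4..5), which is why the side margins show up as extra white blobs.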

I can solve this in two steps. First, I draw a white rectangle around the image before reducing it; this takes care of the black columns. Then I flood-fill with black at both ends of the reduced image:

# White rectangle around ROI:

rectangleThickness = int(0.01 * imageHeight)

cv2.rectangle(middleRoi, (0, 0), (roiWidth, roiHeight), 255, rectangleThickness)

# Image reduction to a row:

reducedImage = cv2.reduce(middleRoi, 0, cv2.REDUCE_MIN)

# Flood fill at the extreme corners:

fillPositions = [0, imageWidth - 1]

for i in range(len(fillPositions)):

    # Get flood-fill coordinate:

    x = fillPositions[i]

    currentCorner = (x, 0)

    fillColor = 0

    cv2.floodFill(reducedImage, None, currentCorner, fillColor)
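On a one-row image, flood-filling from each end simply blackens any white run that touches that end. The sketch below shows that effect with plain NumPy rather than `cv2.floodFill`; the function name and the sample row are hypothetical.

```python
import numpy as np

def blacken_edge_runs(row):
    """Set white runs touching either end of a 1 x N row to black."""
    out = row.copy()
    width = out.shape[1]
    # Walk inward from the left end while the pixels are white
    x = 0
    while x < width and out[0, x] == 255:
        out[0, x] = 0
        x += 1
    # Walk inward from the right end while the pixels are white
    x = width - 1
    while x >= 0 and out[0, x] == 255:
        out[0, x] = 0
        x -= 1
    return out

# Sample reduced row (assumption): white margins at both ends, gutter in the middle
row = np.array([[255, 255, 0, 0, 255, 255, 0, 0, 255]], np.uint8)
cleaned = blacken_edge_runs(row)
```

Only the central white blob remains, which is the part needed to locate the gutter.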

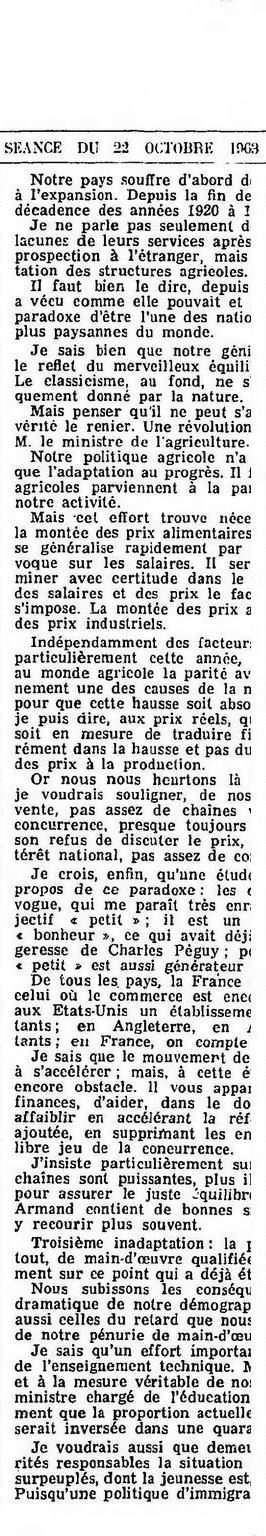

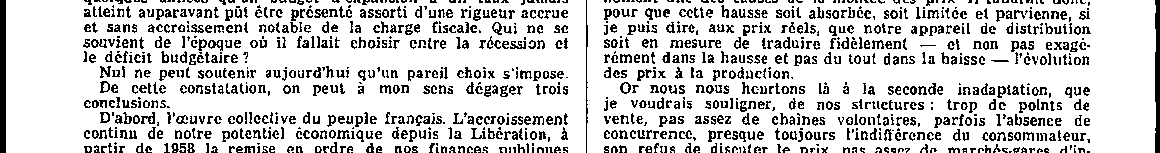

Now, the reduced image looks like this:

Nice. But there's another problem. The central black line produced a "gap" at the center of the row. Not a problem really, because I can fill that gap with a morphological closing:

# Apply Closing:

kernel = np.ones((3, 3), np.uint8)

reducedImage = cv2.morphologyEx(reducedImage, cv2.MORPH_CLOSE, kernel, iterations=2)

This is the result. No more central gap:

Cool. Let's get the horizontal positions (column indices) where the transitions from black to white and vice versa occur, starting at 0:

# Get horizontal transitions:

whiteSpaces = np.where(np.diff(reducedImage, prepend=np.nan))[1]
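To see what this one-liner returns, here is the same expression on a tiny made-up row. Prepending `np.nan` makes the first difference `nan`, which counts as nonzero, so index 0 is always included.

```python
import numpy as np

# Made-up reduced row: black, then a white blob, then black again
row = np.array([[0, 0, 255, 255, 255, 0, 0]], np.uint8)

# Differences between neighbouring pixels; the prepended nan marks index 0
diff = np.diff(row, prepend=np.nan)

# Column indices of every nonzero (or nan) difference
transitions = np.where(diff)[1]
```

Here `transitions` holds 0, the start of the white blob (2), and the first black pixel after it (5).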

I now know where to crop. Let's see:

# Crop the image:

colWidth = len(whiteSpaces)

spaceMargin = 0

for x in range(0, colWidth, 2):

    # Get horizontal cropping coordinates:

    if x != colWidth - 1:

        x2 = whiteSpaces[x + 1]

        spaceMargin = (whiteSpaces[x + 2] - whiteSpaces[x + 1]) // 2

    else:

        x2 = imageWidth

    # Set horizontal cropping coordinates:

    x1 = whiteSpaces[x] - spaceMargin

    x2 = x2 + spaceMargin

    # Clamp and Crop original input:

    x1 = clamp(x1, 0, imageWidth)

    x2 = clamp(x2, 0, imageWidth)

    currentCrop = inputImage[0:imageHeight, x1:x2]

    cv2.imshow("currentCrop", currentCrop)

    cv2.waitKey(0)

You'll note I calculate a margin. This is to crop the margins of the columns. I also use a clamp function to make sure the horizontal cropping points are always within image dimensions. This is the definition of that function:

# Clamps an integer to a valid range:

def clamp(val, minval, maxval):

    if val < minval: return minval

    if val > maxval: return maxval

    return val
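To inspect the crop coordinates without opening any windows, the cropping loop can be restated as a function that returns the `(x1, x2)` pairs. This is a sketch: `column_bounds` is a hypothetical name, the transition lists are made up, and the code assumes the list has odd length (a leading 0 followed by one start/end pair per gutter).

```python
def clamp(val, minval, maxval):
    """Clamp a value to the range [minval, maxval]."""
    return max(minval, min(val, maxval))

def column_bounds(transitions, image_width):
    """Return one (x1, x2) crop range per text column.

    Assumes transitions = [0, gutter1_start, gutter1_end, ...] (odd length).
    """
    bounds = []
    margin = 0
    last = len(transitions) - 1
    for i in range(0, len(transitions), 2):
        if i != last:
            # Column ends where the next gutter starts
            x2 = transitions[i + 1]
            # Half the gutter width is given to each neighbouring column
            margin = (transitions[i + 2] - transitions[i + 1]) // 2
        else:
            # Last column runs to the right edge of the image
            x2 = image_width
        x1 = clamp(transitions[i] - margin, 0, image_width)
        x2 = clamp(x2 + margin, 0, image_width)
        bounds.append((x1, x2))
    return bounds

# Two columns: gutter between x = 40 and x = 60 on a 100 px wide page
two_cols = column_bounds([0, 40, 60], 100)
# Three columns: gutters at 30..40 and 70..80 on a 110 px wide page
three_cols = column_bounds([0, 30, 40, 70, 80], 110)
```

Each gutter is split down the middle, so neighbouring crops meet at the gutter center instead of overlapping.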

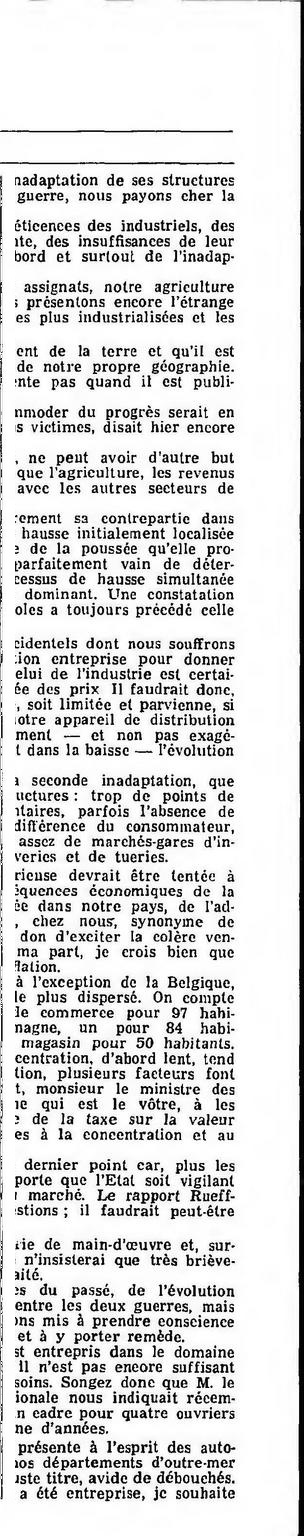

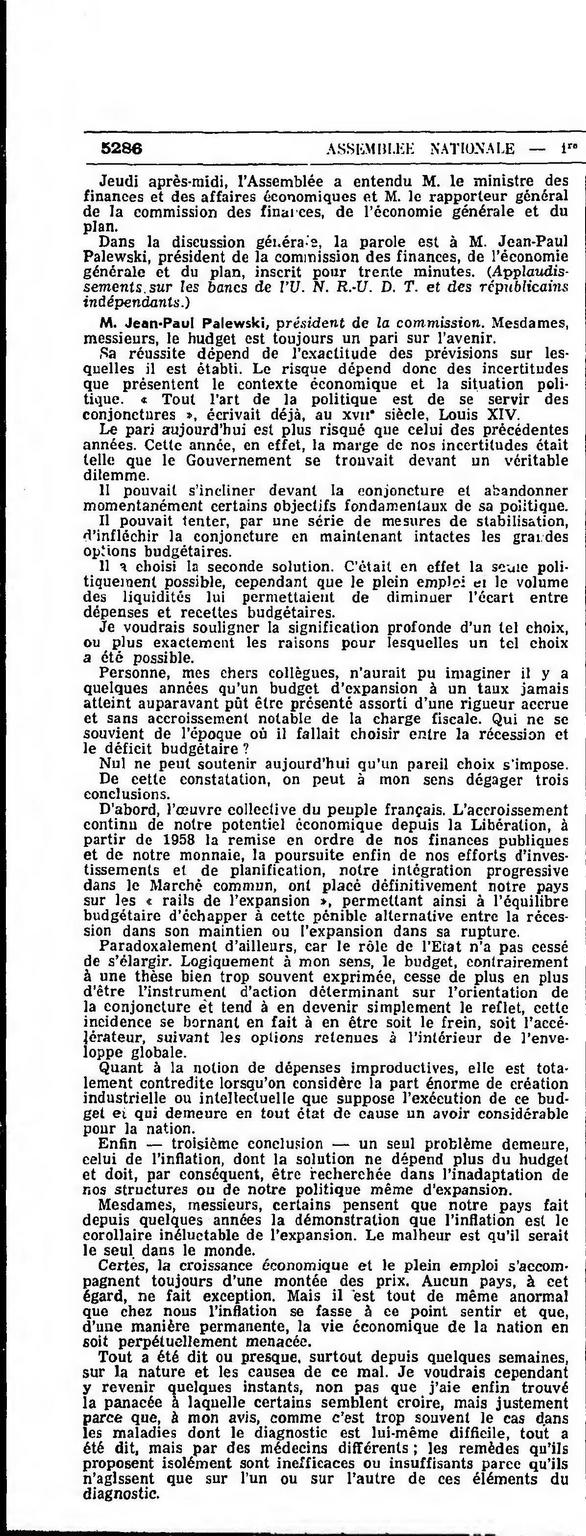

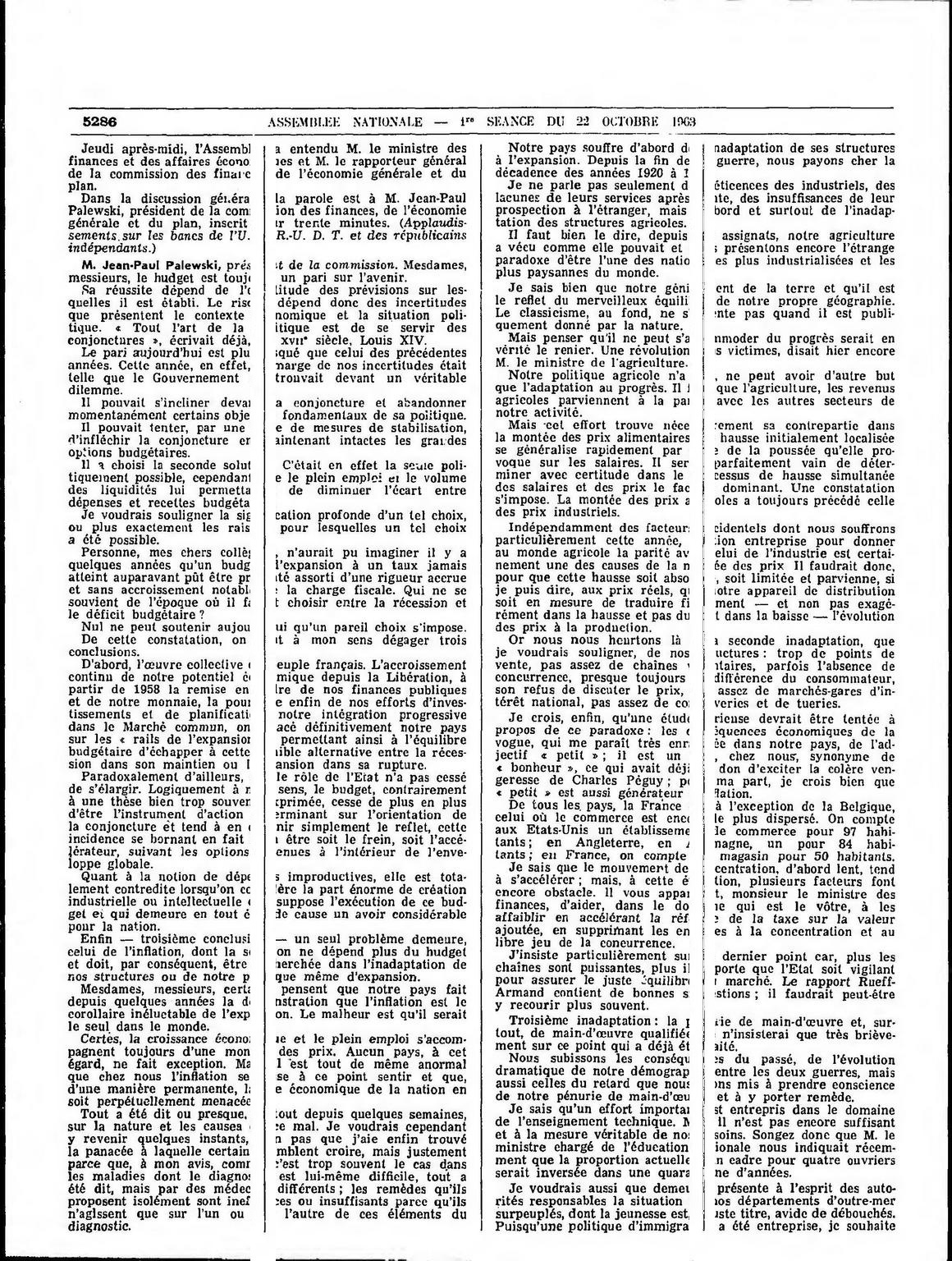

These are the results (resized for the post, open them in a new tab to see the full image):

Let's check out how this scales to more than two columns. This is a modification of the original input, with more columns added manually, just to check out the results:

These are the four images produced: