I'm having trouble web scraping with BeautifulSoup and Selenium. I want to pull data from pages 1-20, but the data is only pulled successfully up to page 10. The last page could also be beyond 20, yet my code only ever gets 10 pages. Does anyone understand what causes this, and how to pull all the data without a page limit?

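import time

from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.webdriver.chrome.service import Service

options = webdriver.ChromeOptions()
options.add_argument('--headless')
options.add_argument('--no-sandbox')
options.add_argument('--disable-dev-shm-usage')

# Selenium 4 takes the driver path through a Service object
driver = webdriver.Chrome(service=Service('chromedriver'), options=options)

apartment_urls = []

try:
    # range() excludes its end value, so range(1, 21) covers pages 1-20
    for page in range(1, 21):
        print(f"Extraction Page# {page}")
        # separate name so the page number is not overwritten by the URL string
        url = "https://www.99.co/id/sewa/apartemen/jakarta?kamar_tidur_min=1&kamar_tidur_maks=4&kamar_mandi_min=1&kamar_mandi_maks=4&tipe_sewa=bulanan&hlmn=" + str(page)
        driver.get(url)
        time.sleep(5)  # crude wait for the listings to render

        soup = BeautifulSoup(driver.page_source, 'html.parser')
        apart_info_list = soup.select('h2.search-card-redesign__address a[href]')
        for link in apart_info_list:
            get_url = '{0}{1}'.format('https://www.99.co', link['href'])
            print(get_url)
            apartment_urls.append(get_url)
except Exception as exc:
    # a bare "except:" hides the real error; print it to see why the loop stopped
    print(f"Good Bye! ({exc!r})")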

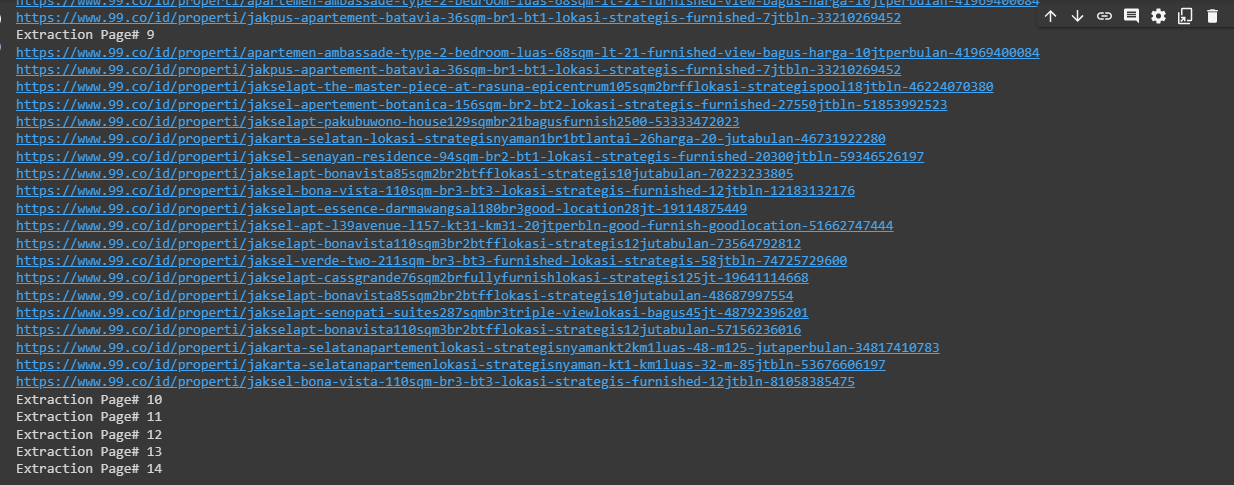

This is the output of the code. From page 10 onward (10, 11, 12, and so on) I can't get any data.
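Instead of a fixed `range`, one way to drop the page limit is to keep requesting pages until one comes back with no listings. The following is a minimal sketch, assuming the `hlmn` query parameter from the question selects the page number and that an empty result list means you have gone past the last page. `build_page_url`, `scrape_until_empty`, and `fetch_links` are hypothetical names; `fetch_links` stands in for the Selenium + BeautifulSoup step that loads a URL and returns the listing links.

```python
from typing import Callable, List
from urllib.parse import urlencode

BASE_URL = "https://www.99.co/id/sewa/apartemen/jakarta"

# Filter parameters copied from the question's URL.
# Assumption: "hlmn" (added per page below) is the page number.
FILTERS = {
    "kamar_tidur_min": 1,
    "kamar_tidur_maks": 4,
    "kamar_mandi_min": 1,
    "kamar_mandi_maks": 4,
    "tipe_sewa": "bulanan",
}


def build_page_url(page: int) -> str:
    """Return the search URL for one page number."""
    query = urlencode({**FILTERS, "hlmn": page})
    return f"{BASE_URL}?{query}"


def scrape_until_empty(
    fetch_links: Callable[[str], List[str]], max_pages: int = 500
) -> List[str]:
    """Request page 1, 2, 3, ... until a page yields no links or max_pages is reached."""
    collected: List[str] = []
    for page in range(1, max_pages + 1):
        links = fetch_links(build_page_url(page))
        if not links:  # assumed signal that we went past the last page
            break
        collected.extend(links)
    return collected
```

In a real scraper, `fetch_links` would call `driver.get(url)`, wait, parse `driver.page_source`, and return the `href`s. The `max_pages` cap is only a safety net in case the site keeps returning results for out-of-range page numbers.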

Answer:

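The version below paginates without a page limit. Instead of building page URLs, it clicks the "next" link after scraping each page and stops when there is no "next" link left.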

import time

from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.chrome.service import Service
from webdriver_manager.chrome import ChromeDriverManager

driver = webdriver.Chrome(service=Service(ChromeDriverManager().install()))
driver.get('https://www.99.co/id/sewa/apartemen/jakarta?kamar_tidur_min=1&kamar_tidur_maks=4&kamar_mandi_min=1&kamar_mandi_maks=4&tipe_sewa=bulanan')
time.sleep(5)
driver.maximize_window()

apartment_urls = []
while True:
    soup = BeautifulSoup(driver.page_source, 'html.parser')
    apart_info_list = soup.select('h2.search-card-redesign__address a')
    for link in apart_info_list:
        get_url = '{0}{1}'.format('https://www.99.co', link['href'])
        print(get_url)
        apartment_urls.append(get_url)

    # find_elements returns an empty list when nothing matches, whereas
    # find_element raises NoSuchElementException, so "else" could never run.
    # Assumes the last page has no "li.next > a" link.
    next_buttons = driver.find_elements(By.CSS_SELECTOR, 'li.next > a')
    if next_buttons:
        next_buttons[0].click()
        time.sleep(3)  # give the next page time to load
    else:
        break

driver.quit()

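This version uses webdriver-manager (`pip install webdriver-manager`) to download a ChromeDriver that matches your Chrome, in case you'd prefer that to managing `chromedriver` yourself.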
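The click-next loop above stops only when the "next" link disappears. To reason about that stop condition apart from the browser, here is a minimal sketch of the same follow-the-next-link pattern with two extra safeguards: a cap on the number of pages and a check that stops if a next-page URL repeats (which would otherwise loop forever). `follow_next_links` and `get_page` are hypothetical names; `get_page` stands in for the Selenium/BeautifulSoup step and is assumed to return the listing URLs on a page plus the next page's URL, or `None` on the last page.

```python
from typing import Callable, List, Optional, Set, Tuple

# (listing URLs on the page, URL of the next page or None)
PageResult = Tuple[List[str], Optional[str]]


def follow_next_links(
    start_url: str,
    get_page: Callable[[str], PageResult],
    max_pages: int = 500,
) -> List[str]:
    """Collect listing URLs page by page until there is no next link."""
    collected: List[str] = []
    seen: Set[str] = set()
    url: Optional[str] = start_url
    # Stop on: no next page, a URL already visited, or the page cap.
    while url is not None and url not in seen and len(seen) < max_pages:
        seen.add(url)
        links, url = get_page(url)
        collected.extend(links)
    return collected
```

With Selenium, `get_page` would load the URL with `driver.get`, parse `driver.page_source`, and report `None` as the next URL when no `li.next > a` link is found.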

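Alternative solution: because the next page's URL is already in the HTML (it isn't generated dynamically), you can read the link's `href` from the parsed page and load that URL directly instead of clicking.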

import time
from urllib.parse import urljoin

from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from webdriver_manager.chrome import ChromeDriverManager

driver = webdriver.Chrome(service=Service(ChromeDriverManager().install()))
driver.get('https://www.99.co/id/sewa/apartemen/jakarta?kamar_tidur_min=1&kamar_tidur_maks=4&kamar_mandi_min=1&kamar_mandi_maks=4&tipe_sewa=bulanan')
time.sleep(5)
driver.maximize_window()

while True:
    soup = BeautifulSoup(driver.page_source, 'html.parser')
    apart_info_list = soup.select('h2.search-card-redesign__address a')
    for link in apart_info_list:
        get_url = '{0}{1}'.format('https://www.99.co', link['href'])
        print(get_url)

    next_page = soup.select_one('li.next > a[href]')
    if next_page:
        # Use the link's href (not the Tag object), resolve it against the
        # current URL, and actually load it; otherwise the loop re-reads page 1.
        driver.get(urljoin(driver.current_url, next_page['href']))
        time.sleep(3)
    else:
        break

driver.quit()
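The key fix in the alternative is reading the link's `href` and resolving it against the current page URL, rather than formatting the whole `<a>` tag into a string. Below is a minimal sketch of that parsing step using only the standard library (`html.parser` and `urllib.parse.urljoin`) in place of BeautifulSoup, so it can be tried on an HTML string without a browser. It assumes the page structure implied by the answer's selectors (`h2.search-card-redesign__address a` and `li.next > a`). `ListingPageParser` and `parse_listing_page` are hypothetical names, and the parser approximates `li.next > a` by taking the first link anywhere inside `li.next`.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple
from urllib.parse import urljoin


class ListingPageParser(HTMLParser):
    """Collect hrefs inside <h2 class="search-card-redesign__address"> and the
    first href inside <li class="next">."""

    def __init__(self) -> None:
        super().__init__()
        self.listing_hrefs: List[str] = []
        self.next_href: Optional[str] = None
        self._in_address_h2 = False
        self._in_next_li = False

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        classes = (attr.get("class") or "").split()
        if tag == "h2" and "search-card-redesign__address" in classes:
            self._in_address_h2 = True
        elif tag == "li" and "next" in classes:
            self._in_next_li = True
        elif tag == "a" and attr.get("href"):
            if self._in_address_h2:
                self.listing_hrefs.append(attr["href"])
            elif self._in_next_li and self.next_href is None:
                self.next_href = attr["href"]

    def handle_endtag(self, tag):
        if tag == "h2":
            self._in_address_h2 = False
        elif tag == "li":
            self._in_next_li = False


def parse_listing_page(html: str, page_url: str) -> Tuple[List[str], Optional[str]]:
    """Return absolute listing URLs and the absolute next-page URL (or None)."""
    parser = ListingPageParser()
    parser.feed(html)
    parser.close()
    listings = [urljoin(page_url, href) for href in parser.listing_hrefs]
    next_url = urljoin(page_url, parser.next_href) if parser.next_href else None
    return listings, next_url
```

On a page with no `li.next` link, `next_url` comes back as `None`, which is the cue to stop paginating.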