I am developing software that has multi-language support. I have to use single-byte character sets, which means I cannot use UTF-8. My encodings are:

- ENG: ASCII

- UKR: KOI8-U

- ARA: ISO8859-6

- SPA: ISO8859-1

I use Notepad as my editor. When I receive the translation for a new language, I simply increase my array size and change the encoding of the C file to the new language's encoding. For example, this is how my array looks when the file is viewed in each encoding:

```c
#define MAX_CHAR_PER_LINE 10

enum Langs {
    en,
    uk,
    es,
    ar,
    MAX_LANG
};

// ASCII
const char settingStr[][MAX_LANG][MAX_CHAR_PER_LINE] = {
    //...
    { "SETTINGS", "îáìáûôõ÷áîîñ", "AJUSTES", "ÇÙÏÇÏÇÊ" },
    //...
};

// KOI8-U
const char settingStr[][MAX_LANG][MAX_CHAR_PER_LINE] = {
    //...
    { "SETTINGS", "НАЛАШТУВАННЯ", "AJUSTES", "гыогогй" },
    //...
};

// ISO8859-6
const char settingStr[][MAX_LANG][MAX_CHAR_PER_LINE] = {
    //...
    { "SETTINGS", "ففََّ", "AJUSTES", "اعدادات" },
    //...
};
```

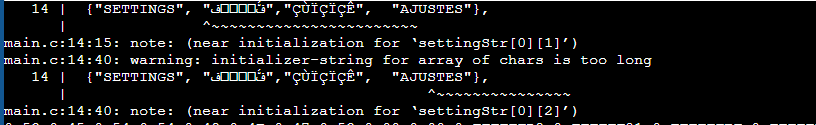

When I check the C file with a hex viewer, I can confirm that the byte values of the characters are correct for the specified encodings. My problem is that I get compiler warnings such as:

I also get logic errors at run time.

Here is sample code for OnlineGDB:

```c
#include <stdio.h>

const char settingStr[][4][10] = {
    //...
    { "SETTINGS", "ففََّ", "ÇÙÏÇÏÇÊ", "AJUSTES" },
    //...
};

int main() {
    for (int i = 0; i < 4; i++) {
        // cast so bytes >= 0x80 are not sign-extended when char is signed
        for (int j = 0; j < 10; j++)
            printf("0x%02X,", (unsigned char)settingStr[0][i][j]);
        printf("\n");
    }
    return 0;
}
```

I suspect that the gcc preprocessor cannot parse these strings. Should I add some kind of compiler flag? I do not want to fill my array with hex values.

Answer:

The compiler's message is misleading: the string "ÇÙÏÇÏÇÊ" is probably encoded in UTF-8 (by your editor or by some other tool during transmission) and uses 14 bytes (plus a null terminator). The compiler points to the error (the 6th character in the string), but your terminal supports UTF-8, so the 14 bytes of "ÇÙÏÇÏÇÊ" appear as only 7 characters, which misaligns the ^~~~~~~ marker on the next line. The other string, "ففََّ", is probably misencoded too, causing further misalignment.

The problem is your editing environment. The translation was given back to you encoded in UTF-8, which is the de facto standard today. More precisely, it may have been encoded twice: first in the single-byte ISO8859-6 encoding for Arabic, then re-encoded in UTF-8 as if it were ISO8859-1, by mistake.

You cannot easily mix different encodings in the same file. It is very confusing for everyone: the translator, the programmer, the compiler, the users...

Here are different options to avoid these issues:

You should seriously reconsider the design choice and use UTF-8. The source code with all translations will be readable in all languages, which is safer and simpler to audit. Depending on the runtime environment, this might simplify or complicate the display.

You could store the strings in a separate file for each translation, each saved in the appropriate encoding, and load them at run time. This is friendlier for translators but requires substantial changes to the software.

You could encode the translated strings in ASCII using octal or hexadecimal escape sequences. This avoids re-encoding problems, as well as any compiler misinterpretation of the historic encodings used in Far-Eastern countries. You can use a small program to convert the strings into C source code.

Reply from the asker:

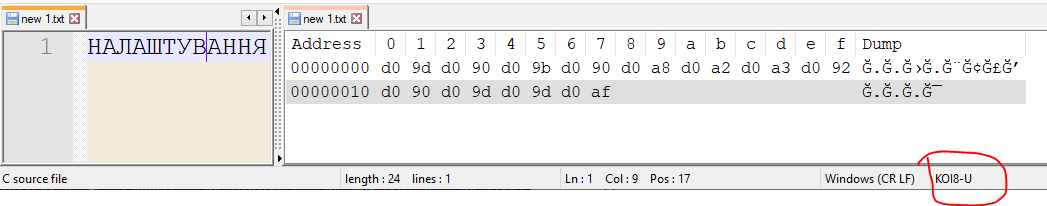

I shouldn't have been so sure about Notepad. Here is a picture of a sample 12-character Ukrainian word: it is stored as 24 bytes in the file, even though the file's encoding is set to KOI8-U.

I would expect to see these hex values, according to this:

xE9 xE1 xF0 xE1 xFA xF4 xF5 xF7 xE1 xE9 xE9 xF1

To clarify, I don't print these strings. I just send the bytes over TCP, and the receiving microprocessor displays them according to the system's current language (on a dot-matrix screen). I cannot use UTF-8 because the microprocessor expects one byte per character; it also knows the current language.