I have the following scenario. On day o1, I have the balance, and day by day it is subtracting the transactions. I need to calculate the balance at the beginning and end of the day. I'm trying to use the lag function.

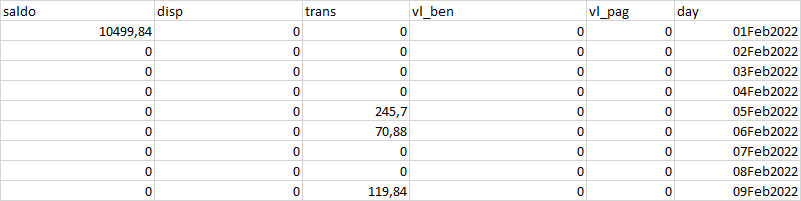

Input:

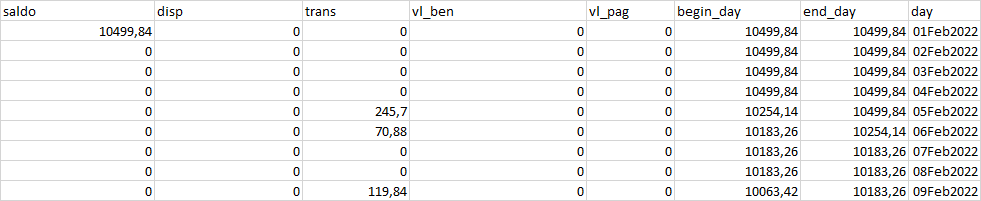

Expected output:

CodePudding user response:

Hopefully, the logic is correct. You're right thinking about the lag window function. But I think it's best to use it after you already gave calculated the end_date.

from pyspark.sql import functions as F, Window as W

df = spark.createDataFrame(

[(10499.84, 0.00, '2022-02-01'),

( 0.00, 0.00, '2022-02-02'),

( 0.00, 0.00, '2022-02-03'),

( 0.00, 0.00, '2022-02-04'),

( 0.00, 245.70, '2022-02-05'),

( 0.00, 70.88, '2022-02-06'),

( 0.00, 0.00, '2022-02-07'),

( 0.00, 0.00, '2022-02-08'),

( 0.00, 119.84, '2022-02-09')],

['saldo', 'trans', 'day']

)

w = W.orderBy('day')

df = df.withColumn('end_day', F.sum(F.col('saldo') - F.col('trans')).over(w))

df = df.withColumn('begin_day', F.coalesce(F.lag('end_day').over(w), F.sum('saldo').over(w)))

df = df.select('saldo', 'trans', 'begin_day', 'end_day', 'day')

df.show()

# -------- ------ --------- -------- ----------

# | saldo| trans|begin_day| end_day| day|

# -------- ------ --------- -------- ----------

# |10499.84| 0.0| 10499.84|10499.84|2022-02-01|

# | 0.0| 0.0| 10499.84|10499.84|2022-02-02|

# | 0.0| 0.0| 10499.84|10499.84|2022-02-03|

# | 0.0| 0.0| 10499.84|10499.84|2022-02-04|

# | 0.0| 245.7| 10499.84|10254.14|2022-02-05|

# | 0.0| 70.88| 10254.14|10183.26|2022-02-06|

# | 0.0| 0.0| 10183.26|10183.26|2022-02-07|

# | 0.0| 0.0| 10183.26|10183.26|2022-02-08|

# | 0.0|119.84| 10183.26|10063.42|2022-02-09|

# -------- ------ --------- -------- ----------

If you restart every month, you should use this window:

w = W.partitionBy(F.year('day'), F.month('day')).orderBy('day')

You may also need to round your end_day.