I wanted to duplicate the list by itself , so found these two approaches for doing this. This below code is running faster than the other statement. can you explain why first one executing so much faster than the second one.

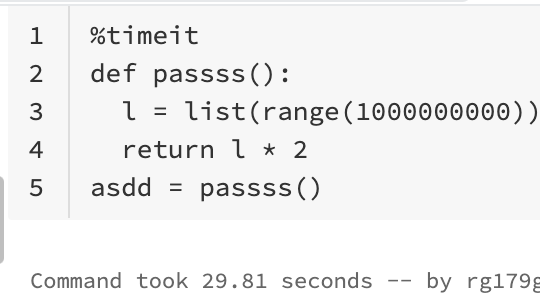

First Approach :

l = [1,2,3,...1000000]

return l * 2

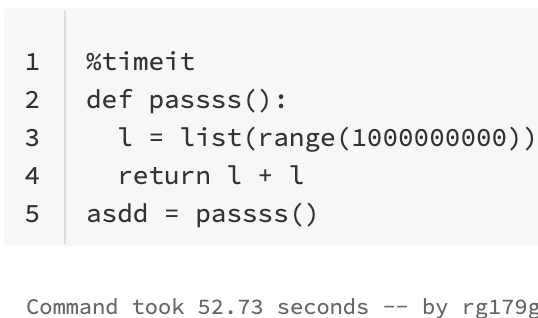

Second Approach :

l = [1,2,3,...1000000]

return l l

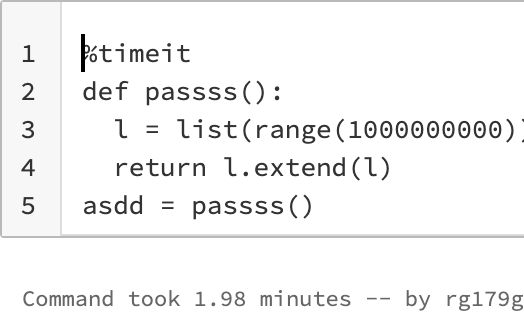

edit : even this below code running slow as well

l = [1,2,3,...1000000]

l.extend(l)

return l

CodePudding user response:

I believe most of the timing differences are due to improper timing. In particular, your last version using extend returns not the extended list but the result of the extend call, which is None. That means the list loses all references and gets deleted. And all its elements as well. And you include that deletion time. You also include the creation time. That's the same for all three, but it's an overhead that dilutes actual differences. I also suspect that you run your tests sequentially so that later ones suffer from having to delete the result of the previous one (and possibly from having less memory to work with).

I did three cycles where I measured the time components separately (with 10^7 elements and Python 3.10.2, see the full code at the end of this answer):

315 ms create

157 ms multiply

158 ms add

80 ms extend

156 ms delete

307 ms create

157 ms multiply

157 ms add

78 ms extend

158 ms delete

316 ms create

168 ms multiply

156 ms add

92 ms extend

190 ms delete

Your start list has a billion elements. They take 32 GB of memory. The list and the doubled list add 24 GB. If you indeed run them sequentially, the previous result is still in memory, so you have (32 16) (32 24) = 104 GB of data in memory. Unless you have that much actual free memory, you likely suffer from page faults. That would reduce the impact of the creation of the int objects and explain why that part is so much more significant in my measurements than in yours.

The *= has also been optimized recently, but only in Python 3.11, which hasn't been released yet, so I doubt you're using it. Also, that could only help explain why *= is faster for you than . Not why extend is so much slower for you than the others, when it should be faster. So I believe your time differences are simply mainly due to memory issues and improperly including deletion times (your snippet having to delete the result of your *= snippet, and your extend snippet having to delete both the result of the snippet and its own result).

Code (Try it online!):

n = 10**7

def create():

global l

l = list(range(n))

def multiply():

return l * 2

def add():

return l l

def extend():

l.extend(l)

return l

def delete():

global l

del l

from timeit import default_timer as time

for _ in range(3):

for f in create, multiply, add, extend, delete:

t = time()

r = f()

t = time() - t

del r

print('= ms ' % (t * 1e3), f.__name__)

print()

CodePudding user response:

To understand the differences between the different possibilities mentioned in the question you could use the dis to look at the corresponding bytecode operations.

Deduction concerning speed from bytecode is not trivial but could give a qualitative idea of what's going on behind the scenes.

from dis import dis

def by_scalar_multiplcation(lst, k):

return lst * k

def by_addition(lst, lst2):

return lst lst2

def by_extension(lst, lst2):

return lst.extend(lst2)

funcs = (by_scalar_multiplcation, by_addition, by_extension)

for f in funcs:

print(f.__name__)

print('='*len(f.__name__))

dis(f)

Output

by_scalar_multiplcation

=======================

9 0 LOAD_FAST 0 (lst)

2 LOAD_FAST 1 (k)

4 BINARY_MULTIPLY

6 RETURN_VALUE

by_addition

===========

12 0 LOAD_FAST 0 (lst)

2 LOAD_FAST 1 (lst2)

4 BINARY_ADD

6 RETURN_VALUE

by_extension

============

15 0 LOAD_FAST 0 (lst)

2 LOAD_METHOD 0 (extend)

4 LOAD_FAST 1 (lst2)

6 CALL_METHOD 1

8 RETURN_VALUE