I have written a script in which both the URLs and the output filenames are hardcoded. Instead, I want to read the URLs from a saved text file and have the downloaded files named automatically, in chronological order, and saved to a specific folder.

My code (works):

    import requests

    # input urls and filenames
    urls = ['https://www.northwestknowledge.net/metdata/data/pr_1979.nc',
            'https://www.northwestknowledge.net/metdata/data/pr_1980.nc',
            'https://www.northwestknowledge.net/metdata/data/pr_1981.nc']

    fns = [r'C:\Users\HBI8\Downloads\pr_1979.nc',
           r'C:\Users\HBI8\Downloads\pr_1980.nc',
           r'C:\Users\HBI8\Downloads\pr_1981.nc']

    # defining the inputs
    inputs = zip(urls, fns)

    # define download function
    def download_url(args):
        url, fn = args[0], args[1]
        try:
            r = requests.get(url)
            with open(fn, 'wb') as f:
                f.write(r.content)
        except Exception as e:
            print('Failed:', e)

    # loop through all inputs and run download function
    for i in inputs:
        result = download_url(i)

My attempt to read the links from a text file (this version fails):

    import requests

    # getting all URLs from the text file
    file = open('C:\\Users\\HBI8\\Downloads\\testing.txt', 'r')
    #for each_url in enumerate(f):
    list_of_urls = [(line.strip()).split() for line in file]
    file.close()

    # input urls and filenames
    urls = list_of_urls

    fns = [r'C:\Users\HBI8\Downloads\pr_1979.nc',
           r'C:\Users\HBI8\Downloads\pr_1980.nc',
           r'C:\Users\HBI8\Downloads\pr_1981.nc']

    # defining the inputs
    inputs = zip(urls, fns)

    # define download function
    def download_url(args):
        url, fn = args[0], args[1]
        try:
            r = requests.get(url)
            with open(fn, 'wb') as f:
                f.write(r.content)
        except Exception as e:
            print('Failed:', e)

    # loop through all inputs and run download function
    for i in inputs:
        result = download_url(i)

testing.txt contains those 3 links, one per line.

Error:

    Failed: No connection adapters were found for "['https://www.northwestknowledge.net/metdata/data/pr_1979.nc']"
    Failed: No connection adapters were found for "['https://www.northwestknowledge.net/metdata/data/pr_1980.nc']"
    Failed: No connection adapters were found for "['https://www.northwestknowledge.net/metdata/data/pr_1981.nc']"

PS: I am new to Python, and it would be helpful if someone could advise me on how to loop through the links in a text file and save the downloaded files individually, in chronological order, instead of hardcoding the names (as I have done).

CodePudding user response:

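When you do list_of_urls = [(line.strip()).split() for line in file], you produce a list of lists: for each line of the file, split() returns a list of the whitespace-separated items on that line (here, a single URL), and the comprehension collects those lists into an outer list. requests.get() then receives a one-element list instead of a string, which is why the error message shows the URL wrapped in brackets.

What you want is a flat list of URLs.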
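To see the difference concretely, here is a small sketch. It uses an in-memory io.StringIO object as a stand-in for the opened testing.txt (an assumption made so the snippet runs without the file); the names nested and flat are only illustrative.

```python
import io

# Stand-in for open('testing.txt'): three URLs, one per line.
file = io.StringIO(
    "https://www.northwestknowledge.net/metdata/data/pr_1979.nc\n"
    "https://www.northwestknowledge.net/metdata/data/pr_1980.nc\n"
    "https://www.northwestknowledge.net/metdata/data/pr_1981.nc\n"
)

# The comprehension from the question: split() wraps each URL in its own list.
nested = [line.strip().split() for line in file]

file.seek(0)  # rewind so the same lines can be read a second time

# Without split(), each element is the URL string itself.
flat = [line.strip() for line in file]

print(type(nested[0]))  # <class 'list'>
print(type(flat[0]))    # <class 'str'>
```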

You could do:

    list_of_urls = [url for line in file for url in (line.strip()).split()]

Or:

    list_of_urls = []
    for line in file:
        list_of_urls.extend((line.strip()).split())
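For the second part of the question (not hardcoding the file names), one possible approach is to derive each name from the last component of its URL and sort on that name. This is only a sketch under some assumptions: the helper names read_urls, filename_from_url and build_inputs are hypothetical, the text file holds one URL per line, and the names follow the pr_YYYY.nc pattern so that alphabetical order is also chronological order.

```python
import os
import posixpath
from urllib.parse import urlsplit


def read_urls(path):
    """Return a flat list of URLs, one per non-empty line of the text file."""
    with open(path, 'r') as f:
        return [line.strip() for line in f if line.strip()]


def filename_from_url(url):
    """Return the last path component of the URL, e.g. 'pr_1979.nc'."""
    return posixpath.basename(urlsplit(url).path)


def build_inputs(url_file, out_dir):
    """Pair every URL with a target path inside out_dir, sorted by file name."""
    urls = sorted(read_urls(url_file), key=filename_from_url)
    return [(url, os.path.join(out_dir, filename_from_url(url))) for url in urls]


# Usage with the paths from the question (download_url is the function
# already defined there and takes one (url, filename) pair):
#
# inputs = build_inputs(r'C:\Users\HBI8\Downloads\testing.txt',
#                       r'C:\Users\HBI8\Downloads')
# for pair in inputs:
#     download_url(pair)
```

The pairs have the same shape as the zip(urls, fns) result in the question, so the existing download function should not need to change. If the file names did not sort chronologically as plain strings, the sort key would have to extract the year explicitly.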

CodePudding user response:

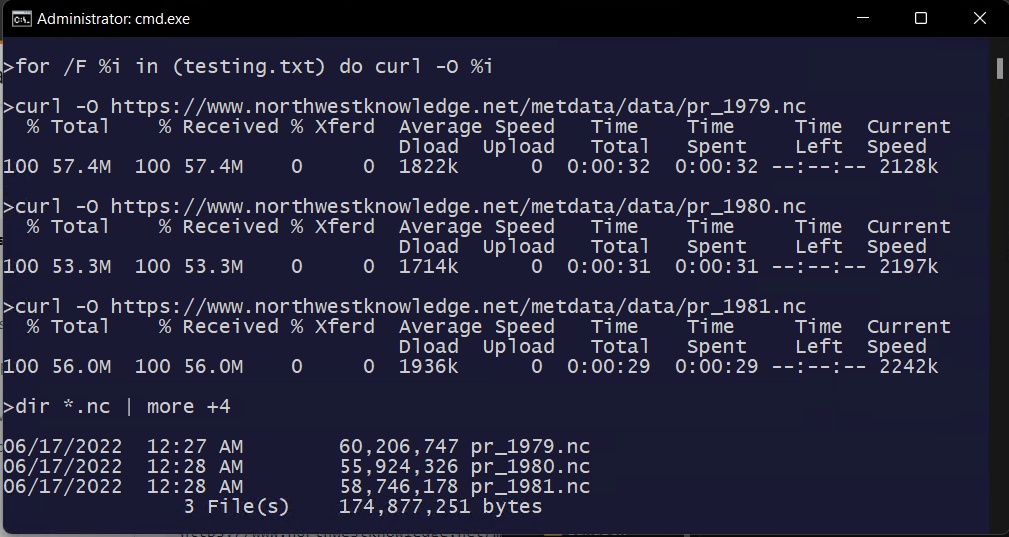

By far the simplest method in this simple case is to use an OS command.

Go to the working directory C:\Users\HBI8\Downloads.

Open cmd there (you can simply type cmd in the address bar).

Write or paste your list using notepad testing.txt (if you don't already have the file there).