I expected UTC to always be 6 hours ahead of CST. Apparently Spark disagrees.

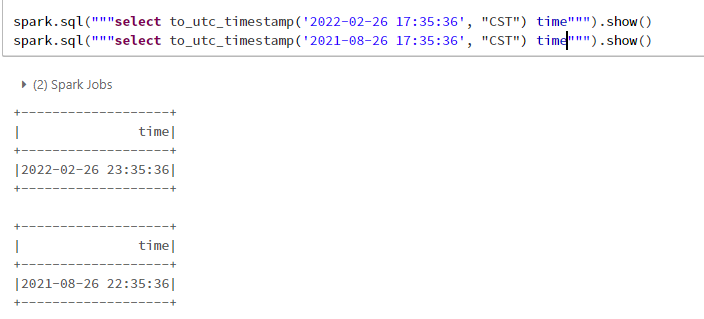

spark.sql("""select to_utc_timestamp('2022-02-26 17:35:36', "CST") """).show()

+-------------------+
|               time|
+-------------------+
|2022-02-26 23:35:36|
+-------------------+

spark.sql("""select to_utc_timestamp('2021-08-26 17:35:36', "CST") """).show()

+-------------------+
|               time|
+-------------------+
|2021-08-26 22:35:36|
+-------------------+

Is there documentation that explains exactly how the timezone offset is calculated? I could only find the

CodePudding user response:

Generally speaking, the best way to understand exactly how Spark SQL functions work is to dig through the source code, starting from org.apache.spark.sql.functions and following the breadcrumbs until you wind up here, which will show you that Spark uses the standard java.time.* classes under the hood.

This isn't going to help in your case, however, because the problem is your assumption. Java's TimeZone for CST respects daylight saving time, so the offset depends on the date-time you give it: 6 hours behind UTC in winter and 5 hours behind UTC in summer, which is exactly the difference between your two results. You can provide a fixed offset yourself, e.g.

spark.sql("""select to_utc_timestamp('2022-02-26 17:35:36', "-0600") """).show()

but I'm unaware of any way to automatically ignore daylight saving time in this context.
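To see the same behaviour outside Spark, here is a minimal sketch in plain Python using the standard zoneinfo module. It assumes that the short ID "CST" resolves to the America/Chicago region (which is how Java's ZoneId.SHORT_IDS maps it) and that the system has timezone data available; the helper name to_utc is made up for illustration and is not a Spark function.

```python
from datetime import datetime, timedelta, timezone
from zoneinfo import ZoneInfo  # Python 3.9+, needs system tz data or the tzdata package

# Assumption: "CST" is treated as the America/Chicago region, which observes DST.
CHICAGO = ZoneInfo("America/Chicago")

# A fixed offset of -06:00 that never shifts for daylight saving time.
FIXED_MINUS_6 = timezone(timedelta(hours=-6))

FMT = "%Y-%m-%d %H:%M:%S"


def to_utc(local_str, tz):
    """Read local_str as wall-clock time in tz and return the UTC time as a string."""
    local = datetime.strptime(local_str, FMT).replace(tzinfo=tz)
    return local.astimezone(timezone.utc).strftime(FMT)


# Region-based zone: the offset depends on the date.
winter = to_utc("2022-02-26 17:35:36", CHICAGO)
summer = to_utc("2021-08-26 17:35:36", CHICAGO)

# Fixed offset: always 6 hours, regardless of the date.
summer_fixed = to_utc("2021-08-26 17:35:36", FIXED_MINUS_6)

print(winter)        # 2022-02-26 23:35:36
print(summer)        # 2021-08-26 22:35:36
print(summer_fixed)  # 2021-08-26 23:35:36
```

The first two results match the Spark output in the question, and the third shows what the fixed offset gives for the summer timestamp.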