I'm new to Swift, so the following code may well be naive. I'm trying to create a Swift native module that receives the path of an existing video file from React Native, splits the video into frames, and runs each frame through a Core ML model. I've added the model to the project, and Xcode generated the Objective-C model class, as you'd expect.

However, when I added the code that calls the model, the Swift compiler complains: "Cannot find 'MyModel' in scope"

I've used the same model in another project and it works fine there. Is there something about the structure of this code that prevents the class from being accessed? I'd appreciate some expert guidance.

Thanks in advance for your help.

```swift
import Foundation
import AVFoundation
import CoreML
import Vision

// RCTResponseSenderBlock is not a Swift import: it comes from React Native's
// bridging header (#import <React/RCTBridgeModule.h>).

@available(iOS 13.0, *)
@objc(VideoProcessor)
public class VideoProcessor: NSObject {

    private var generator: AVAssetImageGenerator!
    var videoUrl: URL?
    var labels = [FrameLabel]()
    let encoder = JSONEncoder()

    // MyModel is the class Xcode generates from the .mlmodel file.
    // This is the line that fails with "Cannot find 'MyModel' in scope".
    let model = try! VNCoreMLModel(for: MyModel(configuration: MLModelConfiguration()).model)

    struct FrameLabel: Codable {
        var label: String
        var confidence: Float
        var boundingBox: Bbox
    }

    struct Bbox: Codable {
        var x: CGFloat
        var y: CGFloat
        var width: CGFloat
        var height: CGFloat
    }

    @objc
    static func requiresMainQueueSetup() -> Bool {
        return true // true to set up on the main thread, false to allow a background thread. Not sure which I need.
    }

    @objc // Can this be merged into processVideo? I need to figure out how to pass another argument along with the callback.
    func setVideoPath(_ path: String) {
        videoUrl = URL(fileURLWithPath: path)
    }

    @objc
    func processVideo(_ callback: RCTResponseSenderBlock) {
        let asset = AVAsset(url: videoUrl!)
        let duration: Float64 = CMTimeGetSeconds(asset.duration)
        generator = AVAssetImageGenerator(asset: asset)
        // generator.appliesPreferredTrackTransform = true // false is the default; unclear whether this is necessary

        // Grab one frame per second of video.
        for index in 0 ..< Int(duration) {
            processFrame(fromTime: Float64(index))
        }
        generator = nil

        let data = try! encoder.encode(labels)
        let jsonLabels = String(data: data, encoding: .utf8)
        callback([jsonLabels as Any]) // the callback only accepts an array
    }

    private func processFrame(fromTime: Float64) {
        let time = CMTimeMakeWithSeconds(fromTime, preferredTimescale: 600) // What is this 600 about?
        do {
            let frame = try generator.copyCGImage(at: time, actualTime: nil)
            runModel(frame: frame)
        } catch {
            return // skip frames that cannot be extracted
        }
    }

    private func runModel(frame: CGImage) {
        let handler = VNImageRequestHandler(cgImage: frame)
        let request = VNCoreMLRequest(model: model) { request, _ in
            if let results = request.results as? [VNRecognizedObjectObservation] {
                self.processResults(results: results)
            }
        }
        // A hint to reduce the request's resource use; it does not make processing faster.
        request.preferBackgroundProcessing = true
        request.imageCropAndScaleOption = .centerCrop // need to decide what to do here
        do {
            try handler.perform([request])
        } catch {
            print("failed to perform")
        }
    }

    private func processResults(results: [VNRecognizedObjectObservation]) {
        for result in results {
            // Skip observations with no labels instead of crashing on labels[0].
            guard let topLabel = result.labels.first else { continue }
            let box = result.boundingBox
            let boundingBox = Bbox(x: box.minX, y: box.minY, width: box.width, height: box.height)
            let frameLabel = FrameLabel(label: topLabel.identifier, confidence: result.confidence, boundingBox: boundingBox)
            labels.append(frameLabel)
        }
    }
}
```
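On the "What is this 600 about?" question in `processFrame`: `CMTime` stores a time as an integer `value` over a `timescale`, so a timescale of 600 means the time is counted in 1/600 s ticks. 600 is commonly chosen because it divides evenly by 24, 25, 30 and 60, so frame boundaries at those rates land on whole ticks. A small standalone sketch to illustrate (it only uses CoreMedia and is not part of the module above):

```swift
import CoreMedia

// With a timescale of 600, CMTime counts in units of 1/600 of a second.
let oneAndAHalfSeconds = CMTimeMakeWithSeconds(1.5, preferredTimescale: 600)
print(oneAndAHalfSeconds.value, oneAndAHalfSeconds.timescale) // 900 600

// 600 divides evenly by common frame rates, so one frame's duration
// is always a whole number of ticks.
for fps in [24, 25, 30, 60] {
    let ticksPerFrame = 600 / fps
    let frameDuration = CMTime(value: CMTimeValue(ticksPerFrame), timescale: 600)
    print("\(fps) fps: \(ticksPerFrame) ticks = \(CMTimeGetSeconds(frameDuration)) s per frame")
}
```

For the one-frame-per-second loop in `processVideo`, any reasonable timescale works, since whole seconds are exact at any timescale.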

Answer:

Sigh. I spent I don't know how many hours fighting this, and it turned out to be a build configuration issue.

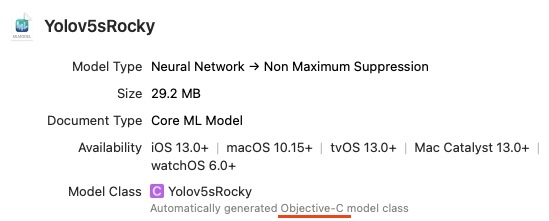

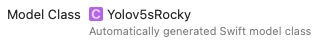

If you find yourself in this situation, first check which kind of class Xcode has generated for your Core ML model. In my case it had generated an Objective-C class, which the Swift code in the question could not use.

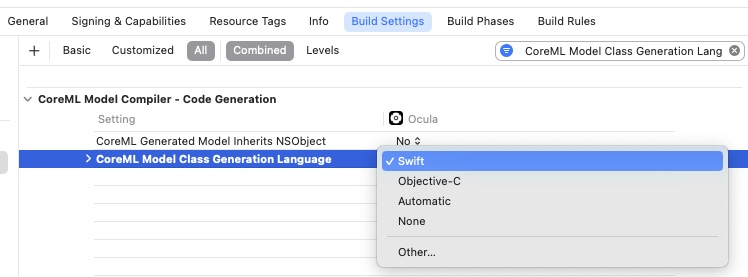

You can change this behaviour in the app target's Build Settings.

Search for "CoreML Model Class Generation Language" and choose "Swift" in the dropdown.

In my case it was initially set to "Automatic", which resulted in an Objective-C class being generated. I have no idea how Xcode decides which language to use, but once I set it to "Swift" the project compiled fine.
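A possible alternative, not what I did above: skip the generated class entirely and load the compiled model from the app bundle, which should work whatever language Xcode picked for code generation. This is a sketch that assumes Xcode compiled `MyModel.mlmodel` into `MyModel.mlmodelc` inside the bundle; `loadVisionModel(named:in:)` is a hypothetical helper name, not an Apple API.

```swift
import CoreML
import Foundation
import Vision

/// Hypothetical helper: loads a compiled Core ML model (.mlmodelc) by name and
/// wraps it for Vision, without touching the Xcode-generated MyModel class.
@available(iOS 13.0, macOS 10.15, *)
func loadVisionModel(named name: String, in bundle: Bundle = .main) -> VNCoreMLModel? {
    // Assumption: Xcode compiled <name>.mlmodel into <name>.mlmodelc in the bundle.
    guard let url = bundle.url(forResource: name, withExtension: "mlmodelc") else {
        return nil
    }
    do {
        let mlModel = try MLModel(contentsOf: url, configuration: MLModelConfiguration())
        return try VNCoreMLModel(for: mlModel)
    } catch {
        print("Could not load \(name): \(error)")
        return nil
    }
}
```

In `VideoProcessor` this would replace the `try! VNCoreMLModel(for: MyModel(...).model)` line with something like `let model = loadVisionModel(named: "MyModel")!`, at the cost of losing the typed inputs and outputs that the generated class provides.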