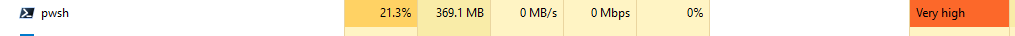

I have a PowerShell script that reads a 4,000 KB text file (approximately 88,500 lines). This is the first time my code has had to do this much work. The script below took over 2 minutes to run and consumed around 20% CPU (see the Task Manager screenshot).

Can I improve performance using different code choices?

# extractUniqueBaseNames.ps1 --- copy first UPPERCASE word in each line of text, remove duplicates & store

$listing = 'C:\roll-conversion dump\LINZ Place Street Index\StreetIndexOutput.txt'

[array]$tempStorage = $null

[array]$Storage = $null

# select only CAPITALISED first string (at least two chars or longer) from listings

Select-String -Pattern '(\b[A-Z]{2,}\b[$\s])' -Path $listing -CaseSensitive |

ForEach-Object {$newStringValue = $_.Matches.Value -replace '$\s', '\n'

# append each match to the working array (plain assignment would keep only the last match)

$tempStorage += $newStringValue

}

$Storage = $tempStorage | Select-Object -Unique

I have also added the following line to output results to a new text file (this was not included for the previous Task Manager reading):

$Storage | Out-File -Append atest.txt

Since I am at an early stage of my development, I would appreciate any suggestions that would improve the performance of this kind of PowerShell script.

CodePudding user response:

If I understand your code correctly, this should do the same thing, but faster and more efficiently.


using namespace System.IO

using namespace System.Collections.Generic

try {

$re = [regex] '(\b[A-Z]{2,}\b[$\s])'

$reader = [StreamReader] 'some\path\to\inputfile.txt'

$stream = [File]::Open('some\path\to\outputfile.txt', [FileMode]::Append, [FileAccess]::Write)

$writer = [StreamWriter]::new($stream)

$storage = [HashSet[string]]::new()

while(-not $reader.EndOfStream) {

# Match() always returns a Match object, so test its Success property

$match = $re.Match($reader.ReadLine())

if($match.Success) {

$line = $match.Value -replace '$\s', '\n'

# if the line hasn't been found before

if($storage.Add($line)) {

$writer.WriteLine($line)

}

}

}

}

finally {

($reader, $writer, $stream).ForEach('Dispose')

}
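
To see the de-duplication step in isolation, here is a minimal sketch that runs on a few in-memory lines instead of a file. The sample lines and the variable names `$lines`, `$seen` and `$ordered` are made up for illustration, and the pattern is simplified to just the uppercase word. It relies on two behaviours: `Regex.Match()` always returns an object, so `.Success` is what tells you whether anything matched, and `HashSet.Add()` returns `$false` when the value is already in the set.

```powershell
# Hypothetical sample lines standing in for the real input file
$lines = @(
    'QUEEN Street, Auckland'
    'KING Road, Wellington'
    'QUEEN Street, Dunedin'
    'no capitalised word here'
)

# [regex] is case-sensitive by default, like Select-String -CaseSensitive
$re      = [regex] '\b[A-Z]{2,}\b'
$seen    = [System.Collections.Generic.HashSet[string]]::new()
$ordered = [System.Collections.Generic.List[string]]::new()

foreach ($line in $lines) {
    $match = $re.Match($line)

    # Only keep the value when the line matched AND it has not been seen before
    if ($match.Success -and $seen.Add($match.Value)) {
        $ordered.Add($match.Value)
    }
}

$ordered   # QUEEN, KING
```

Because the set lookup is done once per line, there is no need to collect every match first and then pipe the whole collection through `Select-Object -Unique` at the end.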