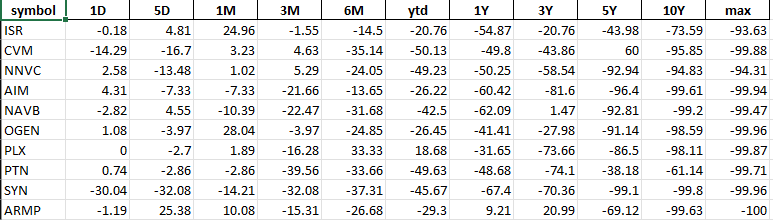

I have an excel file with 5 columns and 4500 rows of data. The image is a sample of 5 rows from the file that will be iterated through in this code.

' allstockdata.xlsx

import pandas as pd

import json

import requests

file = pd.read_excel('allstockdata.xlsx')

data = []

tickers = list(file["Symbol"])

for i in tickers:

i = str(i)

# adds symbol between 2 halves of an incomplete url to form a valid url to request data from

alldata = json.loads(requests.get(urlhalf1 i urlhalf2).text)

# nests dataset in list

data.append(alldata)

df = pd.DataFrame(data, columns = ['data'])

df = pd.concat([df, df.pop('data').apply(pd.Series)], axis = 1)

df.to_excel('allstockdata.xlsx')

Error: columns = _validate_or_indexify_columns(contents, columns)

Error: f"{len(columns)} columns passed, passed data had " AssertionError: 1 columns passed, passed data had 5 columns

Question: How do I fix these errors in my code? How can I skip iterations that form invalid urls(no available data on specific symbol)? Considering the size of the excel file (5 columns, 4500 rows), should I iterate in increments?

Please ask for clarification if necessary

CodePudding user response:

You need to append allData to the data list. For testing purpose you can try my code for first 10 rows, I have edited accordingly.

import pandas as pd

import json

import requests

file = pd.read_excel('allstockdata.xlsx')

file = file.iloc[:10] # For testing purpose try with first 10 rows

data = []

tickers = list(file["Symbol"])

for i in tickers:

response = requests.get('https://financialmodelingprep.com/api/v3/stock-price-change/' str(i) '?apikey=YOURAPIKEY')

alldata = json.loads(response.text)

data.append(alldata)

df = pd.DataFrame(data, columns = ['data'])

df = pd.concat([df, df.pop('data').apply(pd.Series)], axis = 1)

df.to_excel('allstockdata.xlsx')

This gives us the expected output