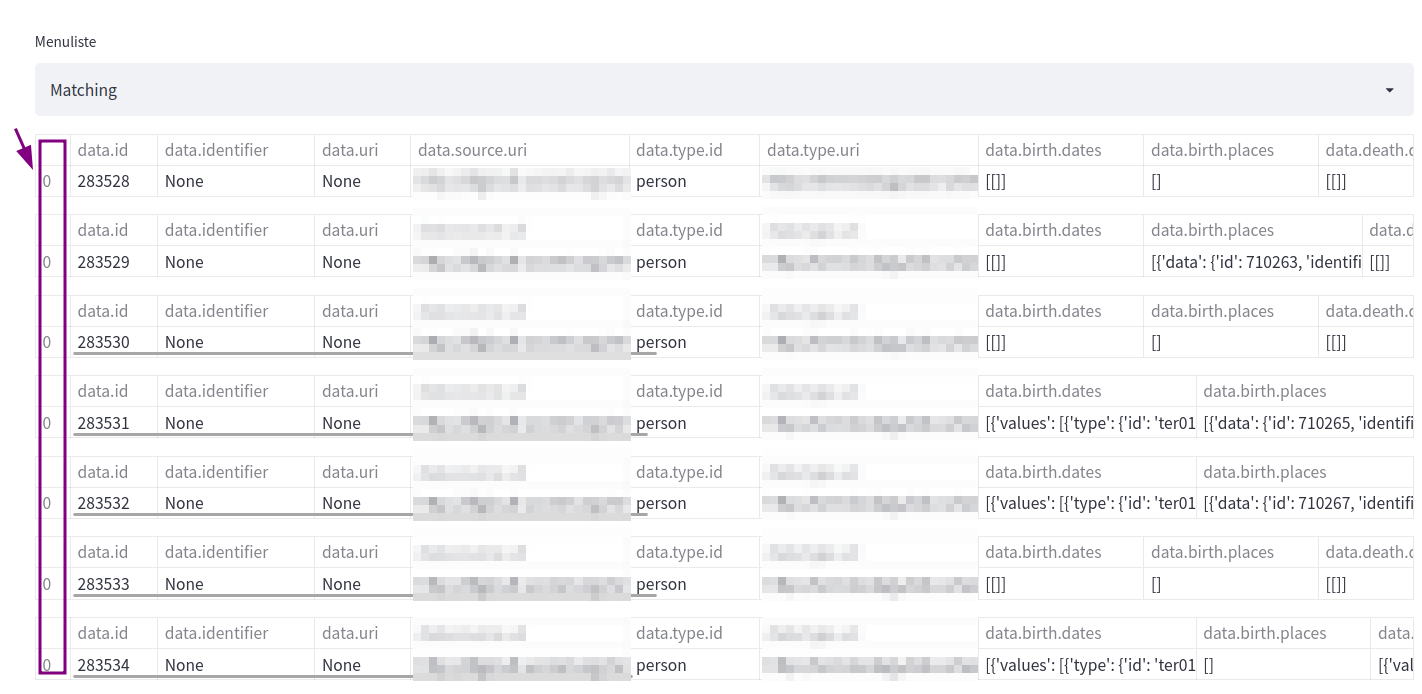

I have normalized JSON data from a JSON API that returns its results in pages. I can import the data and display it as pandas DataFrames with the following for loop, but it produces a separate DataFrame for each page, each with its own index starting at 0. What I want is to merge all the DataFrames under the same column headers, with a single new index beginning at 0.

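```python
import pandas as pd
import streamlit as st

# `url` is a list of page URLs and `session` an open requests.Session
for links in url:
    url_data = session.get(links).json()
    df_nested_list = pd.json_normalize(url_data)
    st.write(df_nested_list)  # renders one separate table per page
```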
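To see why each table starts again at index 0, here is a minimal offline sketch. It assumes two hypothetical pages, `page_1` and `page_2`, standing in for the parsed JSON that `session.get(links).json()` would return; no HTTP or Streamlit calls are made.

```python
import pandas as pd

# Hypothetical stand-ins for two pages of an API response (already parsed JSON).
page_1 = [{"id": 1, "name": "a"}, {"id": 2, "name": "b"}]
page_2 = [{"id": 3, "name": "c"}, {"id": 4, "name": "d"}]

# Normalizing each page on its own gives an independent DataFrame per page.
per_page = [pd.json_normalize(page) for page in [page_1, page_2]]

for frame in per_page:
    # Every frame gets its own fresh RangeIndex, so each one starts at 0.
    print(frame.index.tolist())
```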

User response:

Make a list of DataFrames and concatenate them once at the end:

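```python
import pandas as pd

dfs = []
for links in url:
    url_data = session.get(links).json()
    df = pd.json_normalize(url_data)
    dfs.append(df)

# Concatenate once, after the loop; ignore_index=True builds a fresh 0-based index.
df = pd.concat(dfs, ignore_index=True)
```

Or, as a one-liner:

```python
df = pd.concat([pd.json_normalize(session.get(l).json()) for l in url], ignore_index=True)
```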
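A minimal offline sketch of the same pattern, using hypothetical in-memory pages in place of the API responses, shows that `ignore_index=True` yields one frame with a single 0-based index:

```python
import pandas as pd

# Hypothetical parsed JSON for three pages; the last page is shorter.
pages = [
    [{"id": 1, "name": "a"}, {"id": 2, "name": "b"}],
    [{"id": 3, "name": "c"}, {"id": 4, "name": "d"}],
    [{"id": 5, "name": "e"}],
]

# Collect one DataFrame per page inside the loop...
dfs = []
for page in pages:
    dfs.append(pd.json_normalize(page))

# ...then concatenate once; ignore_index=True discards the per-page indexes.
df = pd.concat(dfs, ignore_index=True)
print(df)
```

Calling `pd.concat` once after the loop is also cheaper than concatenating inside it, which would copy the growing frame on every iteration.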

User response:

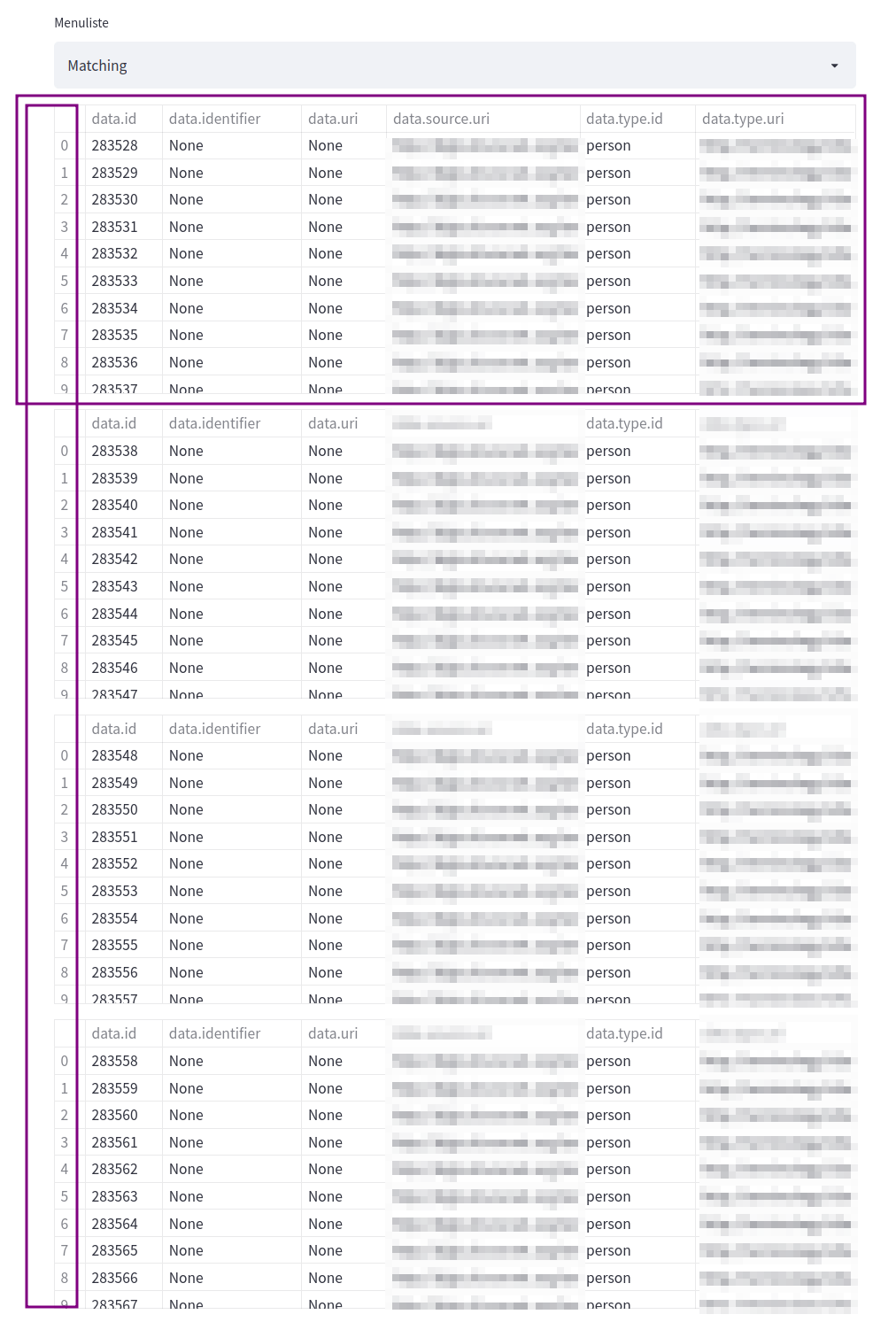

Thanks for the response, @BeRTme. It is almost the correct answer. I tested it, and it works to a certain extent, but it does not merge all the DataFrames coming from the paginated JSON; instead it creates blocks of DataFrames.
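Two common causes of "blocks" are worth ruling out; both are assumptions, since the actual API response is not shown. First, `pd.concat` or `st.write` may still sit inside the loop, so each iteration displays a growing partial result. Second, each page may wrap its records under a key, so `pd.json_normalize` turns every page into a single row holding a list. The sketch below assumes a hypothetical `"results"` key and a `"page"` field; the real API's key names may differ.

```python
import pandas as pd

# Hypothetical page shape: records nested under a "results" key,
# next to pagination metadata. The real API's key names may differ.
pages = [
    {"page": 1, "results": [{"id": 1, "name": "a"}, {"id": 2, "name": "b"}]},
    {"page": 2, "results": [{"id": 3, "name": "c"}]},
]

# Without record_path, each page becomes ONE row with a list-valued column.
naive = pd.concat([pd.json_normalize(p) for p in pages], ignore_index=True)
print(naive.shape)  # (2, 2): one row per page, not per record

# record_path flattens the nested list; meta copies the page number onto each row.
flat = pd.concat(
    [pd.json_normalize(p, record_path="results", meta=["page"]) for p in pages],
    ignore_index=True,
)

# Display the combined frame once; in the Streamlit app this would be a
# single st.write(flat) placed after the loop, not inside it.
print(flat)
```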