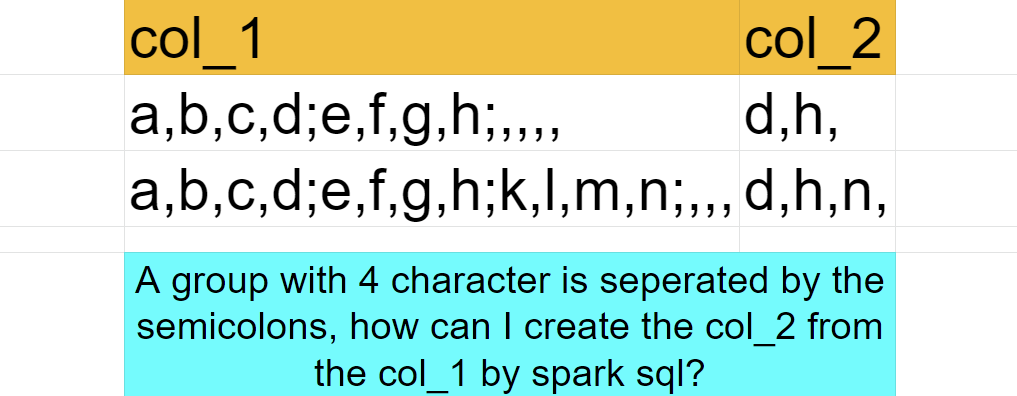

I want to extract certain values from many arrays and then put them into another array.

Answer:

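If it's a homework task, good luck explaining this to your professor ;) The idea: `regexp_extract_all` pulls out the single word character immediately before each `;`, and `aggregate` concatenates those characters, using `rpad(x, 2, ',')` to append a comma to each one.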

```python
from pyspark.sql import SparkSession
from pyspark.sql import functions as F

spark = SparkSession.builder.getOrCreate()

df = spark.createDataFrame(
    [("a,b,c,d;e,f,g,h;,,,,",),
     ("a,b,c,d;e,f,g,h;k,l,m,n;,,,,",)],
    ["col_1"])

# regexp_extract_all is available as a Spark SQL function from Spark 3.1
df = df.withColumn("col_2", F.expr(
    """
    aggregate(
        regexp_extract_all(col_1, '(\\\\w);', 1),
        '',
        (acc, x) -> concat(acc, rpad(x, 2, ','))
    )
    """
))

df.show(truncate=0)

# +----------------------------+------+
# |col_1                       |col_2 |
# +----------------------------+------+
# |a,b,c,d;e,f,g,h;,,,,        |d,h,  |
# |a,b,c,d;e,f,g,h;k,l,m,n;,,,,|d,h,n,|
# +----------------------------+------+
```
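For anyone without a Spark session handy, here is a rough plain-Python approximation of what that expression computes per row (an illustration only, not Spark code; the helper name `extract_before_semicolons` is made up). `re.findall` stands in for `regexp_extract_all`, and `functools.reduce` with `str.ljust` stands in for `aggregate` with `rpad`. It also makes two limitations visible: the regex captures exactly one character, and a value is only picked up if a `;` follows it.

```python
import re
from functools import reduce


def extract_before_semicolons(col_1: str) -> str:
    """Plain-Python approximation of the Spark SQL expression (illustration only)."""
    # Like regexp_extract_all(col_1, '(\w);', 1): the single word character before each ';'
    matches = re.findall(r"(\w);", col_1)
    # Like aggregate(matches, '', (acc, x) -> concat(acc, rpad(x, 2, ','))):
    # pad each one-character match to length 2 with ',' and append it to the accumulator
    return reduce(lambda acc, x: acc + x.ljust(2, ","), matches, "")


for row in ["a,b,c,d;e,f,g,h;,,,,", "a,b,c,d;e,f,g,h;k,l,m,n;,,,,"]:
    print(repr(row), "->", repr(extract_before_semicolons(row)))
# 'a,b,c,d;e,f,g,h;,,,,' -> 'd,h,'
# 'a,b,c,d;e,f,g,h;k,l,m,n;,,,,' -> 'd,h,n,'
```

Because every match gets padded, the result always ends with a trailing comma.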

Answer:

An approach that is probably easier to explain to your professor:

Input dataset:

```
+-----------------------+
|col_1                  |
+-----------------------+
|a,b,c,d;e,f,g,h;,,,    |
|a,b,c,d;e,f,g,h;k,l,m,n|
+-----------------------+
```

Transformations (Scala API):

```scala
import org.apache.spark.sql.functions.{array_join, col, expr, split}

val result = df1
  // split on ";" first, giving an array of groups
  .withColumn("col_2", split(col("col_1"), ";"))
  // map each group to its fourth element (index 3) after splitting it on ","
  .withColumn("col_2", expr("transform(col_2, x -> split(x, ',')[3])"))
  // join the extracted elements with commas
  .withColumn("col_2", array_join(col("col_2"), ","))
```

Final output:

```
+-----------------------+-----+
|col_1                  |col_2|
+-----------------------+-----+
|a,b,c,d;e,f,g,h;,,,    |d,h, |
|a,b,c,d;e,f,g,h;k,l,m,n|d,h,n|
+-----------------------+-----+
```
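The three `withColumn` steps above can be approximated in plain Python to check the logic without Spark (again an illustration, not Spark code; `fourth_per_group` is a made-up name). One assumption to flag: for a group with fewer than four elements, Spark returns null for `split(x, ',')[3]` when ANSI mode is off (as far as I know, with `spark.sql.ansi.enabled=true` it raises an error instead). The sketch models that null with `None`, which `array_join` drops by default.

```python
def fourth_per_group(col_1: str) -> str:
    """Plain-Python approximation of the split/transform/array_join steps (illustration only)."""
    # split(col("col_1"), ";"): one string per ';'-separated group
    groups = col_1.split(";")

    # transform(col_2, x -> split(x, ',')[3]): the fourth element (index 3) of each group.
    # None models Spark's null for an out-of-range index (non-ANSI mode, an assumption).
    picked = []
    for group in groups:
        parts = group.split(",")
        picked.append(parts[3] if len(parts) > 3 else None)

    # array_join(col("col_2"), ","): join with ',' and drop nulls, as array_join does by default
    return ",".join(p for p in picked if p is not None)


for row in ["a,b,c,d;e,f,g,h;,,,", "a,b,c,d;e,f,g,h;k,l,m,n"]:
    print(repr(row), "->", repr(fourth_per_group(row)))
# 'a,b,c,d;e,f,g,h;,,,' -> 'd,h,'
# 'a,b,c,d;e,f,g,h;k,l,m,n' -> 'd,h,n'
```

Run on the inputs from the first answer, this gives `d,h,` and `d,h,n,`, matching that output. The two answers diverge when the last group has no trailing `;`: for `a,b,c,d;e,f,g,h;k,l,m,n`, this approach gives `d,h,n` while the regex one gives `d,h,`.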