I want to transfer data from csv file which is in an azure blob storage with the correct data types to SQL server table. How can I get the structure for the table in the CSV file? ( I mean like when we do script table to new query in SSMS). Note that the CSV file is not available on premise.

CodePudding user response:

If your target table is already created in SSMS, copy activity will take care of the schema of source and target tables.

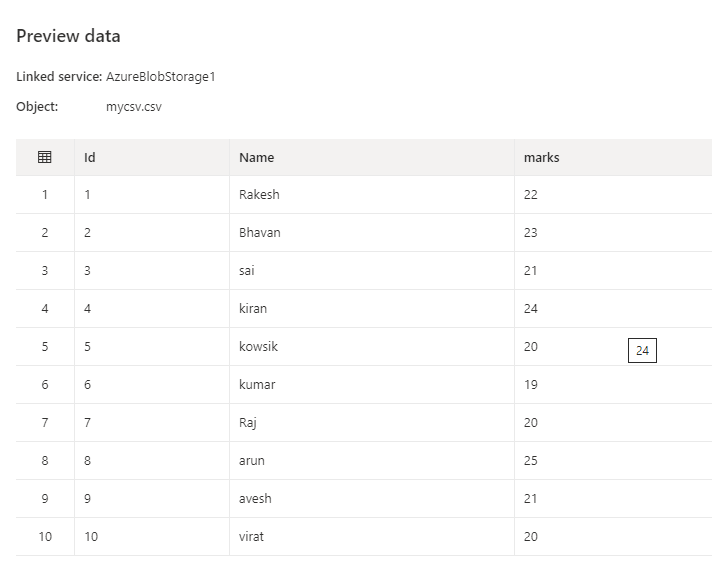

This is my sample csv file from blob:

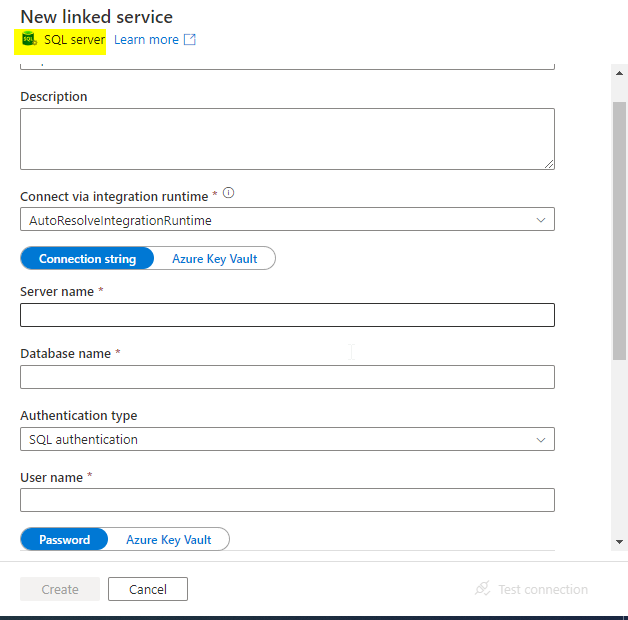

In the sink I have used a table from Azure SQL database. For you, you can create SQL server dataset by SQL server linked service.

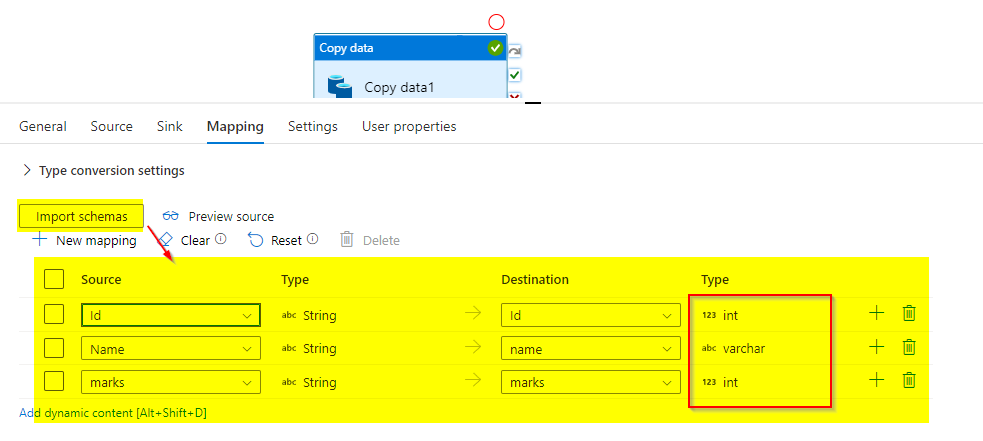

You can see the schema of csv and target tables and their mapping.

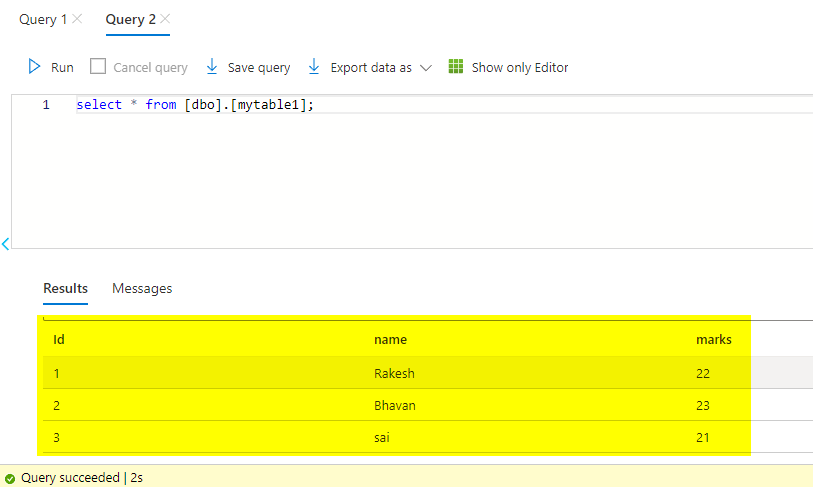

Result:

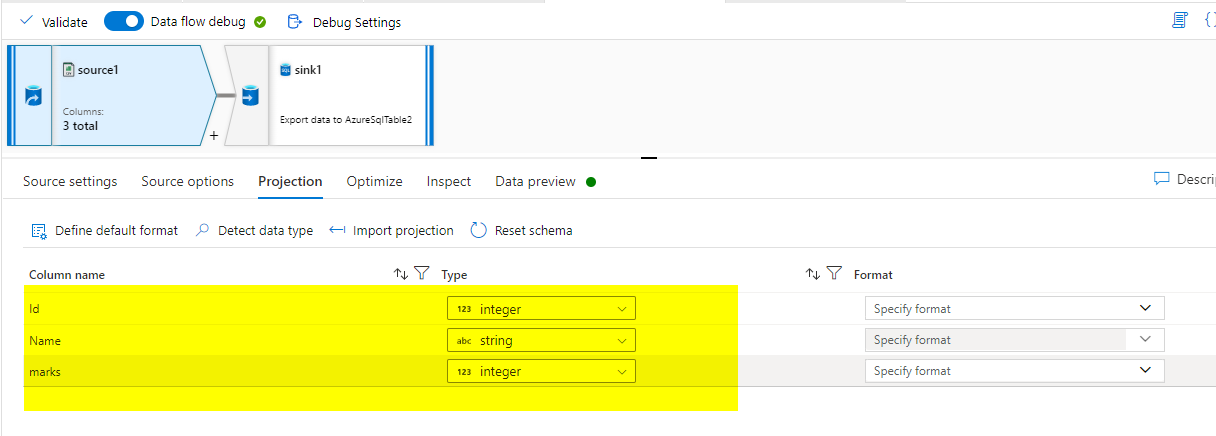

if your target table is not created in SSMS, you can use dataflows and can define the schema that you want in the Projection.

Create a data flow and in the sink give our blob csv file. In the projection of sink, we can give the datatypes that we want for the csv file.

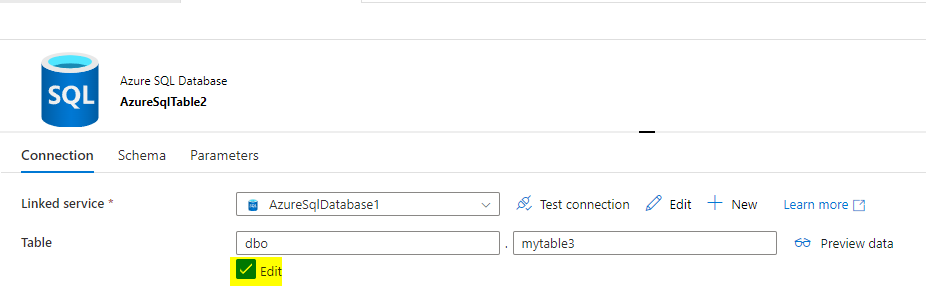

As our target table is not created before, check on edit in the dataset and give the name for the table.

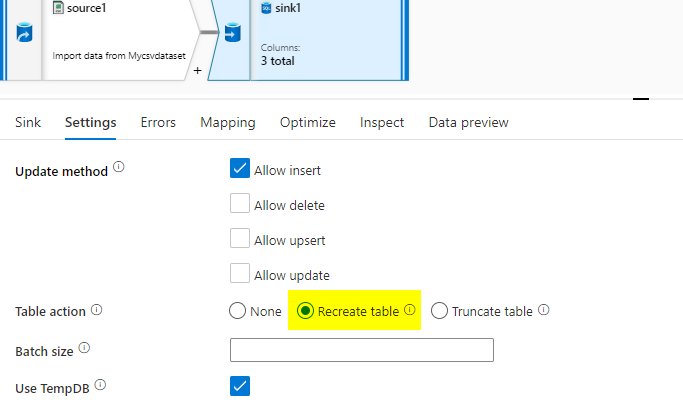

In the sink, give this dataset (SQL server dataset in your case) and make sure you check on the Recreate table in the sink Settings, so that a new table with that name will be created.

Execute this Dataflow, your target table will be created with your user defined data types.