I am trying to cut a region of interest (RoI) out of an image, run some calculations on the resulting snippet, and then either transform the snippet back to its original position or map the coordinates produced by those calculations back into the original image.

Here is the code I use to extract the snippet:

```python
import cv2
import numpy as np

x, y, w, h = cv2.boundingRect(localized_mask)

# corners of the bounding box: bottom-left, bottom-right, top-right, top-left
p1 = [x, y + h]
p4 = [x, y]
p3 = [x + w, y]
p2 = [x + w, y + h]

w1 = int(np.linalg.norm(np.array(p2) - np.array(p3)))
w2 = int(np.linalg.norm(np.array(p4) - np.array(p1)))
h1 = int(np.linalg.norm(np.array(p1) - np.array(p2)))
h2 = int(np.linalg.norm(np.array(p3) - np.array(p4)))
maxWidth = max(w1, w2)
maxHeight = max(h1, h2)

neighbor_points = [p1, p2, p3, p4]
output_points = np.float32(
    [
        [0, 0],
        [0, maxHeight],
        [maxWidth, maxHeight],
        [maxWidth, 0],
    ]
)

matrix = cv2.getPerspectiveTransform(np.float32(neighbor_points), output_points)

# pass the interpolation flag by keyword: the 4th positional parameter is dst
result = cv2.warpPerspective(
    mask, matrix, (maxWidth, maxHeight), flags=cv2.INTER_LINEAR
)
```

Here are some images to illustrate this problem:

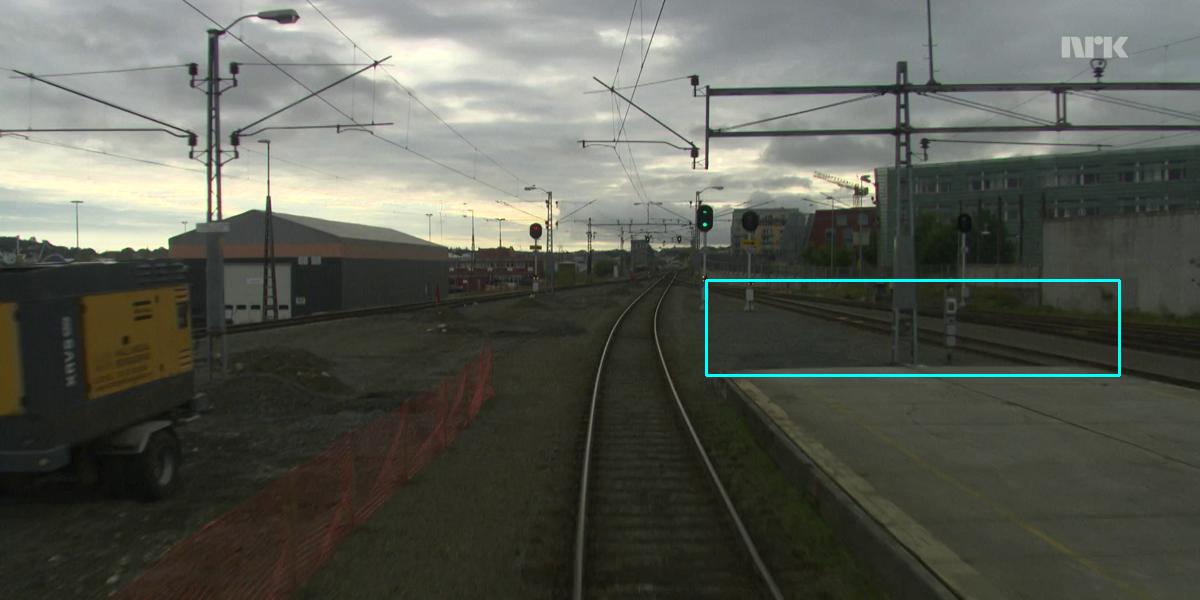

Original with marked RoI:

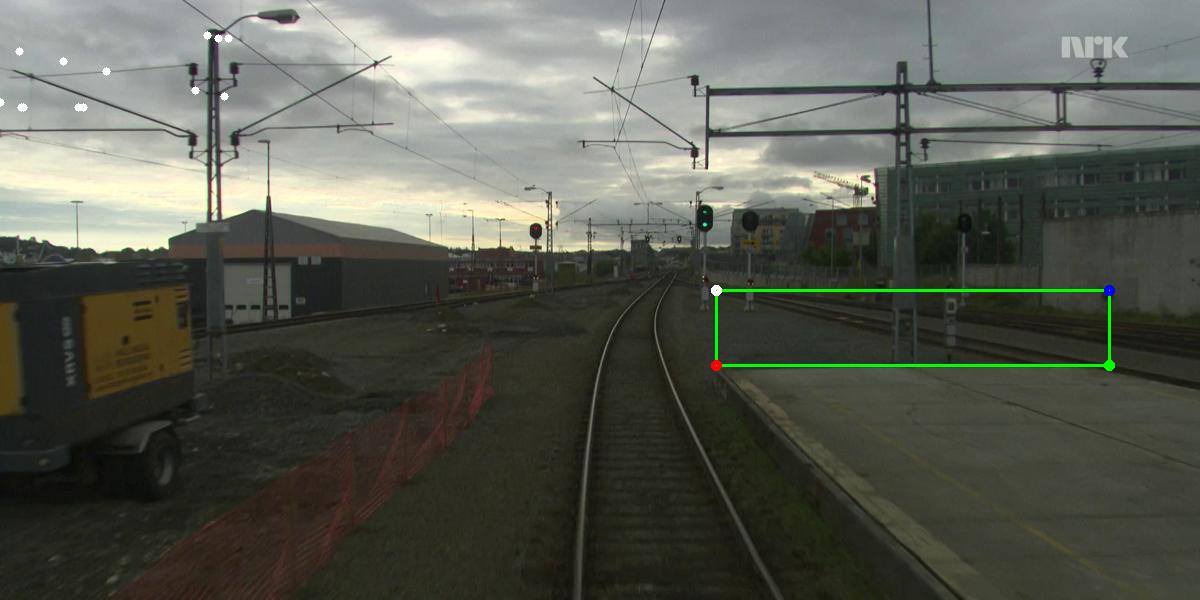

Transformed snippet with markings:

I tried to transform the snippet back into the original position with the following code snippets:

```python
test2 = cv2.warpPerspective(
    result, matrix, (maxHeight, maxWidth), cv2.WARP_INVERSE_MAP
)

test3 = cv2.warpPerspective(
    result, matrix, (img.shape[1], img.shape[0]), cv2.WARP_INVERSE_MAP
)
```

Both produced an all-black image: the first with the shape of the snippet, the second with the shape of the original image.

I am actually more interested in the white markings inside the snippet, so I tried to transform their coordinates by hand:

```python
inverse_matrix = cv2.invert(matrix)[1]
inverse_left = []
for point in output_dict["left"]["knots"]:
    # homogeneous coordinates (x, y, 1)
    trans_point = [point.x, point.y] + [1]
    trans_point = np.float32(trans_point)
    x, y, z = np.dot(inverse_matrix, trans_point)
    new_x = np.uint8(x / z)
    new_y = np.uint8(y / z)
    inverse_left.append([new_x, new_y])
```

However, I didn't account for the position of the RoI inside the image, so the resulting coordinates (the white dots in the upper-left half) didn't end up where I wanted them.
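Assuming `localized_mask` and `mask` share the same coordinate frame, the points passed to `getPerspectiveTransform` are already full-image coordinates, so the inverse matrix should map snippet points straight back to the original image with no extra offset. Two details in the loop above are worth checking instead: the point must be in homogeneous form (x, y, 1) before the multiplication, and `np.uint8` can only hold 0 to 255, so any coordinate above 255 is wrapped or otherwise corrupted, which would plausibly pile the points up near the upper-left corner. Below is a minimal NumPy-only sketch; `snippet_to_image` is a hypothetical helper, `np.linalg.inv` stands in for `cv2.invert`, and the matrix in the usage lines is built by hand for a made-up box at (400, 300) of size 120 x 50.

```python
import numpy as np


def snippet_to_image(points, matrix):
    """Map (x, y) points from snippet coordinates back to the original image.

    `matrix` is the 3x3 transform that maps original -> snippet coordinates.
    """
    inv = np.linalg.inv(matrix)
    mapped = []
    for px, py in points:
        # Homogeneous coordinates: append 1, multiply, then divide by the third component
        xh, yh, zh = inv @ np.array([px, py, 1.0])
        # Round to plain Python ints; np.uint8 would corrupt anything above 255
        mapped.append((int(round(xh / zh)), int(round(yh / zh))))
    return mapped


# Hypothetical box x=400, y=300, w=120, h=50 with the question's corner order:
# snippet u = (y + h) - Y = 350 - Y, snippet v = X - x = X - 400
M = np.array([[0, -1, 350], [1, 0, -400], [0, 0, 1]], dtype=float)
print(snippet_to_image([(40, 20)], M))
```

Here the snippet point (40, 20) maps back to (420, 310), well outside the 0 to 255 range that `np.uint8` can represent.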

Does anybody have an idea what I am doing wrong or know a better solution to this problem? Thanks.

Answer:

I finally found a solution, and it was as simple as I thought it would be.

I inverted the transformation matrix I used to extract the image snippet, then looped over every coordinate produced by my calculation on the snippet and transformed each one with the inverted matrix.

The code looks something like this:

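```python
import cv2
import numpy as np

# cv2.invert returns (retval, inverse); keep only the inverse matrix
inv_matrix = cv2.invert(matrix)[1]

for point in points:
    src = np.array([[[point.x, point.y]]], dtype=np.float64)
    # a 3x3 matrix on 2-channel input yields homogeneous (x', y', w'); divide by w'
    xh, yh, wh = cv2.transform(src, inv_matrix).squeeze()
    x, y = xh / wh, yh / wh
```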