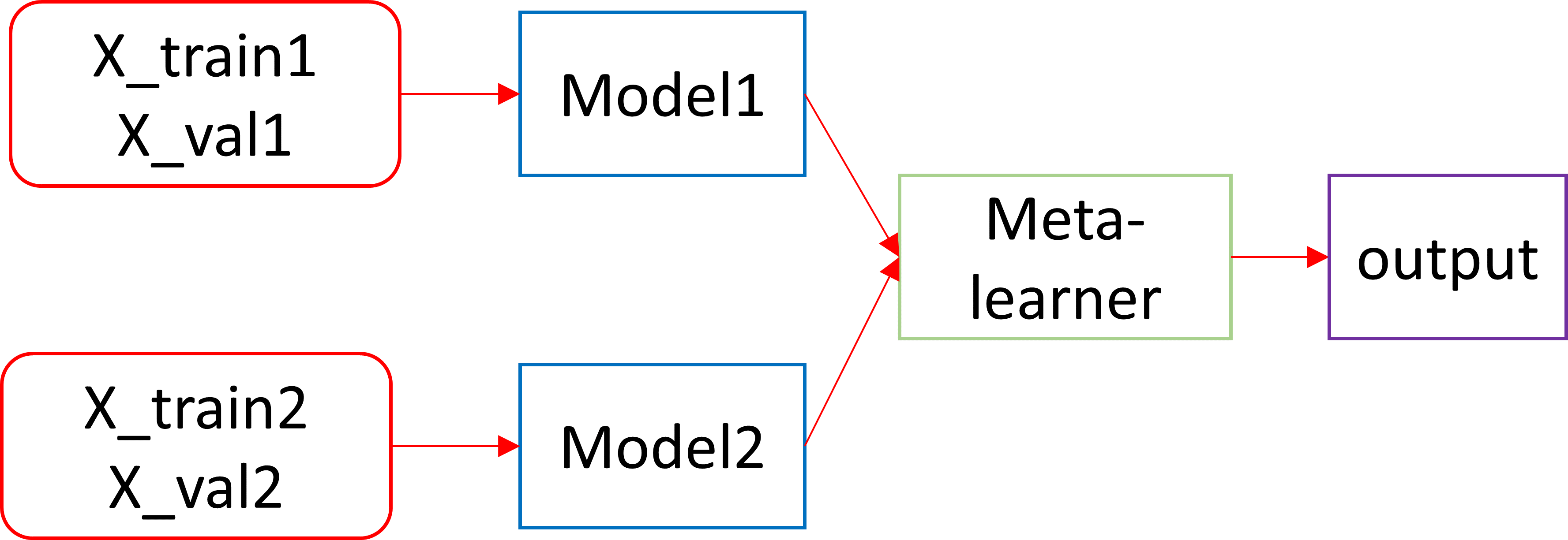

I am stacking two models trained on different inputs from two data collections, as shown below, using Tensorflow Keras 2.6.2. The stacking is performed with a convolutional meta-learner to predict on a common hold-out test set. Given below are the code and the model architecture.

# imports assumed by the code below (sm is taken to be the segmentation_models package)
import numpy as np
import segmentation_models as sm
from tensorflow import keras
from tensorflow.keras.layers import Input, Concatenate, Conv2D
from tensorflow.keras.models import Model

#load data
#dataset-1
X_tr1 = np.load('data/X_tr1.npy') #shape (200, 224,224,3)
Y_tr1 = np.load('data/Y_tr1.npy') #shape (200, 224,224,1)
X_val1 = np.load('data/X_val1.npy') #shape (100, 224,224,3)
Y_val1 = np.load('data/Y_val1.npy') #shape (100, 224,224,1)

#dataset-2
X_tr2 = np.load('data/X_tr2.npy') #shape (200, 224,224,3)
Y_tr2 = np.load('data/Y_tr2.npy') #shape (200, 224,224,1)
X_val2 = np.load('data/X_val2.npy') #shape (100, 224,224,3)
Y_val2 = np.load('data/Y_val2.npy') #shape (100, 224,224,1)

#common hold-out test set
X_ts = np.load('data/X_ts.npy') #shape (50, 224,224,3)
Y_ts = np.load('data/Y_ts.npy') #shape (50, 224,224,1)

#%%
#instantiate the models
img_width, img_height = 224,224
input_shape = (img_width, img_height, 3) #RGB inputs
model_input1 = Input(shape=input_shape) #input to model1
model_input2 = Input(shape=input_shape) #input to model2
n_classes=1 #grayscale mask output
activation='sigmoid'
batch_size = 8
n_epochs = 256
BACKBONE = 'vgg16'

# define model
model1 = sm.Unet(BACKBONE, encoder_weights='imagenet',
                 classes=n_classes, activation=activation)
model2 = sm.Unet(BACKBONE, encoder_weights='imagenet',
                 classes=n_classes, activation=activation)

#%%
# constructing a stacking ensemble of the two models
# A second-level fully-convolutional meta-learner is used to learn
# the features extracted from the penultimate layers of the models
n_models = 2

def load_all_models(n_models):
    all_models = list()
    model1.load_weights('weights/vgg16_1.hdf5') # path to model1
    model_loss1a=Model(inputs=model1.input,
                       outputs=model1.get_layer('decoder_stage4b_relu').output) #name of the penultimate layer
    x1 = model_loss1a.output
    model1a = Model(inputs=model1.input, outputs=x1, name='model1')
    all_models.append(model1a)
    model2.load_weights('weights/vgg16_2.hdf5') #path to model2
    model_loss2a=Model(inputs=model2.input,
                       outputs=model2.get_layer('decoder_stage4b_relu').output)
    x2 = model_loss2a.output
    model2a = Model(inputs=model2.input, outputs=x2, name='model2')
    all_models.append(model2a)
    return all_models

# load models
n_members = 2
members = load_all_models(n_members)
print('Loaded %d models' % len(members))

def define_stacked_model(members):
    # update all layers in all models to not be trainable
    for i in range(len(members)):
        model = members[i]
        for layer in model.layers [1:]:
            # make not trainable
            layer.trainable = False
            layer._name = 'ensemble_' + str(i + 1) + '_' + layer.name
    ensemble_outputs = [model(model_input1, model_input2) for model in members]
    merge = Concatenate()(ensemble_outputs)
    # meta-learner, fully-convolutional
    x4 = Conv2D(128, (3,3), activation='relu',
                name = 'NewConv1', padding='same')(merge)
    x5 = Conv2D(1, (1,1), activation='sigmoid',
                name = 'NewConvfinal')(x4)
    # the final sigmoid layer is the model output, as in the summary below
    model= Model(inputs=[model_input1,model_input2],
                 outputs=x5)
    return model

print("Creating Ensemble")
ensemble = define_stacked_model(members)
print("Ensemble architecture: ")
print(ensemble.summary())

Shown below is the architecture of the stacked model:

Model: "model_4"
__________________________________________________________________________________________________
Layer (type)                    Output Shape         Param #     Connected to
==================================================================================================
input_1 (InputLayer)            [(None, 224, 224, 3) 0
__________________________________________________________________________________________________
input_2 (InputLayer)            [(None, 224, 224, 3) 0
__________________________________________________________________________________________________
model1 (Functional)             (None, None, None, 1 23752128    input_1[0][0]
                                                                 input_2[0][0]
__________________________________________________________________________________________________
model2 (Functional)             (None, None, None, 1 23752128    input_1[0][0]
                                                                 input_2[0][0]
__________________________________________________________________________________________________
concatenate (Concatenate)       (None, 224, 224, 32) 0           model1[0][0]
                                                                 model2[0][0]
__________________________________________________________________________________________________
NewConv1 (Conv2D)               (None, 224, 224, 128 36992       concatenate[0][0]
__________________________________________________________________________________________________
NewConv2 (Conv2D)               (None, 224, 224, 64) 73792       NewConv1[0][0]
__________________________________________________________________________________________________
NewConv3 (Conv2D)               (None, 224, 224, 32) 18464       NewConv2[0][0]
__________________________________________________________________________________________________
NewConvfinal (Conv2D)           (None, 224, 224, 1)  33          NewConv3[0][0]
==================================================================================================
Total params: 47,633,537
Trainable params: 129,281
Non-trainable params: 47,504,256

I compile and train the model as shown below:

opt = keras.optimizers.Adam(learning_rate=0.001)
loss_func='binary_crossentropy'
ensemble.compile(optimizer=opt,
                 loss=loss_func,
                 metrics=['binary_accuracy'])
results_ensemble = ensemble.fit((X_tr1, Y_tr1, X_tr2, Y_tr2),
                                batch_size=batch_size,
                                epochs=n_epochs,
                                verbose=1,
                                validation_data=(X_val1, Y_val1, X_val2, Y_val2))

I get the following error:

Traceback (most recent call last):
  File "/home/codes/untitled5.py", line 563, in <module>
    validation_data=(X_val1, Y_val1, X_val2, Y_val2))
  File "/home/anaconda3/envs/tf262/lib/python3.7/site-packages/keras/engine/training.py", line 1125, in fit
    data_adapter.unpack_x_y_sample_weight(validation_data))
  File "/home/anaconda3/envs/tf262/lib/python3.7/site-packages/keras/engine/data_adapter.py", line 1574, in unpack_x_y_sample_weight
    raise ValueError(error_msg)
ValueError: Data is expected to be in format `x`, `(x,)`, `(x, y)`, or `(x, y, sample_weight)`, found: (array([[[[0.09803922, 0.09803922, 0.09803922],
         [0.09803922, 0.09803922, 0.09803922],
         [0.09803922, 0.09803922, 0.09803922],
         ...,
         [0.08627451, 0.08627451, 0.08627451],
         [0.08627451, 0.08627451, 0.08627451],
         [0.05098039, 0.05098039, 0.05098039]],...

Also, how do I predict on the single X_ts, given that the ensemble model now has two separate inputs?

New error after trying to implement the suggestions:

  File "/home/codes/untitled5.py", line 595, in <module>
    validation_data=outputs)
  File "/home/anaconda3/envs/tf262/lib/python3.7/site-packages/keras/engine/training.py", line 1184, in fit
    tmp_logs = self.train_function(iterator)
ValueError: Layer model_4 expects 2 input(s), but it received 4 input tensors. Inputs received: [<tf.Tensor 'IteratorGetNext:0' shape=(None, 224, 224, 3) dtype=float32>, <tf.Tensor 'IteratorGetNext:1' shape=(None, 224, 224, 1) dtype=float32>, <tf.Tensor 'IteratorGetNext:2' shape=(None, 224, 224, 3) dtype=float32>, <tf.Tensor 'IteratorGetNext:3' shape=(None, 224, 224, 1) dtype=float32>]

CodePudding user response:

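Answer based on the comments. A multi-input model expects its inputs as a list with one array per input layer, not as a flat tuple that also contains the targets. The targets are passed separately, as the y argument of fit or as the second element of validation_data.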
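To see why the flat four-element tuple is rejected, here is a simplified stand-in for the check behind the ValueError in the question. It is an illustration, not the Keras source: the function name unpack_fit_data is made up, and plain strings replace the NumPy arrays. Only the accepted formats are taken from the error message itself.

```python
def unpack_fit_data(data):
    """Hypothetical, simplified version of the (x, y, sample_weight) check."""
    if not isinstance(data, tuple):
        return data, None, None            # bare `x`
    if len(data) == 1:
        return data[0], None, None         # (x,)
    if len(data) == 2:
        return data[0], data[1], None      # (x, y)
    if len(data) == 3:
        return data[0], data[1], data[2]   # (x, y, sample_weight)
    raise ValueError(
        "Data is expected to be in format `x`, `(x,)`, `(x, y)`, "
        "or `(x, y, sample_weight)`"
    )


# Strings stand in for the arrays from the question.
flat = ("X_val1", "Y_val1", "X_val2", "Y_val2")
try:
    unpack_fit_data(flat)
    flat_accepted = True
except ValueError:
    flat_accepted = False  # four elements match none of the accepted formats

# Grouping the two inputs into one list leaves a valid (x, y) pair.
x, y, sample_weight = unpack_fit_data((["X_val1", "X_val2"], "Y_val"))
print(flat_accepted)
print(x, y, sample_weight)
```

The second call shows the shape the data has to take: one list holding the two inputs, followed by a single target.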

Change:

results_ensemble = ensemble.fit((X_tr1, Y_tr1, X_tr2, Y_tr2),
                                batch_size=batch_size,
                                epochs=n_epochs,
                                verbose=1,
                                validation_data=(X_val1, Y_val1, X_val2, Y_val2))

To:

inputs = [X_tr1, X_tr2] # one array per model input; you can pass the list itself or the variable
# y_train and y_val are placeholder names: one target array per split,
# aligned sample by sample with the paired inputs
results_ensemble = ensemble.fit(inputs, y_train,
                                batch_size=batch_size,
                                epochs=n_epochs,
                                verbose=1,
                                validation_data=([X_val1, X_val2], y_val))

# test_inputs_diff = [x_test1, x_test2] # different input
# test_inputs_same = [x_test1, x_test1] # same input
# preds_diff = ensemble.predict(test_inputs_diff)
# preds_same = ensemble.predict(test_inputs_same)
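A minimal end-to-end sketch of the same data layout, under stated assumptions: tiny_member is a hypothetical stand-in for the frozen U-Net feature extractors, small random 16x16 arrays replace the real data, and each member is wired to its own input. It is meant to show how fit and predict receive their data, not to reproduce the original models.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers


def tiny_member(name):
    """Hypothetical stand-in for one frozen base model (feature extractor)."""
    inp = keras.Input(shape=(16, 16, 3))
    out = layers.Conv2D(4, 3, padding="same", activation="relu")(inp)
    member = keras.Model(inp, out, name=name)
    member.trainable = False  # only the meta-learner should be trained
    return member


in1 = keras.Input(shape=(16, 16, 3))
in2 = keras.Input(shape=(16, 16, 3))

# Each member is called on exactly one input tensor.
features = [tiny_member("member1")(in1), tiny_member("member2")(in2)]
merged = layers.Concatenate()(features)

# Fully-convolutional meta-learner on top of the concatenated features.
hidden = layers.Conv2D(8, 3, padding="same", activation="relu")(merged)
mask = layers.Conv2D(1, 1, activation="sigmoid")(hidden)

ensemble = keras.Model(inputs=[in1, in2], outputs=mask)
ensemble.compile(optimizer="adam", loss="binary_crossentropy")

# Random placeholder data: two input arrays and one aligned target array.
rng = np.random.default_rng(0)
x_a = rng.random((8, 16, 16, 3)).astype("float32")
x_b = rng.random((8, 16, 16, 3)).astype("float32")
y = (rng.random((8, 16, 16, 1)) > 0.5).astype("float32")

# x is a list with one array per Input; the target is passed separately.
ensemble.fit([x_a, x_b], y, batch_size=4, epochs=1, verbose=0,
             validation_data=([x_a, x_b], y))

# A single test set can be fed to both inputs.
x_ts = rng.random((2, 16, 16, 3)).astype("float32")
preds = ensemble.predict([x_ts, x_ts], verbose=0)
print(preds.shape)  # (2, 16, 16, 1)
```

Feeding X_ts twice is only one option and assumes both members should see the same test image. For training, the single target array has to line up sample by sample with the paired inputs, so two independently collected training sets would first need to be paired or pooled in a way that makes sense for the task.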