Let's say I am using COPY to stream data into my database.

COPY some_table FROM STDIN

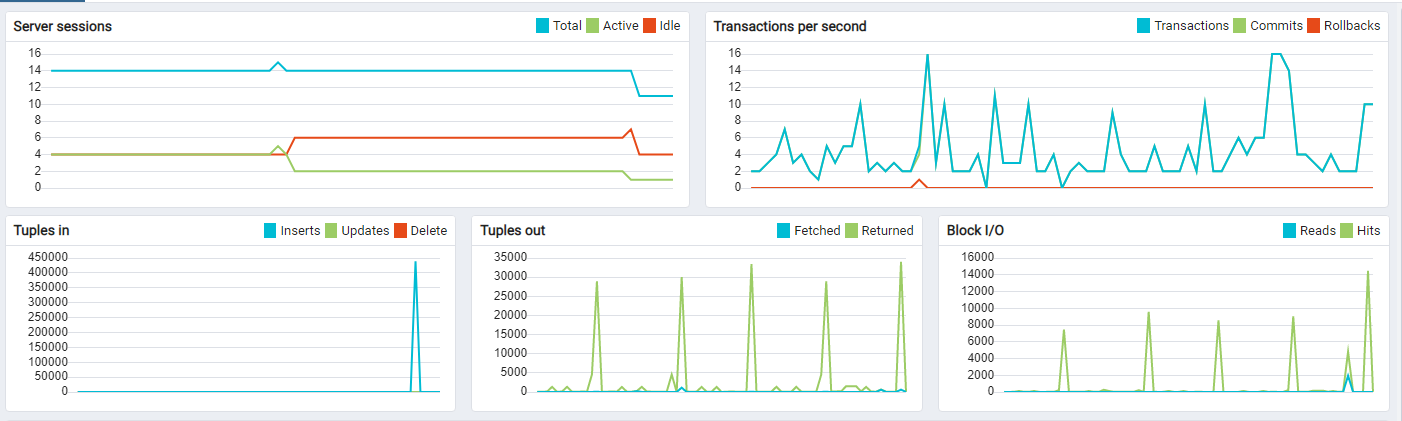

I noticed that AFTER stream had finished, database needs significant amount of time to process this data and input these variables into the table. In PgAdmin's monitoring I can see that there are nearly 0 table writes throughout streaming process and then suddenly everything writes in 1 peak.

Some statistics:

- I am inserting 450k rows into one table without indexes or keys,

- table has 28 fields,

- I am sending all

NULLs to every field

I am worried that there are problems with my implementation of streams. Is it how streaming works? Database is waiting to gather all text to then execute one gigantic command?

CodePudding user response:

COPY inserts the rows as they are sent, so the data are really streamed. But PostgreSQL doesn't write them to disk immediately: rather, it only writes transaction log (WAL) information to disk, and the actual rows are written to the shared memory cache. The data are persisted later, during the next checkpoint. There is a delay between the start of COPY and actual writing to disk, which could explain what you observe.

CodePudding user response:

The monitoring charts provided in pgAdmin are not fit for the purpose you are putting them to. Most of that data is coming from the stats collector, and that is generally only updated once per statement or transaction, at the end of the statement or transaction. So they are pretty much useless for monitoring rare, large, ongoing operations.

The type of monitoring you want to do is best done with OS tools, like top, vmstat, or sar.