I have a PySpark job that is split across multiple code files in this structure:

- flexible_calendar
  - Cache
    - redis_main.py
  - Helpers
    - helpers.py
  - Spark
    - spark_main.py
  - main.py

In 'main.py' I use functions from 'helpers.py', 'redis_main.py', etc.

The 'flexible_calendar' folder is uploaded to an S3 bucket so that EMR can run the code from it.

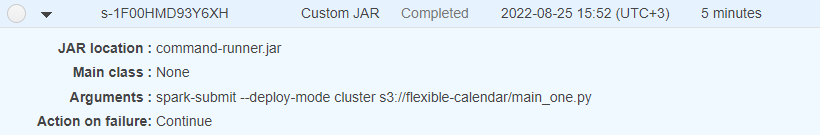

I've created an EMR cluster that is bootstrapped with all the needed packages, and it works when I run a simple single-file script (from S3) that contains all the functions:

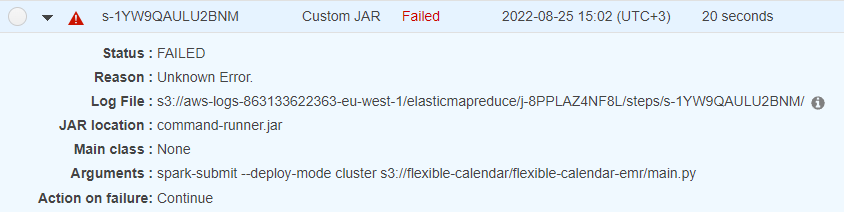

The problem is that when I use the multi-file structure, the code fails because it can't find the modules 'helpers.py', 'spark_main.py', etc.

I've tried multiple configurations in the 'Step Arguments' field, none of which worked, such as:

Arguments: spark-submit --deploy-mode cluster s3://flexible-calendar/flexible-calendar-emr

Arguments: spark-submit --deploy-mode cluster s3://flexible-calendar/flexible-calendar-emr/Cache/redis_main.py s3://flexible-calendar/flexible-calendar-emr/Helpers/helpers.py s3://flexible-calendar/flexible-calendar-emr/Spark/spark_main.py s3://flexible-calendar/flexible-calendar-emr/main.py

Arguments: spark-submit --deploy-mode cluster --class s3://flexible-calendar/flexible-calendar-emr s3://flexible-calendar/flexible-calendar-emr/main.py

Arguments: spark-submit --deploy-mode cluster --class s3://flexible-calendar/main_one.py

Also:

Arguments: spark-submit --py-files s3://flexible-calendar/flexible-calendar-emr.zip

Arguments: spark-submit --deploy-mode --py-files s3://flexible-calendar/flexible-calendar-emr.zip

Arguments: spark-submit --py-files s3://flexible-calendar/flexible-calendar-emr.zip --deploy-mode cluster s3://flexible-calendar/flexible-calendar-emr/Spark/spark_main.py

Arguments: spark-submit --deploy-mode cluster s3://flexible-calendar/flexible-calendar-emr/Spark/spark_main.py --py-files s3://flexible-calendar/flexible-calendar-emr.zip

and more...

Hope someone could help,

Thanks.

CodePudding user response:

Quoting from Spark Documentation:

For Python, you can use the --py-files argument of spark-submit to add .py, .zip or .egg files to be distributed with your application. If you depend on multiple Python files we recommend packaging them into a .zip or .egg.
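As a rough illustration of the packaging step the documentation recommends, here is a minimal sketch that zips every .py file under a project folder while keeping the sub-folder layout. The function name `build_py_files_zip` is made up for this example, and the idea that the folder from the question should be zipped relative to its own root is an assumption about how the imports in 'main.py' are written.

```python
import os
import zipfile


def build_py_files_zip(source_dir, zip_path):
    """Zip every .py file under source_dir, storing paths relative to source_dir.

    Hypothetical helper for illustration; it is not part of Spark or EMR.
    """
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(source_dir):
            for name in sorted(files):
                if name.endswith(".py"):
                    full_path = os.path.join(root, name)
                    # Keep "Helpers/helpers.py" style paths inside the archive
                    arcname = os.path.relpath(full_path, source_dir)
                    zf.write(full_path, arcname)
    return zip_path
```

With such an archive uploaded to S3, a step along the lines of `spark-submit --deploy-mode cluster --py-files s3://flexible-calendar/flexible-calendar-emr.zip s3://flexible-calendar/flexible-calendar-emr/main.py` would be the usual shape. Note that spark-submit options have to come before the application file, because anything placed after it is passed to the application as its own arguments.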

CodePudding user response:

So the zip file you have created needs to be added to sys.path inside 'main.py', before your own modules are imported:

    import os
    import sys

    if os.path.exists('flexible-calendar-emr.zip'):
        sys.path.insert(0, 'flexible-calendar-emr.zip')
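To see why this works, here is a small self-contained sketch that can be run locally without Spark: Python can import modules straight from a zip file once that file is on sys.path. The temporary zip, the `add_zip_to_path` helper, the empty `Helpers/__init__.py`, and the `add_days` function are all assumptions made up for the demo; only the zip file name and the 'Helpers/helpers.py' layout come from the question.

```python
import importlib
import os
import sys
import tempfile
import zipfile


def add_zip_to_path(zip_path):
    """Put zip_path at the front of sys.path if the file exists; report whether it did."""
    if os.path.exists(zip_path):
        sys.path.insert(0, zip_path)
        return True
    return False


# Build a throwaway zip that mimics the layout from the question.
# The __init__.py file and the add_days function are assumptions for this demo.
work_dir = tempfile.mkdtemp()
demo_zip = os.path.join(work_dir, "flexible-calendar-emr.zip")
with zipfile.ZipFile(demo_zip, "w") as zf:
    zf.writestr("Helpers/__init__.py", "")
    zf.writestr(
        "Helpers/helpers.py",
        "def add_days(day, offset):\n    return day + offset\n",
    )

added = add_zip_to_path(demo_zip)
importlib.invalidate_caches()

# The import is resolved from inside the zip archive
helpers = importlib.import_module("Helpers.helpers")
result = helpers.add_days(3, 4)
print(added, result)
```

In the real job, the equivalent would be ordinary imports in 'main.py' (for example from the Helpers folder) placed after the sys.path.insert line. Including an `__init__.py` in each folder is a cautious choice here so that the folders behave as regular packages inside the archive.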

Let me know if this helps!