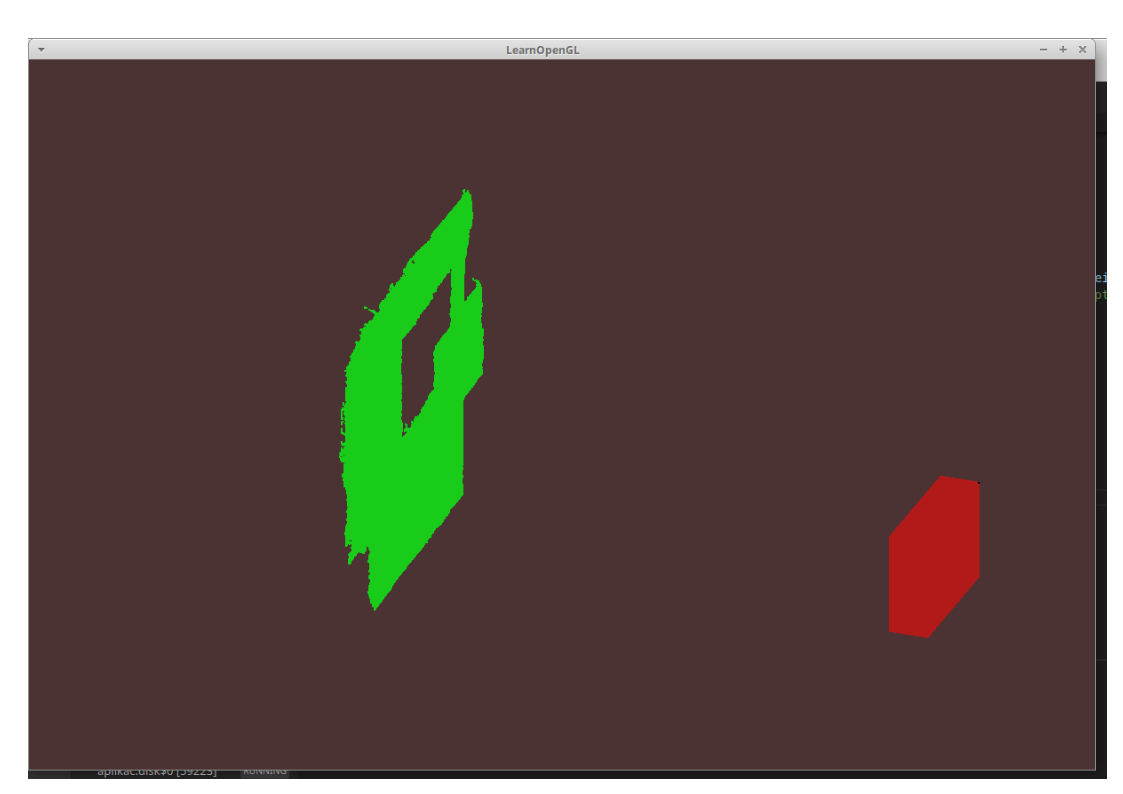

I am a new at Point cloud library. I'm trying to do example from website

Here is a export from terminal...

Failed to find match for field 'rgba'. Failed to find match for field 'rgba'. Model resolution: 0.999988 Model sampling size: 9.99988 Scene sampling size: 19.9998 LRF support radius: 15.4998 SHOT descriptor radius: 19.9998 Clustering bin size: 9.99988

Model total points: 256804; Selected Keypoints: 2528 Scene total points: 573194; Selected Keypoints: 2178 [pcl::SHOTEstimation::createBinDistanceShape] Point 4 has 4 (1.328904%) NaN normals in its neighbourhood [pcl::SHOTEstimation::createBinDistanceShape] Point 16 has 4 (1.365188%) NaN normals in its neighbourhood [pcl::SHOTEstimation::createBinDistanceShape] Point 17 has 2 (0.651466%) NaN normals in its neighbourhood [pcl::SHOTEstimation::computeFeature] The local reference frame is not valid! Aborting description of point with index 31 [pcl::SHOTEstimation::computeFeature] The local reference frame is not valid! Aborting description of point with index 34 [pcl::SHOTEstimation::computeFeature] The local reference frame is not valid! Aborting description of point with index 348 [pcl::SHOTEstimation::computeFeature] The local reference frame is not valid! Aborting description of point with index 656 [pcl::SHOTEstimation::computeFeature] The local reference frame is not valid! Aborting description of point with index 204 Correspondences found: 4 Model instances found: 0

I tunned pararameters of each part of program, but nothing helped me. I'm not really sure that only 1 plane of model fits to scene. Maybe better scene with others sides of box, but it is more complicated because I must match (register) more scene views.

Here is a few lines of code...

std::string model_filename_ = "model.ply";

std::string scene_filename_ = "scene.ply";

//Algorithm params

bool show_keypoints_ (false);

bool show_correspondences_ (false);

bool use_cloud_resolution_ (true);

bool use_hough_ (true);

float model_ss_ (10.0f);

float scene_ss_ (20.0f);

float rf_rad_ (15.5f);

float descr_rad_ (20.0f);

float cg_size_ (10.0f);

float cg_thresh_ (5.0f);

double computeCloudResolution (const pcl::PointCloud<PointType>::ConstPtr &cloud)

{

double res = 0.0;

int n_points = 0;

int nres;

std::vector<int> indices (2);

std::vector<float> sqr_distances (2);

pcl::search::KdTree<PointType> tree;

tree.setInputCloud (cloud);

for (std::size_t i = 0; i < cloud->size (); i)

{

if (! std::isfinite ((*cloud)[i].x))

{

continue;

}

//Considering the second neighbor since the first is the point itself.

nres = tree.nearestKSearch (i, 2, indices, sqr_distances);

if (nres == 2)

{

res = sqrt (sqr_distances[1]);

n_points;

}

}

if (n_points != 0)

{

res /= n_points;

}

return res;

}

int main (int argc, char *argv[])

{

n_body = 10000000;

zasobnikGL = new float[n_body];

pcl::PointCloud<PointType>::Ptr model (new pcl::PointCloud<PointType> ());

pcl::PointCloud<PointType>::Ptr model_keypoints (new pcl::PointCloud<PointType> ());

pcl::PointCloud<PointType>::Ptr scene (new pcl::PointCloud<PointType> ());

pcl::PointCloud<PointType>::Ptr scene_keypoints (new pcl::PointCloud<PointType> ());

pcl::PointCloud<NormalType>::Ptr model_normals (new pcl::PointCloud<NormalType> ());

pcl::PointCloud<NormalType>::Ptr scene_normals (new pcl::PointCloud<NormalType> ());

pcl::PointCloud<DescriptorType>::Ptr model_descriptors (new pcl::PointCloud<DescriptorType> ());

pcl::PointCloud<DescriptorType>::Ptr scene_descriptors (new pcl::PointCloud<DescriptorType> ());

//

// Load clouds

//

//if (pcl::io::loadPCDFile (model_filename_, *model) < 0)

if(pcl::io::loadPLYFile(model_filename_, *model) < 0)

{

std::cout << "Error loading model cloud." << std::endl;

return (-1);

}

//if (pcl::io::loadPCDFile (scene_filename_, *scene) < 0)

if(pcl::io::loadPLYFile(scene_filename_, *scene) < 0)

{

std::cout << "Error loading scene cloud." << std::endl;

return (-1);

}

pcl::PointCloud<PointType>::Ptr scene_copy (new pcl::PointCloud<PointType> ());

*scene_copy = *scene;

//

// Set up resolution invariance

//

if (use_cloud_resolution_)

{

float resolution = static_cast<float> (computeCloudResolution (model));

if (resolution != 0.0f)

{

model_ss_ *= resolution;

scene_ss_ *= resolution;

rf_rad_ *= resolution;

descr_rad_ *= resolution;

cg_size_ *= resolution;

}

std::cout << "Model resolution: " << resolution << std::endl;

std::cout << "Model sampling size: " << model_ss_ << std::endl;

std::cout << "Scene sampling size: " << scene_ss_ << std::endl;

std::cout << "LRF support radius: " << rf_rad_ << std::endl;

std::cout << "SHOT descriptor radius: " << descr_rad_ << std::endl;

std::cout << "Clustering bin size: " << cg_size_ << std::endl << std::endl;

}

//

// Compute Normals

//

pcl::NormalEstimationOMP<PointType, NormalType> norm_est;

norm_est.setKSearch (10); //10

norm_est.setInputCloud (model);

norm_est.compute (*model_normals);

norm_est.setInputCloud (scene);

norm_est.compute (*scene_normals);

//

// Downsample Clouds to Extract keypoints

//

pcl::UniformSampling<PointType> uniform_sampling;

uniform_sampling.setInputCloud (model);

uniform_sampling.setRadiusSearch (model_ss_);

uniform_sampling.filter (*model_keypoints);

std::cout << "Model total points: " << model->size () << "; Selected Keypoints: " << model_keypoints->size () << std::endl;

uniform_sampling.setInputCloud (scene);

uniform_sampling.setRadiusSearch (scene_ss_);

uniform_sampling.filter (*scene_keypoints);

std::cout << "Scene total points: " << scene->size () << "; Selected Keypoints: " << scene_keypoints->size () << std::endl;

//

// Compute Descriptor for keypoints

//

pcl::SHOTEstimationOMP<PointType, NormalType, DescriptorType> descr_est;

descr_est.setRadiusSearch (descr_rad_);

descr_est.setInputCloud (model_keypoints);

descr_est.setInputNormals (model_normals);

descr_est.setSearchSurface (model);

descr_est.compute (*model_descriptors);

descr_est.setInputCloud (scene_keypoints);

descr_est.setInputNormals (scene_normals);

descr_est.setSearchSurface (scene);

descr_est.compute (*scene_descriptors);

//

// Find Model-Scene Correspondences with KdTree

//

pcl::CorrespondencesPtr model_scene_corrs (new pcl::Correspondences ());

pcl::KdTreeFLANN<DescriptorType> match_search;

match_search.setInputCloud (model_descriptors);

// For each scene keypoint descriptor, find nearest neighbor into the model keypoints descriptor cloud and add it to the correspondences vector.

for (std::size_t i = 0; i < scene_descriptors->size (); i)

{

std::vector<int> neigh_indices (1);

std::vector<float> neigh_sqr_dists (1);

if (!std::isfinite (scene_descriptors->at (i).descriptor[0])) //skipping NaNs

{

continue;

}

int found_neighs = match_search.nearestKSearch (scene_descriptors->at (i), 1, neigh_indices, neigh_sqr_dists);

if(found_neighs == 1 && neigh_sqr_dists[0] < 0.25f) // add match only if the squared descriptor distance is less than 0.25 (SHOT descriptor distances are between 0 and 1 by design)

{

pcl::Correspondence corr (neigh_indices[0], static_cast<int> (i), neigh_sqr_dists[0]);

model_scene_corrs->push_back (corr);

}

}

std::cout << "Correspondences found: " << model_scene_corrs->size () << std::endl;

//

// Actual Clustering

//

std::vector<Eigen::Matrix4f, Eigen::aligned_allocator<Eigen::Matrix4f> > rototranslations;

std::vector<pcl::Correspondences> clustered_corrs;

// Using Hough3D

if (use_hough_)

{

//

// Compute (Keypoints) Reference Frames only for Hough

//

pcl::PointCloud<RFType>::Ptr model_rf (new pcl::PointCloud<RFType> ());

pcl::PointCloud<RFType>::Ptr scene_rf (new pcl::PointCloud<RFType> ());

pcl::BOARDLocalReferenceFrameEstimation<PointType, NormalType, RFType> rf_est;

rf_est.setFindHoles (true);

rf_est.setRadiusSearch (rf_rad_);

rf_est.setInputCloud (model_keypoints);

rf_est.setInputNormals (model_normals);

rf_est.setSearchSurface (model);

rf_est.compute (*model_rf);

rf_est.setInputCloud (scene_keypoints);

rf_est.setInputNormals (scene_normals);

rf_est.setSearchSurface (scene);

rf_est.compute (*scene_rf);

// Clustering

pcl::Hough3DGrouping<PointType, PointType, RFType, RFType> clusterer;

clusterer.setHoughBinSize (cg_size_);

clusterer.setHoughThreshold (cg_thresh_);

clusterer.setUseInterpolation (true);

clusterer.setUseDistanceWeight (false);

clusterer.setInputCloud (model_keypoints);

clusterer.setInputRf (model_rf);

clusterer.setSceneCloud (scene_keypoints);

clusterer.setSceneRf (scene_rf);

clusterer.setModelSceneCorrespondences (model_scene_corrs);

//clusterer.cluster (clustered_corrs);

clusterer.recognize (rototranslations, clustered_corrs);

}

else // Using GeometricConsistency

{

pcl::GeometricConsistencyGrouping<PointType, PointType> gc_clusterer;

gc_clusterer.setGCSize (cg_size_);

gc_clusterer.setGCThreshold (cg_thresh_);

gc_clusterer.setInputCloud (model_keypoints);

gc_clusterer.setSceneCloud (scene_keypoints);

gc_clusterer.setModelSceneCorrespondences (model_scene_corrs);

//gc_clusterer.cluster (clustered_corrs);

gc_clusterer.recognize (rototranslations, clustered_corrs);

}

std::cout << "Model instances found: " << rototranslations.size () << std::endl;

for (std::size_t i = 0; i < rototranslations.size (); i)

{

std::cout << "\n Instance " << i 1 << ":" << std::endl;

std::cout << " Correspondences belonging to this instance: " << clustered_corrs[i].size () << std::endl;

// Print the rotation matrix and translation vector

Eigen::Matrix3f rotation = rototranslations[i].block<3,3>(0, 0);

Eigen::Vector3f translation = rototranslations[i].block<3,1>(0, 3);

printf ("\n");

printf (" | %6.3f %6.3f %6.3f | \n", rotation (0,0), rotation (0,1), rotation (0,2));

printf (" R = | %6.3f %6.3f %6.3f | \n", rotation (1,0), rotation (1,1), rotation (1,2));

printf (" | %6.3f %6.3f %6.3f | \n", rotation (2,0), rotation (2,1), rotation (2,2));

printf ("\n");

printf (" t = < %0.3f, %0.3f, %0.3f >\n", translation (0), translation (1), translation (2));

}

pcl::PointCloud<PointType>::Ptr rotated_model (new pcl::PointCloud<PointType> ());

pcl::transformPointCloud (*model, *rotated_model, rototranslations[0]);

And you can download the ply files too. https://www.uschovna.cz/zasilka/CK5T2STSWMPWR8TA-S8J/

I would like to ask you if you have any experiance with this problem or is it good way to fix it. Thank you very much.

CodePudding user response:

Correspondence grouping will probably not work well in your scenario (detecting a box on a table) because the object is too simple and doesn't have many unique features. Something that might work better (and faster): If you know that the box is always on a large table, you could first use a sample consensus method (e.g. RANSAC) to find the table plane and remove the table and everything below it. If you know that the box is the only object on the table, you are pretty much finished. You could compute the centroid or convex hull of the remaining points, depending on what you need exactly. You will find more examples in the other PCL tutorials.