I am running MongoDB version 4.2.9 (the same issue was present in 4.2.1 as well).

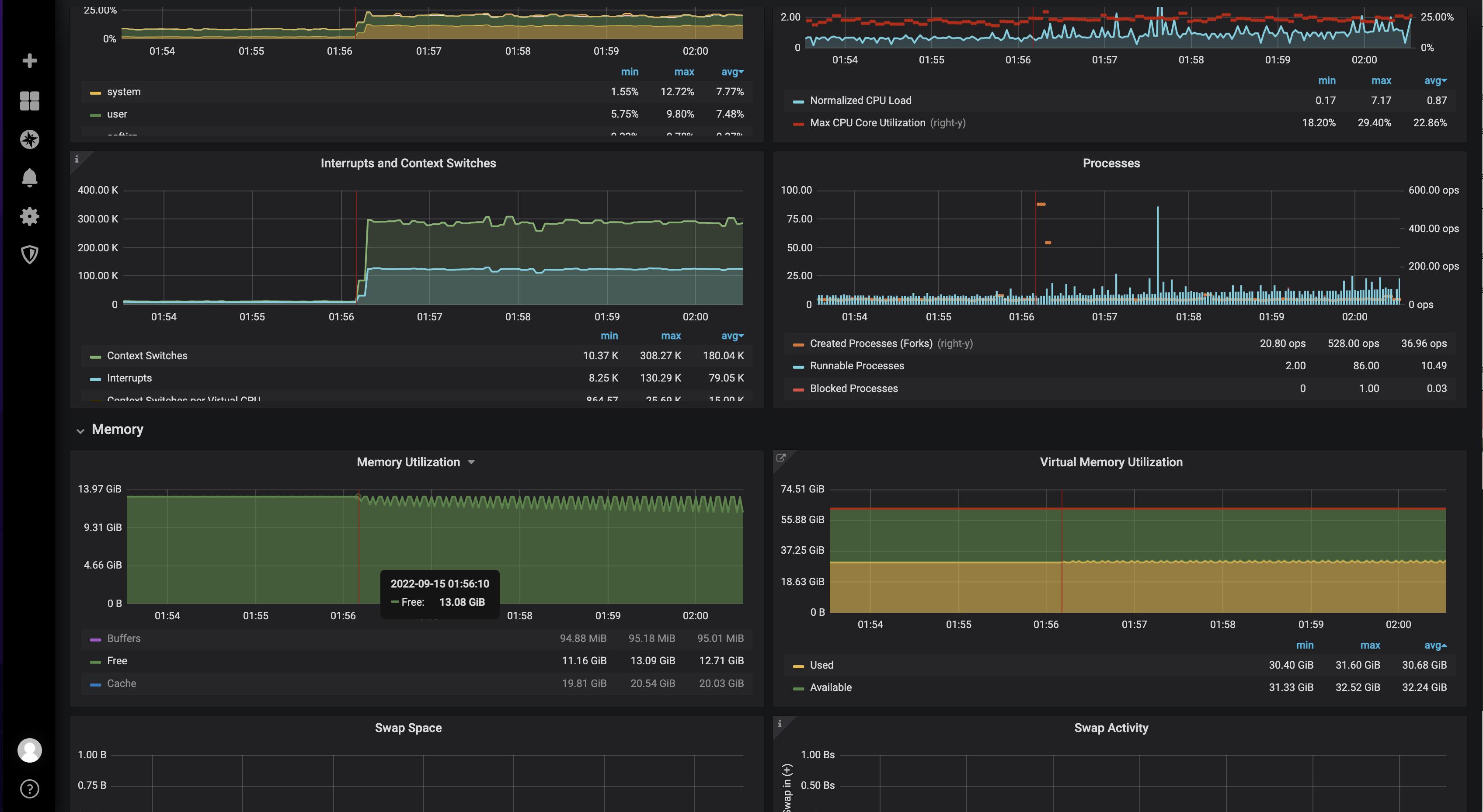

When we test with sustained load on MongoDB, latencies suddenly start spiking and the instance goes into a bad state. This happens at ~5k read qps and 50 write qps (these are get-by-primary-key queries, so the access pattern is certainly not the issue). The active data set for the read queries is under 1 GB, and the WiredTiger cache size is more than 30 GB. The same question has also been asked on

a. Can someone please help me understand when and how MongoDB forks a child process?

b. Can we limit the rate at which child processes are created?

c. Is there any documentation on MongoDB process management?

d. Is this forking a cause of the problem or a side effect of some other issue?

In our MongoDB config we have set `processManagement.fork: true`, if that matters.

Apparently, as per this question, there is no way to limit the number of child processes either.

Answer:

There are many possible reasons for this. I am answering based on what happened in our case, but parts of this answer can also help diagnose other issues.

Summary: there is a bug in the oplog applier caused by an issue in the Linux libc library. MongoDB has shipped a workaround, but that fix was not in the MongoDB version we were using.

This bug is well documented here. You can upgrade your MongoDB version to get the fix.

a. Can someone please help me understand when and how MongoDB forks a child process?

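Every new connection ends up creating a new thread and a new file descriptor (for its socket). Strictly speaking these are not separate OS processes: by default `mongod` serves each incoming connection on its own thread, and tools such as `htop` can list each thread as if it were a separate process.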
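To check whether the growing entries track connections, here is a minimal Linux-only sketch that counts the threads and open file descriptors of a given PID by reading procfs. It assumes a Linux host with `/proc` mounted and permission to read the target process; `count_threads` and `count_open_fds` are hypothetical helper names, not part of MongoDB.

```python
import os


def count_threads(pid: int) -> int:
    """Number of threads in a process: Linux exposes one entry per thread in /proc/<pid>/task."""
    return len(os.listdir(f"/proc/{pid}/task"))


def count_open_fds(pid: int) -> int:
    """Number of open file descriptors: one symlink per fd in /proc/<pid>/fd.

    Reading another user's process usually requires running as that user or root.
    """
    return len(os.listdir(f"/proc/{pid}/fd"))
```

For example, sampling `count_threads(pid)` and `count_open_fds(pid)` every few seconds with the PID from `pidof mongod` should show both numbers climbing together if stuck connections are the cause.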

b. Can we limit the rate at which child processes are created?

We can cap the number of incoming connections with `net.maxIncomingConnections`, as recommended on this page.
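When tuning that cap, it helps to watch how close the server is to it. The sketch below is a hedged illustration: it computes the fraction of the connection budget in use from the `connections` section of a `serverStatus` document, where `current` is the number of open incoming connections and `available` is how many more the server will accept. With PyMongo the document could be fetched via `client.admin.command("serverStatus")`; here a hypothetical sample dict stands in so the code runs without a server, and `connection_pressure` is an illustrative name.

```python
def connection_pressure(server_status: dict) -> float:
    """Fraction of the connection budget in use, from a serverStatus document.

    current + available approximates the effective cap, which reflects
    net.maxIncomingConnections and OS limits such as ulimit -n.
    """
    conns = server_status["connections"]
    current = conns["current"]
    available = conns["available"]
    total = current + available
    return current / total if total else 0.0


# Hypothetical sample values, for illustration only (not from the original post).
sample = {"connections": {"current": 800, "available": 200, "totalCreated": 50000}}
print(f"{connection_pressure(sample):.0%} of the connection budget in use")
```

A value that keeps rising toward 100% under steady traffic suggests connections are being opened faster than they are released.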

c. Is there any documentation on MongoDB process management?

d. Is this forking a cause of the problem or a side effect of some other issue?

In my case it was a side effect of this MongoDB bug. Because of the bug in the MongoDB engine (other issues besides ours could have the same effect), some queries never returned. The application therefore opened more connections to serve new requests, and each new connection consumed another file descriptor.
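To get a rough sense of scale for why stalled queries make connections balloon, Little's law says the average number of requests in flight equals throughput times latency. The sketch below applies it to the ~5k read qps from the question; the latency values are hypothetical, and it assumes (as a simplification) that every in-flight request holds its own connection, whereas real drivers pool and cap connections.

```python
def concurrent_requests(throughput_qps: float, latency_s: float) -> float:
    """Little's law: average requests in flight L = arrival rate (lambda) x time in system (W)."""
    return throughput_qps * latency_s


# ~5k read qps comes from the question; the latencies below are hypothetical.
for latency_ms in (2, 50, 1000):
    in_flight = concurrent_requests(5000, latency_ms / 1000)
    print(f"latency {latency_ms:>4} ms -> ~{in_flight:,.0f} requests in flight")
```

At the same request rate, going from a few milliseconds to a full second per query multiplies the connections needed by several hundred times, which matches the pattern of connection and file-descriptor growth described above.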