I'm trying to get the most dominant colors from a video. When the video starts playing, I want to draw those colors in real time, for example the 6 most dominant, or 3, etc. I searched a lot, but all the tutorials only detect three colors: red, green and blue. Some detect a few more because they set the values themselves, using HSV ranges to choose which colors to detect. My problem is that it's a video, so I won't know the ranges in advance.

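```python
import cv2 as cv
from matplotlib import pyplot as plt

vid = cv.VideoCapture(0)  # default webcam; pass a file path instead to read a video

while True:
    # Capture the video frame by frame
    ret, frame = vid.read()
    if not ret:
        break

    rgb_color = cv.cvtColor(frame, cv.COLOR_BGR2RGB)
    height, width, channel = rgb_color.shape

    # Histogram of channel 0 only (blue, because OpenCV frames are BGR)
    histogram = cv.calcHist([frame], [0], None, [256], [0, 256])

    # cv.imshow cannot display a matplotlib figure, so redraw it with pyplot
    plt.cla()
    plt.plot(histogram)
    plt.pause(0.001)

vid.release()
```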

For now it just opens the webcam and shows the histogram.
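A histogram of a single channel cannot tell you which colors dominate, because it ignores the other two channels. One way to extend the histogram idea to full colors without hand-picked HSV ranges is to quantize each channel into a few bins, count how many pixels fall into each joint (B, G, R) bin, and keep the most frequent bins. The sketch below is a minimal NumPy version under stated assumptions: the function name `dominant_colors_by_histogram` is hypothetical, the input is an 8-bit BGR frame, and 8 levels per channel is an arbitrary choice.

```python
import numpy as np


def dominant_colors_by_histogram(image, n_colors=6, levels=8):
    """Return the n_colors most frequent quantized BGR colors and their pixel fractions.

    Hypothetical helper. `image` is assumed to be an 8-bit BGR frame, and
    `levels` should divide 256 (e.g. 4, 8, 16) so every value maps to a valid bin.
    """
    step = 256 // levels
    # Map each 0..255 channel value to a bin index 0..levels-1
    bins = (image.reshape(-1, 3) // step).astype(np.int64)
    # Combine the three bin indices into one cell id in 0..levels**3 - 1
    cell_ids = (bins[:, 0] * levels + bins[:, 1]) * levels + bins[:, 2]
    counts = np.bincount(cell_ids, minlength=levels ** 3)
    top = np.argsort(-counts)[:n_colors]
    top = top[counts[top] > 0]  # drop empty cells when the frame has few colors
    # Turn each cell id back into the BGR value at the center of its bin
    b, rem = np.divmod(top, levels * levels)
    g, r = np.divmod(rem, levels)
    colors = np.stack([b, g, r], axis=1) * step + step // 2
    fractions = counts[top] / cell_ids.size
    return colors.astype(np.uint8), fractions
```

Inside the loop this would be called as `colors, fractions = dominant_colors_by_histogram(frame, n_colors=6)`. Coarse bins keep it fast, but a dominant color that straddles a bin edge gets split in two, which is one reason the clustering approach in the answer below can work better.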

Answer:

You can try k-means clustering to get the dominant colors:

- Convert the image to a list of pixels with `.reshape(-1, 3)`
- Cluster the pixels using k-means
- Sort the clusters from largest to smallest
- Use each cluster center as a color

Code:

```python
import cv2
import numpy as np

cap = cv2.VideoCapture("BigBuckBunny.mp4")
n_clusters = 5

while True:
    status, image = cap.read()
    if not status:
        break

    # to reduce complexity resize the image
    data = cv2.resize(image, (100, 100)).reshape(-1, 3)

    criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 10, 1.0)
    flags = cv2.KMEANS_RANDOM_CENTERS
    compactness, labels, centers = cv2.kmeans(data.astype(np.float32), n_clusters, None, criteria, 10, flags)

    # Number of pixels in each cluster, used to sort largest to smallest
    cluster_sizes = np.bincount(labels.flatten(), minlength=n_clusters)

    palette = []
    for cluster_idx in np.argsort(-cluster_sizes):
        # One frame-sized swatch filled with the cluster's center color
        palette.append(np.full((image.shape[0], image.shape[1], 3), fill_value=centers[cluster_idx].astype(int), dtype=np.uint8))
    palette = np.hstack(palette)

    # Shrink the swatch strip to the frame width and stack it under the frame
    sf = image.shape[1] / palette.shape[1]
    out = np.vstack([image, cv2.resize(palette, (0, 0), fx=sf, fy=sf)])

    cv2.imshow("dominant_colors", out)
    # Press q to stop early
    if cv2.waitKey(1) & 0xFF == ord("q"):
        break

cap.release()
cv2.destroyAllWindows()
```

You may also consider other distance measures and color spaces. For example, the Euclidean (L2) distance in the CIELAB color space reflects how people perceive color similarity better than the same distance in RGB/BGR.

https://en.wikipedia.org/wiki/CIELAB_color_space#Perceptual_differences

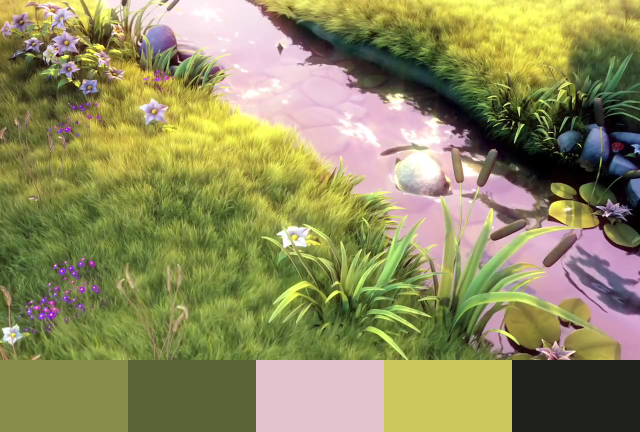

Images taken from the video "Big Buck Bunny": https://peach.blender.org/