I am going through Matillion Academy (Building a Data Warehouse). There is a slide deck to follow online and I am running my own instance of Matillion to recreate the building of the warehouse. My Matillion is on Azure, as is my Snowflake database. The training is AWS-based, but gives information about the adjustments needed for Azure or GS.

One of the steps shows how to Load data from blob storage. It is S3 based. For Azure different components need to be used (as the S3 ones don't exist there), and data needs to be loaded from azure storage instead of S3 storage. It also explains that for Snowflake on Azure yet another component needs to be used.

I have created a Stage in Snowflake:

CREATE STAGE "onlinemtlntrainingazure_flights"

URL='azure://onlinemtlntrainingazure.blob.core.windows.net/online-mtln-training-azure-flights'

The stage shows in Snowflake (external stage) and in Matillion (when using 'manage stages' on the database). The code is taken from the json file I imported to create the job to do this (see first step below).

I have created the target table in my database. It is accessible and visible in Matillion IDE.

The adjusted component I am to use is 'Azure Blob Storage Load'. According to the documentation, I will need:

For Snowflake on Azure: Create a Stage in Snowflake:

You should create a Stage in Snowflake which will be pointing to the public data we provide. Please, find below the .json file containing the job that will help you to do this. Don't forget to change the SQL Script for pointing to your own schema

After Creating the Stage in Snowflake:

You should use the 'Create Table' and the 'Azure Blob Storage Load' components individually as the 'Azure Blob Load Generator' won't let you to select the Stage previously created. We have attached below the Create Table metadata to save you some time.

'Azure Blob Storage Load' Settings:

Stage: onlinemtlntrainingazure_flights Pattern: training_azure_flights_2016.gz Target Table: training_flights Record Delimiter: 0x0a Skip Header: 1

The source data on Azure is located here:

Azure Blob Container (with flights data)

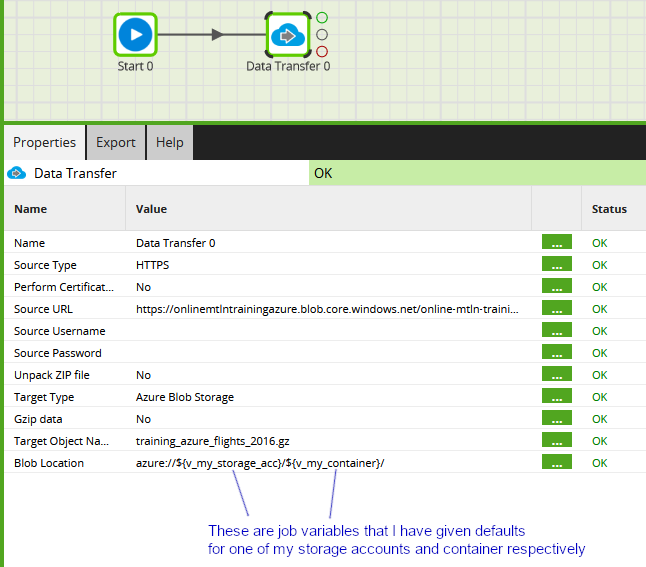

You already have the https:// source URLs for the three files, so:

- Set the source type to HTTPS (no username or password is needed)

- Add the source URL

- Set the target type to Azure Blob Storage

- In the example I used two variables, with defaults set to my storage account and container name

- Repeat for all three files

After running the Data Transfer three times, you will then be able to proceed with the Azure Blob Storage Load Generator, reading from your own copies of the files.