I am a MSc student looking for some help with my field data.

I have a dataset of atmospheric parameters where the gas sensors stored data at 5 second intervals. I connected a soil gas chamber - a bucket that when placed over the soil it is taking a flux measurement (concentration/time to get a rate). It had to be 'purged' out between measurements to clear the gas sensors before the next measurement to occur.

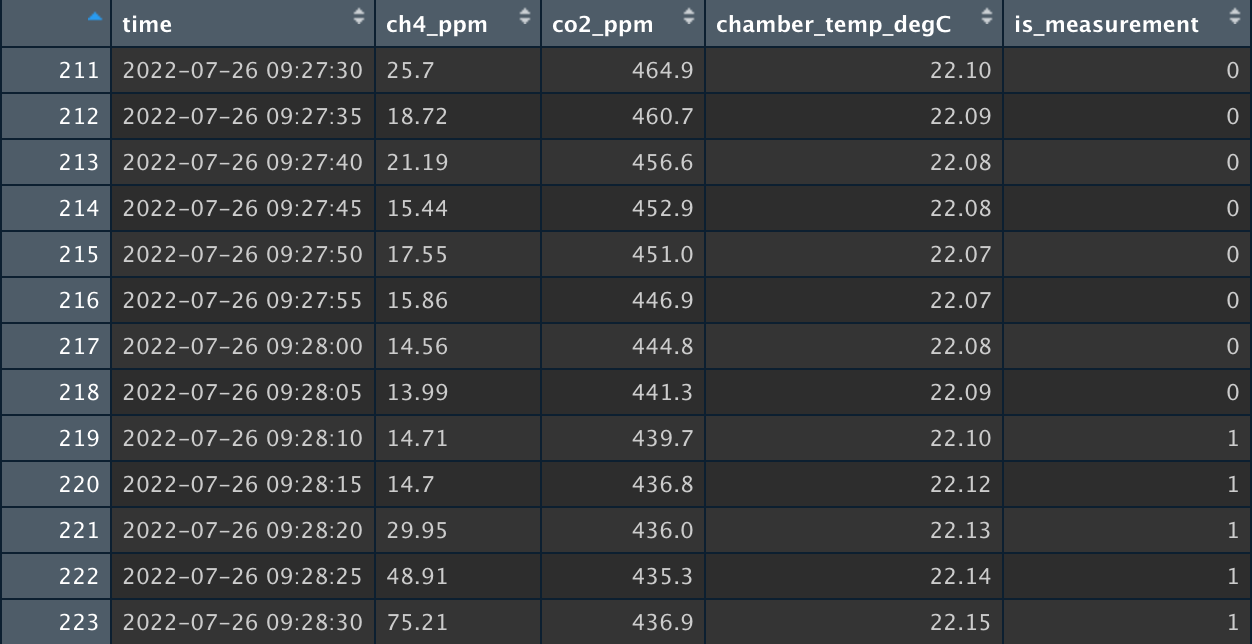

I used a 0/1 switch on the gas sensor box with a column in the dataset to indicate when I was taking a measurement, where 0 == no measurement (chamber open), 1 == measurement occurring (chamber closed). I attached an example of my dataset.

I timed these at 3 minutes per measurement, so I have start and end times but they are only accurate to the minute and the 0/1 column is accurate to the second. So I am looking to use the 0/1 to group the measurements

I'm looking to group the rows in each measurement using the 0/1 is_measurement column, and give each measurement an ID. I have some pseudocode of what I was thinking to do, using a loop to iterate through:

> if is_measurement == 0,

then do nothing; move to the next row,

> if is measurement == 1 & previous row == 0,

add following rows that == 1 to new measurement group,

> if is_measurement == 0 & last row is_measurement == 1,

finish the measurement group and go to next row and repeat

For the result, I would like a column including a 'measurement_id' in each row of data to show which measurement the row of data belongs. Ex: the first measurement, measurement_id = 1 in all rows, the second = 2.... where there is no measurement (is_measurement == 0), measurement_id == 0.

Thank you in advance!!

structure(list(time = c("2022-07-26 09:10:00", "2022-07-26 09:10:05", "2022-07-26 09:10:10", "2022-07-26 09:10:15", "2022-07-26 09:10:20", "2022-07-26 09:10:25", "2022-07-26 09:10:30", "2022-07-26 09:10:35", "2022-07-26 09:10:40", "2022-07-26 09:10:45", "2022-07-26 09:10:50", "2022-07-26 09:10:55", "2022-07-26 09:11:00", "2022-07-26 09:11:05", "2022-07-26 09:11:10", "2022-07-26 09:11:15", "2022-07-26 09:11:20", "2022-07-26 09:11:25", "2022-07-26 09:11:30", "2022-07-26 09:11:35", "2022-07-26 09:11:40", "2022-07-26 09:11:45", "2022-07-26 09:11:50", "2022-07-26 09:11:55", "2022-07-26 09:12:00", "2022-07-26 09:12:05", "2022-07-26 09:12:10", "2022-07-26 09:12:15", "2022-07-26 09:12:20", "2022-07-26 09:12:25", "2022-07-26 09:12:30", "2022-07-26 09:12:35", "2022-07-26 09:12:40", "2022-07-26 09:12:45", "2022-07-26 09:12:50", "2022-07-26 09:12:55", "2022-07-26 09:13:00", "2022-07-26 09:13:05", "2022-07-26 09:13:10", "2022-07-26 09:13:15", "2022-07-26 09:13:20", "2022-07-26 09:13:25", "2022-07-26 09:13:30", "2022-07-26 09:13:35", "2022-07-26 09:13:40", "2022-07-26 09:13:45", "2022-07-26 09:13:50", "2022-07-26 09:13:55", "2022-07-26 09:14:00", "2022-07-26 09:14:05", "2022-07-26 09:14:10", "2022-07-26 09:14:15", "2022-07-26 09:14:20", "2022-07-26 09:14:25", "2022-07-26 09:14:30", "2022-07-26 09:14:35", "2022-07-26 09:14:40", "2022-07-26 09:14:45", "2022-07-26 09:14:50", "2022-07-26 09:14:55", "2022-07-26 09:15:00"), ch4_ppm = c("2.102", "2.142", "2.16", "2.2", "2.366", "2.292", "2.464", "2.818", "2.614", "1.974", "2.314", "1.906", "2.252", "2.278", "1.9", "2.152", "2.818", "10.3", "22.95", "28.3", "27.75", "36.32", "51.63", "54.56", "92", "121.5", "127.6", "193.7", "189.3", "279.2", "323.2", "368.6", "426.1", "485.7", "545.7", "492.8", "548.1", "751.6", "820", "892", "959", "1018", "1080", "1152", "1214", "MAX", "MAX", "MAX", "MAX", "MAX", "MAX", "MAX", "MAX", "MAX", "MAX", "MAX", "MAX", "MAX", "MAX", "MAX", "MAX"), co2_ppm = c(419.1, 419.8,

420.1, 419.9, 420.7, 419.7, 420.6, 420.2, 420.7, 419.7, 419.8,

419.2, 421.1, 425.8, 432.4, 442.2, 452.3, 463.2, 472.4, 482.3, 491, 500.3, 509.1, 517.7, 526.6, 530.3, 534.7, 536.1, 539, 542.5,

549.8, 557.7, 570.5, 584.4, 602.6, 622.9, 648.3, 674.4, 705.5,

736.2, 772.3, 807, 846, 883, 927, 967, 1010, 1049, 1094, 1135, 1181, 1226, 1268, 1313, 1359, 1403, 1445, 1490, 1534, 1576, 1616 ), chamber_temp_degC = c(20.9, 20.89, 20.88, 20.89, 20.88, 20.88,

20.88, 20.87, 20.86, 20.86, 20.86, 20.87, 20.86, 20.86, 20.85,

20.84, 20.84, 20.84, 20.84, 20.85, 20.85, 20.86, 20.87, 20.88,

20.89, 20.9, 20.91, 20.91, 20.93, 20.94, 20.96, 20.96, 20.97,

20.98, 20.99, 20.99, 21, 21.01, 21.02, 21.03, 21.03, 21.05, 21.06,

21.06, 21.07, 21.08, 21.09, 21.09, 21.09, 21.1, 21.11, 21.11,

21.12, 21.13, 21.13, 21.14, 21.15, 21.14, 21.12, 21.12, 21.13 ), is_measurement = c(0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0)), row.names = c(NA, 61L), class = "data.frame")

CodePudding user response:

Here's a fairly simple solution in dplyr. We first create a column finding the first measurement in each measurement group (rows where is_measurement == 1 and the previous row is_measurement == 0). We do a cumulative sum of those to count groups, and multiply by is_measurement to 0 out the rows where is_measurement == 0:

library(dplyr)

df %>%

mutate(

is_first_measurement = is_measurement == 1 & lag(is_measurement == 0, default = 0),

measurement_id = cumsum(is_first_measurement) * is_measurement

)