I have a sample Spring Boot/Batch job that I'm assembling by adapting several online examples. It is intended to simulate the generation of an

The record production keeps looping endlessly.

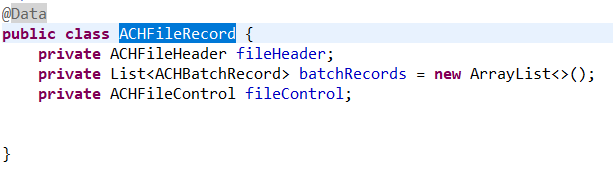

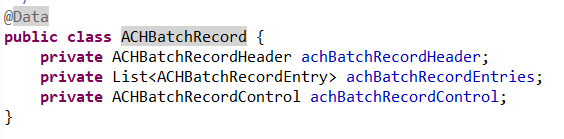

Here are the relevant configurations. They show how the file output is produced by a set of ACH record-type-specific extractors and aggregators, and how these are composed:

/**
 *
 */
package com.***.nacha.producer.config;

import java.util.HashMap;
import java.util.Map;

import javax.annotation.PostConstruct;

import org.springframework.batch.core.Job;
import org.springframework.batch.core.Step;
import org.springframework.batch.core.configuration.annotation.JobBuilderFactory;
import org.springframework.batch.core.configuration.annotation.JobScope;
import org.springframework.batch.core.configuration.annotation.StepBuilderFactory;
import org.springframework.batch.core.launch.support.RunIdIncrementer;
import org.springframework.batch.item.ItemReader;
import org.springframework.batch.item.file.FlatFileItemWriter;
import org.springframework.batch.item.file.builder.FlatFileItemWriterBuilder;
import org.springframework.batch.item.file.transform.BeanWrapperFieldExtractor;
import org.springframework.batch.item.file.transform.FieldExtractor;
import org.springframework.batch.item.file.transform.FormatterLineAggregator;
import org.springframework.batch.item.file.transform.LineAggregator;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.core.io.FileSystemResource;

import com.***.nacha.producer.domain.ACHBatchRecord;
import com.***.nacha.producer.domain.ACHBatchRecordControl;
import com.***.nacha.producer.domain.ACHBatchRecordEntry;
import com.***.nacha.producer.domain.ACHBatchRecordHeader;
import com.***.nacha.producer.domain.ACHFileControl;
import com.***.nacha.producer.domain.ACHFileHeader;
import com.***.nacha.producer.domain.ACHFileRecord;
import com.***.nacha.producer.domain.ACHRecord;

/**
 * @author x123456
 *
 */

@Configuration
public class JobConfiguration {

    @Autowired
    private JobBuilderFactory jobBuilderFactory;

    @Autowired
    private StepBuilderFactory stepBuilderFactory;

    private Map<String, LineAggregator> aggregators = new HashMap<>();

    @PostConstruct
    private void init() {
        aggregators.put("ACHFileHeader", JobConfiguration.this.achFileHeaderAggregator());
        aggregators.put("ACHBatchRecordHeader", JobConfiguration.this.achBatchHeaderAggregator());
        aggregators.put("ACHBatchRecordEntry", JobConfiguration.this.achBatchEntryAggregator());
        aggregators.put("ACHBatchRecordControl", JobConfiguration.this.achBatchControlAggregator());
        aggregators.put("ACHFileControl", JobConfiguration.this.achFileControlAggregator());
    }

    @Bean
    public LineAggregator<ACHFileRecord> achLineAggregator() {
        return new LineAggregator<ACHFileRecord>() {

            private String aggregate(ACHRecord record) {
                return aggregate(record, false);
            }

            @SuppressWarnings("unchecked")
            private String aggregate(ACHRecord record, boolean isLastRecord) {
                StringBuffer sb = new StringBuffer();
                sb.append(JobConfiguration.this.aggregators.get(record.getClass().getSimpleName()).aggregate(record));
                if (!isLastRecord) {
                    sb.append(System.getProperty("line.separator"));
                }
                return sb.toString();
            }

            @Override
            public String aggregate(ACHFileRecord item) {
                if (item == null) {
                    return "";
                }
                StringBuffer sb = new StringBuffer();
                sb.append(this.aggregate(item.getFileHeader()));
                for (ACHBatchRecord batch : item.getBatchRecords()) {
                    sb.append(this.aggregate(batch.getAchBatchRecordHeader()));
                    for (ACHBatchRecordEntry batchEntry : batch.getAchBatchRecordEntries()) {
                        sb.append(this.aggregate(batchEntry));
                    }
                    sb.append(this.aggregate(batch.getAchBatchRecordControl()));
                }
                sb.append(this.aggregate(item.getFileControl(), true));
                return sb.toString();
            }
        };
    }

    @Bean
    //@Scope("prototype")
    public FormatterLineAggregator<ACHFileHeader> achFileHeaderAggregator() {
        FormatterLineAggregator<ACHFileHeader> lineAggregator = new FormatterLineAggregator<>();
        lineAggregator.setFieldExtractor(achFileHeaderWrapperFieldExtractor());
        lineAggregator.setFormat("%1.1s%2.2s.10s.10s%6.6s%4.4s%1.1sd%2.2s%1.1s%-23.23s%-23.23s%8.8s");
        return lineAggregator;
    }

    @Bean
    //@Scope("prototype")
    public FieldExtractor<ACHFileHeader> achFileHeaderWrapperFieldExtractor() {
        BeanWrapperFieldExtractor<ACHFileHeader> fieldExtractor = new BeanWrapperFieldExtractor<>();
        fieldExtractor.setNames(new String[] {
                "recordTypeCode", "priorityCode", "immediateDestination", "immediateOrigin", "fileCreationDate",
                "fileCreationTime", "fileIdModifier", "recordSize", "blockingFactor", "formatCode",
                "immediateDestinationName", "immediateOriginName", "referenceCode" });
        return fieldExtractor;
    }

    //--

    @Bean
    //@Scope("prototype")
    public FormatterLineAggregator<ACHBatchRecordHeader> achBatchHeaderAggregator() {
        FormatterLineAggregator<ACHBatchRecordHeader> lineAggregator = new FormatterLineAggregator<>();
        lineAggregator.setFieldExtractor(achBatchHeaderWrapperFieldExtractor());
        lineAggregator.setFormat("%1.1s%3.3s%-16.16s%-20.20sd%3.3s.10s%6.6s%6.6s%3.3s%1.1sdd");
        return lineAggregator;
    }

    @Bean
    //@Scope("prototype")
    public FieldExtractor<ACHBatchRecordHeader> achBatchHeaderWrapperFieldExtractor() {
        BeanWrapperFieldExtractor<ACHBatchRecordHeader> fieldExtractor = new BeanWrapperFieldExtractor<>();
        fieldExtractor.setNames(new String[] {
                "recordTypeCode", "serviceClassCode", "companyName", "companyDiscretionaryData", "companyId",
                "standardEntryClassCode", "companyEntryDescription", "companyDescriptiveDate", "effectiveEntryDate",
                "settlementDate", "originatorStatusCode", "originationDfiId", "batchNumber" });
        return fieldExtractor;
    }

    //--

    @Bean
    //@Scope("prototype")
    public FormatterLineAggregator<ACHBatchRecordEntry> achBatchEntryAggregator() {
        FormatterLineAggregator<ACHBatchRecordEntry> lineAggregator = new FormatterLineAggregator<>();
        lineAggregator.setFieldExtractor(achBatchEntryWrapperFieldExtractor());
        lineAggregator.setFormat("%1.1s-d%-17d0d%-15.15s%-22.22s%2.2s%1.1s5d");
        return lineAggregator;
    }

    @Bean
    //@Scope("prototype")
    public FieldExtractor<ACHBatchRecordEntry> achBatchEntryWrapperFieldExtractor() {
        BeanWrapperFieldExtractor<ACHBatchRecordEntry> fieldExtractor = new BeanWrapperFieldExtractor<>();
        fieldExtractor.setNames(new String[] {
                "recordTypeCode", "transactionCode", "receivingDfiId", "checkDigit", "dfiAcctNbr", "amount",
                "individualIdNbr", "individualName", "discretionaryData", "addendaRecordInd", "traceNumber" });
        return fieldExtractor;
    }

    //---

    @Bean
    //@Scope("prototype")
    public FormatterLineAggregator<ACHBatchRecordControl> achBatchControlAggregator() {
        FormatterLineAggregator<ACHBatchRecordControl> lineAggregator = new FormatterLineAggregator<>();
        lineAggregator.setFieldExtractor(achBatchControlWrapperFieldExtractor());
        lineAggregator.setFormat("%1.1s%3.3sd0d2d2dd.19s%6.6sdd");
        return lineAggregator;
    }

    @Bean
    //@Scope("prototype")
    public FieldExtractor<ACHBatchRecordControl> achBatchControlWrapperFieldExtractor() {
        BeanWrapperFieldExtractor<ACHBatchRecordControl> fieldExtractor = new BeanWrapperFieldExtractor<>();
        fieldExtractor.setNames(new String[] {
                "recordTypeCode", "serviceClassCode", "entryAddendaCount", "entryHash", "totDebitDollarAmt",
                "totCreditDollarAmt", "companyId", "messageAuthCode", "reserved", "originatingDfiId", "batchNumber" });
        return fieldExtractor;
    }

    //---

    @Bean
    //@Scope("prototype")
    public FormatterLineAggregator<ACHFileControl> achFileControlAggregator() {
        FormatterLineAggregator<ACHFileControl> lineAggregator = new FormatterLineAggregator<>();
        lineAggregator.setFieldExtractor(achFileControlWrapperFieldExtractor());
        lineAggregator.setFormat("%1.1sddd0d2d2d%-39s");
        return lineAggregator;
    }

    @Bean
    //@Scope("prototype")
    public FieldExtractor<ACHFileControl> achFileControlWrapperFieldExtractor() {
        BeanWrapperFieldExtractor<ACHFileControl> fieldExtractor = new BeanWrapperFieldExtractor<>();
        fieldExtractor.setNames(new String[] {
                "recordTypeCode", "batchCount", "blockCount", "entryAddendaCount", "entryHash", "totDebitDollarAmt",
                "totCreditDollarAmt", "reserved" });
        return fieldExtractor;
    }

    //=======

    @Bean
    @JobScope
    public FlatFileItemWriter<ACHFileRecord> achReportWriter() throws Exception {
        String outFilePath = "result.out";
        return new FlatFileItemWriterBuilder<ACHFileRecord>()
                .name("ACHrecordWriter")
                .lineAggregator(achLineAggregator())
                .resource(new FileSystemResource(outFilePath))
                .shouldDeleteIfExists(true)
                .shouldDeleteIfEmpty(true)
                .build();
    }

    @Bean
    //@StepScope
    public ItemReader<ACHFileRecord> itemReader() {
        return new MockACHFileRecordReader();
    }

    @Bean
    public Step step() throws Exception {
        return this.stepBuilderFactory.get("step1")
                .<ACHFileRecord, ACHFileRecord>chunk(1)
                .reader(itemReader())
                .writer(achReportWriter())
                .build();
    }

    @Bean
    public Job job() throws Exception {
        return this.jobBuilderFactory.get("job")
                .incrementer(new RunIdIncrementer())
                .flow(step())
                .end()
                .build();
    }

}

The main class just enables batch processing:

package com.***.nacha.producer;

import org.springframework.batch.core.Job;
import org.springframework.batch.core.Step;
import org.springframework.batch.core.StepContribution;
import org.springframework.batch.core.configuration.annotation.EnableBatchProcessing;
import org.springframework.batch.core.configuration.annotation.JobBuilderFactory;
import org.springframework.batch.core.configuration.annotation.StepBuilderFactory;
import org.springframework.batch.core.scope.context.ChunkContext;
import org.springframework.batch.core.step.tasklet.Tasklet;
import org.springframework.batch.repeat.RepeatStatus;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.context.annotation.Bean;
import org.springframework.lang.Nullable;

@EnableBatchProcessing
@SpringBootApplication
public class NachaFileProductionApplication {

    public static void main(String[] args) {
        SpringApplication.run(NachaFileProductionApplication.class, args);
    }

}

Circular references between beans are allowed through a setting in application.properties:

spring.main.allow-circular-references=true

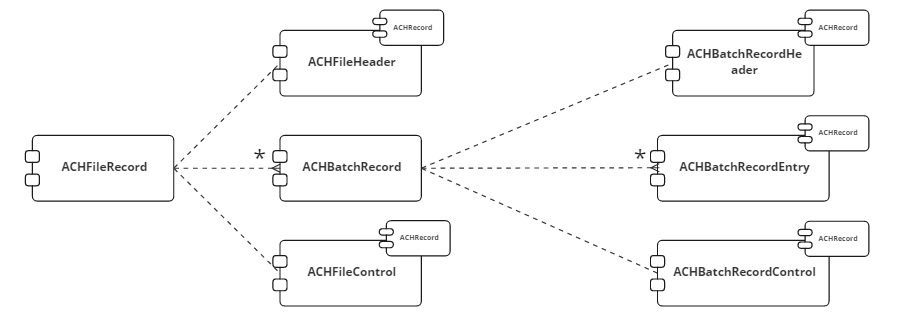

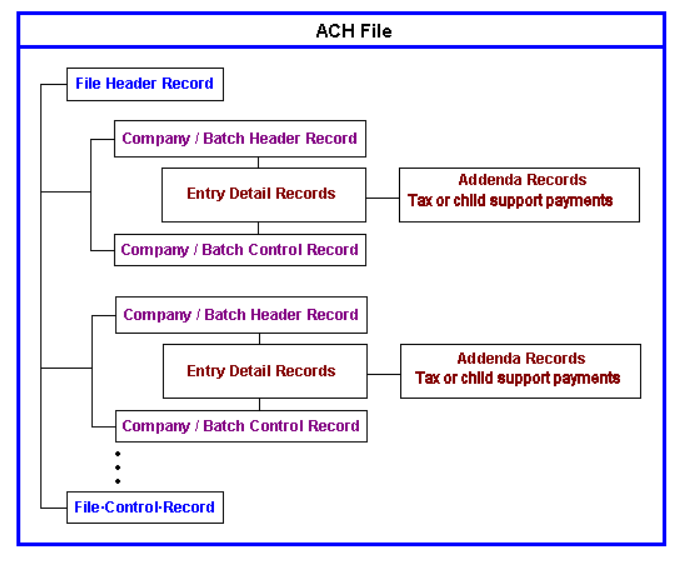

and is succinctly captured by the following diagram:

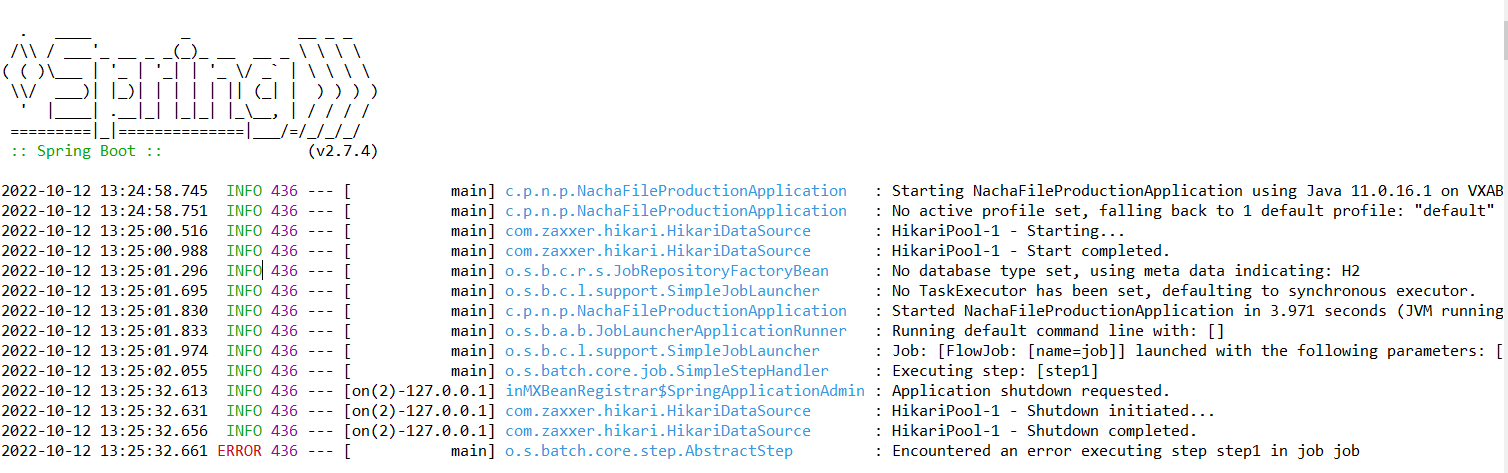

Here's the log prior to me forcing the job to end:

Here are the first few lines of the resulting output file (it is expected to be much smaller and not to grow endlessly):

101

148443910

50331482322101213258094101Hodkiewicz-Boehm Bank Ankunding Inc National

5220Ritchie, Littel 1393007334PPD PAYROLL000000221012 1959495220000000

63452521467866465989 0000003935g18HIT965i7ETGWZ62tj1297ZxO8C68X4TRi9P30352152121328313

822066114085109536255975519170430576556960821393007334 959495220000000

9000001107580000000014851122706212626088862377085717968

101 227431075

21169773022101213253094101Gottlieb and Sons Bank Rau-Stoltenberg Nationa

5220Tremblay-Jacobi 1393007334CCD PAYROLL000000221012 1072720110000000

65229729587541518704 0000000365WCHFwlZZ6Fy12w42K804xanZbg9EQrECfqe14rU0569490215331309

822585225489033627844945756870343498946581941393007334 072720110000000

9000001643148000000029176494539715791229631730255483996

101 783912452 73446311022101213258094101Lemke LLC Bank Hartmann Group National

5220Osinski-Ankundin 1393007334PPDCHILDSUPPT000000221012 1580365930000000

6232362603079088008083 0000572321mFv70SwjST49Cn61oUmO0RGU1d6GpnF4QOTW4gO0429366510484932

822585780991958244236633184019954769241016981393007334 580365930000000

9000001979589000000034909147632858521223040297024641339

101540403835

7366241242210121325R094101Batz-Cartwright Bank Pagac, Lang and Cruicks

5220McLaughlin LLC 1393007334PPDTAX PAYMNT000000221012 1828735650000000

627763753739133560955 0215295332UHiB89JHp07Vz103Yk7ak9Uu7ZPU7h20T41lT080447310264016579

822524080519712860312177808514396808331431181393007334 828735650000000

9000001609029000000048083134068768951025970076873002811

101 805312607 9550255782210121325P094101Rolfson, Dickens and ThHickle-Nikolaus Nationa

5200Keebler, Beahan 1393007334CCDCHILDSUPPT000000221012 1411796930000000

6291620437707152548913 0000008009lRiDV09ImX56cFpLa9KH3EGm8pL1B7hXaQYGXJ40374388107061717

822547907303258414717623273017128715262420071393007334 411796930000000

9000001576193000000050395047885920832708971525878894430

101

774208747 81011974722101213252094101Hauck and Sons Bank Rutherford, Pouros and

5200Walsh, Kuphal an 1393007334PPDTAX PAYMNT000000221012 1712895590000000

6530996980344306789083232 00000092834z6K97b3oCEauKejv8tBAaDwdEc9247PIBqPucU0150021840389603

822555172665696286497679023639445794463558711393007334 712895590000000

9000001525909000000065651626863895985923314959004431940

101242422683

34477209222101213252094101Wuckert Inc Bank Weissnat Group National

5225Gulgowski-Daniel 1393007334CCDVENDOR PMT000000221012 1107267040000000

634411693383861813994 0000000155PlkhGzhoCKo69rLTVaHZrpk0DplI71HBUmj58490486801505961770

822596698363062230404866793850216599405859231393007334 107267040000000

9000001449423000000071233345724319150083033332491192973

101 737231663 06274701222101213250094101Kuhlman, D'Amore and SpKessler, Dare and Green

5225Bradtke-Bosco 1393007334PPDVENDOR PMT000000221012 1484640460000000

62296396681813672697 0000000299Yxfl1h7J19O4d5X64Z9ZFX1z104ooKtm2VpEx680274086060693183

822048481870472334414769663843886422630313501393007334 484640460000000

9000001337383000000087376356684923946485931889087698511

101

186612825

9468555472210121325B094101Okuneva Inc Bank Sipes-Durgan National B

5220Corkery, Keeling 1393007334PPD PAYROLL000000221012 1809674600000000

627715435715184430196 0000670806dBo50VgUra2qQDr87nyKtxIrttQVL54xPZ3HCmO0258779859548925

822575961454518765508879931641082490356796751393007334 809674600000000

9000001773785000000097303376120373730689833505074581396

101

643404173

I'm not sure what's causing this behavior. Could it have to do with bean scoping? Perhaps someone can put me on the right track to resolving this. Also, is there a better Spring Batch-specific way to produce such fixed-length, mainframe-style records?

Much appreciated!

CodePudding user response:

The ItemReader should return null when no more items remain to be processed; otherwise the Step will continue forever. So after the read() method has returned the item the first time, it should return null the next time it is called.
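
As a rough illustration of that contract, here is a minimal sketch of a reader that hands out its items once and then returns null. The names `BoundedReaderSketch` and `BoundedReader` are hypothetical, and the nested `ItemReader` interface is only a stand-in for `org.springframework.batch.item.ItemReader` so that the example compiles without Spring on the classpath. `MockACHFileRecordReader` is not shown in the question, so this is not its actual code, just the shape it would probably need.

```java
import java.util.Iterator;
import java.util.List;

public class BoundedReaderSketch {

    /** Stand-in (assumption) for org.springframework.batch.item.ItemReader. */
    interface ItemReader<T> {
        T read() throws Exception;
    }

    /** Hypothetical reader: returns each item once, then null. */
    static class BoundedReader<T> implements ItemReader<T> {
        private final Iterator<T> items;

        BoundedReader(List<T> items) {
            this.items = items.iterator();
        }

        @Override
        public T read() {
            // null signals that the input is exhausted, which lets the step finish
            return items.hasNext() ? items.next() : null;
        }
    }

    public static void main(String[] args) throws Exception {
        ItemReader<String> reader = new BoundedReader<>(List.of("file-record-1"));
        int count = 0;
        // Mirrors the way a chunk-oriented step keeps calling read() until it gets null
        while (reader.read() != null) {
            count++;
        }
        System.out.println("items read: " + count); // prints "items read: 1"
    }
}
```

In the real job the class would implement Spring Batch's own `ItemReader<ACHFileRecord>` instead of the stand-in. Spring Batch also ships `ListItemReader`, which behaves this way for a fixed list and may be enough for a mock.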