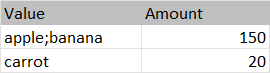

I have a dataframe df1 like this:

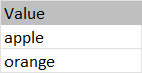

and another dataframe df2 like this:

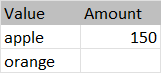

How could I join df2 with df1 using left join so that my output would look like the following?

CodePudding user response:

You can split values in df1 and explode them before the join.

df3 = df1.withColumn('Value', F.explode(F.split('Value', ';')))

df4 = df2.join(df3, 'Value', 'left')

Full example:

from pyspark.sql import functions as F

df1 = spark.createDataFrame([('apple;banana', 150), ('carrot', 20)], ['Value', 'Amount'])

df2 = spark.createDataFrame([('apple',), ('orange',)], ['Value'])

df3 = df1.withColumn('Value', F.explode(F.split('Value', ';')))

df4 = df2.join(df3, 'Value', 'left')

df4.show()

# ------ ------

# | Value|Amount|

# ------ ------

# | apple| 150|

# |orange| null|

# ------ ------

CodePudding user response:

Use SQL "like" operator in left outer join. Try this

//Input

spark.sql(" select 'apple;banana' value, 150 amount union all select 'carrot', 50 ").createOrReplaceTempView("df1")

spark.sql(" select 'apple' value union all select 'orange' ").createOrReplaceTempView("df2")

//Output

spark.sql("""

select a.value, b.amount

from df2 a

left join df1 b

on ';'||b.value||';' like '%;'||a.value||';%'

""").show(false)

------ ------

|value |amount|

------ ------

|apple |150 |

|orange|null |

------ ------