System Information:

OS: Ubuntu 20.04 LTS

System: 80 GB RAM, 1 TB SSD, i7-12700k

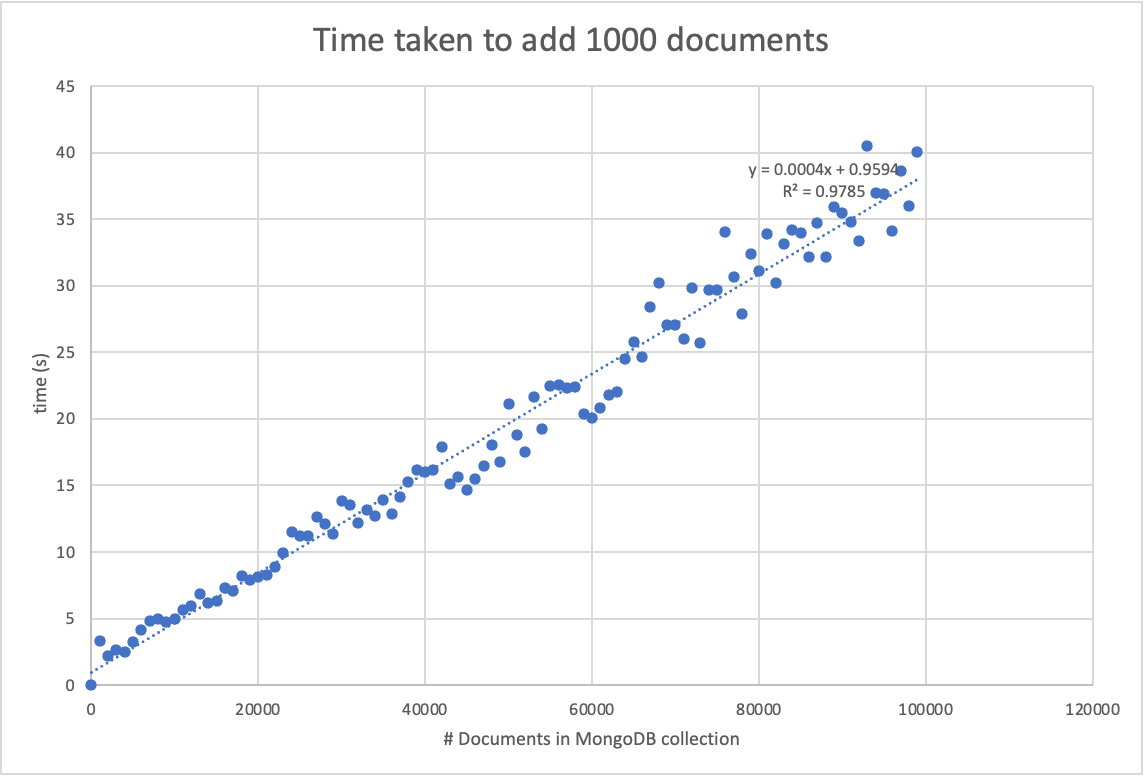

The documents in this collection are on average 16KB, and there are 500K documents in this collection. I noticed that as the collection grows larger, the time taken to insert documents also grows larger.

In what ways could I improve the speed of writes?

It is taking 10 Hours to insert 150k documents. Which is around what the graph predicted when we integrate the line:

def f(num):

return 0.0004*num 0.9594

sum=0

for i in range(500,650):

sum =f(i*1000)

>> sum/3600

>> 9.61497

Potential upgrades in my mind:

- Use the C mongo engine for writes

- Allocate more RAM to Mongod

Logs

iotop showing mongod using < 1% of the IO capacity with write speeds around 10-20 KB/s

htop showing the mongod is only using ~ 16GB of RAM \

Disks showing that some 300GB of SSD is free

EDIT:

Psudo code:

docs=[...]

for doc in docs:

doc["last_updated"]=str(datetime.now())

doc_from_db = collection.find_one({"key":doc["key"]})

new_dict = minify(doc)

if doc_from_db is None:

collection.insert_one(new_dict)

else:

collection.replace_one({"key":doc["key"]},new_dict,upsert=true)

CodePudding user response:

When it comes to writes there are a few things to consider, the most impactful one which I'm assuming is the issue here is index size / index complexity / unique indexes.

It's hard to give exact advice without more information so I'll detail the most common bottlenecks when it comes to writes from my experience.

As mentioned indexes, if you have too many indexes. unique indexes. or indexes on very large arrays (and the document you insert have large arrays) these all heavily impact insert performance. This behavior also correlates with the graph you provided as inserting becomes worse and worse the larger the index gets. There is no "real" solution to this issue, you should reconsider which indexes and which indexes cause the bottleneck (focus on unique /array indexes). For example if you have an index that enforces uniqueness then drop it and enforce uniqueness at the application level instead.

write concern and replication lag, if you are using a replica set and you require a majority write concern this can definitely cause issues due to the sync lag that happens and grows, usually this is a side affect of a different issues, for example because of #1 (large indexes) the insert takes too long which causes sync lag which delays even further the write concern.

unoptimized hardware (Assuming you're hosted on cloud), you'd be surprised how much you can optimize write performance by just changing the disk type and increasing IOPS. this will give immediate performance. obviously at the cost of $$$.

no code was provided so I would also check that, if it's a for loop then obviously you can parallelize the logic.

I recommend you test the same insert logic on an indexless collection to pinpoint the problem, i'd be glad to help think of other issues/solutions once you can provide more information.

EDIT:

Here is an example of how to avoid the for loop issue by using bulkWrite instead in python using pymongo.

from pymongo import InsertOne, DeleteOne, ReplaceOne

from pymongo.errors import BulkWriteError

docs = [... input documents ]

requests = []

for doc in docs:

requests.append({

ReplaceOne({"docId": doc["docID"]}, doc, { upsert: True})

})

try:

db.docs.bulk_write(requests, ordered=False)

except BulkWriteError as bwe:

pprint(bwe.details)

CodePudding user response:

You can enable profiling in Database, but according to previous comments and your code, just python code profiling may be enough, for example can you show the output of similar example? https://github.com/Tornike-Skhulukhia/cprofiler_python_example/blob/main/demo.py

But before that, please check that you have index on field that you are doing searches against using find_one command in current code, otherwise database may need to do full collection scan to just find 1 document, meaning if you have more documents, this time will also increase a lot.