I'm trying to send logs from my Databricks cluster to a Loki instance using the Tjahzi log4j2 appender (log4j2-appender-nodep).

Init script:

for f in /databricks/spark/dbconf/log4j/executor/log4j2.xml /databricks/spark/dbconf/log4j/driver/log4j2.xml; do
  sed -i 's/<Configuration /<Configuration monitorInterval="30" /' "$f"
  sed -i 's/packages="com.databricks.logging"/packages="pl.tkowalcz.tjahzi.log4j2, com.databricks.logging"/' "$f"
  sed -i 's~<Appenders>~<Appenders>\n <Loki name="Loki" bufferSizeMegabytes="64">\n <host>loki.atops.abc.com</host>\n <port>3100</port>\n\n <ThresholdFilter level="ALL"/>\n <PatternLayout>\n <Pattern>%X{tid} [%t] %d{MM-dd HH:mm:ss.SSS} %5p %c{1} - %m%n%exception{full}</Pattern>\n </PatternLayout>\n\n <Header name="X-Scope-OrgID" value="ABC"/>\n <Label name="server" value="Databricks"/>\n <Label name="foo" value="bar"/>\n <Label name="system" value="abc"/>\n <LogLevelLabel>log_level</LogLevelLabel>\n </Loki>\n~' "$f"
  sed -i 's/\(<Root.*\)/\1\n <AppenderRef ref="Loki"\/>/' "$f"
done
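Because sed exits with status 0 even when its pattern matches nothing, a change in the stock log4j2.xml could make any of these edits silently do nothing. A minimal check that could run at the end of the loop might look like this; the function name check_loki_patch is hypothetical, and the patterns are simply the strings the sed commands above insert.

```shell
#!/bin/bash
# Sketch: verify that the sed edits actually landed in a log4j2.xml file.
# sed returns 0 even when a pattern matches nothing, so check the result explicitly.
# check_loki_patch is a hypothetical helper name, not part of Databricks or Tjahzi.

check_loki_patch() {
  local f="$1"
  # tjahzi package registered in the <Configuration packages="..."> attribute
  grep -qF 'packages="pl.tkowalcz.tjahzi.log4j2' "$f" || { echo "$f: tjahzi package not registered" >&2; return 1; }
  # Loki appender definition inserted after <Appenders>
  grep -qF '<Loki name="Loki"' "$f" || { echo "$f: <Loki> appender missing" >&2; return 1; }
  # Reference added under <Root ...>
  grep -qF '<AppenderRef ref="Loki"/>' "$f" || { echo "$f: Loki AppenderRef missing" >&2; return 1; }
}
```

It could be called as check_loki_patch "$f" || exit 1 inside the loop. It only confirms that the text was inserted, not that log4j can load the appender class; in this case the dumped driver config shows the edits did land, so this rules out just one failure mode.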

In the cluster's stdout I see the following errors (which I suppose means the jar is not on the classpath, or log4j is looking for the wrong class):

ERROR Error processing element Loki ([Appenders: null]): CLASS_NOT_FOUND

ERROR Unable to locate appender "Loki" for logger config "root"

I also tried adding the class name to the appender spec:

<Loki name="Loki" bufferSizeMegabytes="64">

instead of

<Loki name="Loki" bufferSizeMegabytes="64">

Same error.

Running find /databricks -name '*log4j2-appender-nodep*' -type f on the cluster finds nothing.
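A file-name search can miss a jar that was stored under a different name, so searching jar contents for the appender's package can be more conclusive. The sketch below lists every jar under a directory that contains entries from the pl.tkowalcz.tjahzi.log4j2 package named in the packages attribute; the function name find_tjahzi_jars is hypothetical, and it assumes unzip is installed on the node.

```shell
#!/bin/bash
# Sketch: print every jar under a directory whose contents include the
# pl.tkowalcz.tjahzi.log4j2 package (jars are zip files, so `unzip -l` lists entries).
# find_tjahzi_jars is a hypothetical helper; assumes `unzip` is installed.

find_tjahzi_jars() {
  find "$1" -type f -name '*.jar' 2>/dev/null | while IFS= read -r jar; do
    # Non-zip files or a missing unzip simply produce no match
    if unzip -l "$jar" 2>/dev/null | grep -q 'pl/tkowalcz/tjahzi/log4j2/'; then
      printf '%s\n' "$jar"
    fi
  done
}
```

For example, find_tjahzi_jars /databricks/jars printing nothing means no jar in that directory provides the appender package.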

I also tried downloading the jar file, putting it in DBFS, and installing it as a JAR library (instead of a Maven library):

$ databricks fs cp local/log4j2-appender-nodep-0.9.23.jar dbfs:/Shared/log4j2-appender-nodep-0.9.23.jar

$ databricks libraries install --cluster-id 1-2-345 --jar "dbfs:/Shared/log4j2-appender-nodep-0.9.23.jar"

Same error.

I'm able to post from the cluster to the Loki instance using curl, and I can see this log line in the Grafana UI:

ds=$(date +%s%N) && \
echo $ds && \
curl -v -H "Content-Type: application/json" -XPOST -s "http://loki.atops.abc.com:3100/loki/api/v1/push" --data-raw '{"streams": [{ "stream": { "foo": "bar2", "system": "abc" }, "values": [ [ "'$ds'", "'$ds': testing, testing" ] ] }]}'
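The manual test above does not send the X-Scope-OrgID header that the appender config adds. To make the test closer to what the appender will send, the same push can include that header. This is a sketch that reuses the URL and labels from the curl command and the tenant value ABC from the XML; loki_payload and loki_push are hypothetical helper names, and messages are assumed to be single-line (only backslashes and double quotes are escaped).

```shell
#!/bin/bash
# Sketch: repeat the manual Loki push with the tenant header the appender sends.
# Assumptions: URL and labels from the curl test, tenant "ABC" from the appender's
# <Header name="X-Scope-OrgID">, single-line messages (minimal JSON escaping only).

LOKI_URL="http://loki.atops.abc.com:3100/loki/api/v1/push"

# Build a Loki push body for one log line: $1 = timestamp in ns, $2 = message.
loki_payload() {
  esc=$(printf '%s' "$2" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g')
  printf '{"streams":[{"stream":{"foo":"bar2","system":"abc"},"values":[["%s","%s"]]}]}' "$1" "$esc"
}

# POST the body; -f makes curl exit non-zero on HTTP errors (status >= 400).
loki_push() {
  curl -sS -f -X POST "$LOKI_URL" \
    -H "Content-Type: application/json" \
    -H "X-Scope-OrgID: ABC" \
    --data-raw "$(loki_payload "$1" "$2")"
}
```

For example: ts=$(date +%s%N) && loki_push "$ts" "$ts: testing with tenant header". If this succeeds while the appender still reports CLASS_NOT_FOUND, the network path and tenant are fine and the problem is confined to the classpath.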

This is what the driver config file contains after the init script runs (cat /databricks/spark/dbconf/log4j/driver/log4j2.xml):

<?xml version="1.0" encoding="UTF-8"?><Configuration monitorInterval="30" status="INFO" packages="pl.tkowalcz.tjahzi.log4j2, com.databricks.logging" shutdownHook="disable">
  <Appenders>
    <Loki name="Loki" bufferSizeMegabytes="64">
      <host>loki.atops.abc.com</host>
      <port>3100</port>
      <ThresholdFilter level="ALL"/>
      <PatternLayout>
        <Pattern>%X{tid} [%t] %d{MM-dd HH:mm:ss.SSS} %5p %c{1} - %m%n%exception{full}</Pattern>
      </PatternLayout>
      <Header name="X-Scope-OrgID" value="ABC"/>
      <Label name="server" value="Databricks"/>
      <Label name="foo" value="bar"/>
      <Label name="system" value="abc"/>
      <LogLevelLabel>log_level</LogLevelLabel>
    </Loki>
    <RollingFile name="publicFile.rolling" fileName="logs/log4j-active.log" filePattern="logs/log4j-%d{yyyy-MM-dd-HH}.log.gz" immediateFlush="true" bufferedIO="false" bufferSize="8192" createOnDemand="true">
      <Policies>
        <TimeBasedTriggeringPolicy/>
      </Policies>
      <PatternLayout pattern="%d{yy/MM/dd HH:mm:ss} %p %c{1}: %m%n%ex"/>
    </RollingFile>
    ---snip---
  </Appenders>
  <Loggers>
    <Root level="INFO">
      <AppenderRef ref="Loki"/>
      <AppenderRef ref="publicFile.rolling.rewrite"/>
    </Root>
    <Logger name="privateLog" level="INFO" additivity="false">
      <AppenderRef ref="privateFile.rolling.rewrite"/>
    </Logger>
    ---snip---
  </Loggers>
</Configuration>

Answer:

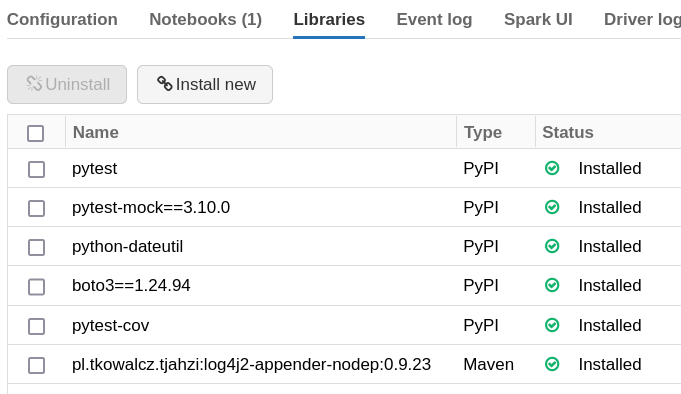

This most likely happens because log4j is initialized when the cluster starts, whereas libraries specified in the Libraries UI are installed only after the cluster has started. As a result, the Loki appender library isn't available when log4j loads its configuration.

The solution is to install the Loki library from the same init script that you use for the log4j configuration: copy the library and its dependencies to the /databricks/jars/ folder (for example from DBFS, which is available to the script as /dbfs/...).

For example, if you have uploaded your jar file to /dbfs/Shared/custom_jars/log4j2-appender-nodep-0.9.23.jar, add the following to the init script:

#!/bin/bash
cp /dbfs/Shared/custom_jars/log4j2-appender-nodep-0.9.23.jar /databricks/jars/
# other code to update log4j2.xml etc...
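A slightly more defensive version of the copy step, as a sketch: it stops with a clear message if the jar isn't where the script expects, rather than letting the cluster start and log4j report CLASS_NOT_FOUND later. The function name install_appender_jar is hypothetical; the paths are the example paths from above, and /databricks/jars is used as the target directory per this answer.

```shell
#!/bin/bash
# Sketch: copy the appender jar into /databricks/jars (the target directory
# suggested above), failing loudly if the jar is missing.
# install_appender_jar is a hypothetical helper name.

# Example paths from the answer; adjust to your upload location and jar version.
APPENDER_JAR="/dbfs/Shared/custom_jars/log4j2-appender-nodep-0.9.23.jar"
JARS_DIR="/databricks/jars"

install_appender_jar() {
  src="$1"
  dest_dir="$2"
  if [ ! -f "$src" ]; then
    echo "init script: appender jar not found at $src" >&2
    return 1
  fi
  cp "$src" "$dest_dir/" || return 1
  echo "init script: copied $(basename "$src") to $dest_dir"
}
```

In the init script, install_appender_jar "$APPENDER_JAR" "$JARS_DIR" || exit 1 would go before the sed loop, so a missing jar stops the script at the point where the cause is obvious.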