I'm trying to use a custom layer to concatenate a number (the position index) to the last dimension of a `(None, 10, 3)` tensor, turning it into a `(None, 10, 4)` tensor. It seems impossible: to concatenate, all dimensions except the one being merged on must be equal, and I can't create a tensor with `None` as its first dimension.
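A minimal eager illustration of that rule, assuming a made-up batch of 2: a rank-2 `(10, 1)` position tensor cannot be joined to a rank-3 input, but once it gets a leading batch axis and is broadcast to `(2, 10, 1)`, `tf.concat` accepts it.

```python
import tensorflow as tf

# Hypothetical input: a batch of 2 sequences, 10 steps, 3 features each.
inputs = tf.random.normal((2, 10, 3))

# Position column 0..9 as a (10, 1) tensor, like the one in the question.
positions = tf.reshape(tf.range(10, dtype=tf.float32), (10, 1))

try:
    tf.concat([inputs, positions], axis=2)  # rank 3 vs rank 2: rejected
except (tf.errors.InvalidArgumentError, ValueError) as err:
    print("concat failed:", type(err).__name__)

# Add a batch axis and broadcast it to the input's batch size.
batched = tf.broadcast_to(positions[tf.newaxis], (2, 10, 1))
combined = tf.concat([inputs, batched], axis=2)
print(combined.shape)  # (2, 10, 4)
```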

For example, the code below gives me this error:

```
ValueError: Shape must be rank 3 but is rank 2 for '{{node position_embedding_concat_37/concat}} = ConcatV2[N=2, T=DT_FLOAT, Tidx=DT_INT32](Placeholder, position_embedding_concat_37/concat/values_1, position_embedding_concat_37/concat/axis)' with input shapes: [?,10,3], [10,1], []
```

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras.layers import Dense, Flatten, Input

class PositionEmbeddingConcat(tf.keras.layers.Layer):

    def __init__(self, sequence_length, **kwargs):
        super(PositionEmbeddingConcat, self).__init__(**kwargs)
        # Positions 0..sequence_length-1 as a (sequence_length, 1) array (no batch axis).
        self.positional_embeddings_array = np.arange(sequence_length).reshape(sequence_length, 1)

    def call(self, inputs):
        # Fails: inputs is rank 3 (batch, 10, 3) but the positions are rank 2 (10, 1).
        outp = tf.concat([inputs, self.positional_embeddings_array], axis=2)
        return outp

seq_len = 10
input_layer = Input(shape=(seq_len, 3))
embedding_layer = PositionEmbeddingConcat(sequence_length=seq_len)
embeddings = embedding_layer(input_layer)
dense_layer = Dense(units=1)
output = dense_layer(Flatten()(embeddings))
modelT = tf.keras.Model(input_layer, output)
```

Is there another way to do this?

Answer:

You have to add a batch dimension to the position tensor as well; then the concatenation is possible. I added a `batch_dims` argument to the layer, built a range of length `seq_length`, and repeated it once per batch element, so the position tensor has shape `(batch_dims, seq_length, 1)`. Check the code:

```python
import numpy as np
import tensorflow as tf

class PositionEmbeddingConcat(tf.keras.layers.Layer):

    def __init__(self, sequence_length, batch_dims, **kwargs):
        super(PositionEmbeddingConcat, self).__init__(**kwargs)
        # Positions 0..sequence_length-1 as a (1, sequence_length, 1) column,
        # copied once per batch element -> (batch_dims, sequence_length, 1).
        positions = tf.range(sequence_length)[tf.newaxis, :, tf.newaxis]
        self.positional_embeddings_array = tf.cast(
            tf.tile(positions, [batch_dims, 1, 1]), dtype=tf.float32)

    def call(self, inputs):
        print(inputs.shape, self.positional_embeddings_array.shape)
        # Both tensors are rank 3 now, so they can be joined on the last axis.
        outp = tf.concat([inputs, self.positional_embeddings_array], axis=2)
        return outp

seq_len = 10
batch_dims = 10  # the model only accepts batches of exactly this size

input_layer = tf.keras.Input(shape=(seq_len, 3))
embedding_layer = PositionEmbeddingConcat(seq_len, batch_dims)
embeddings = embedding_layer(input_layer)
dense_layer = tf.keras.layers.Dense(units=1)
output = dense_layer(tf.keras.layers.Flatten()(embeddings))
modelT = tf.keras.Model(input_layer, output)

modelT(np.random.randn(10, 10, 3))
```

Output:

```
<tf.Tensor: shape=(10, 1), dtype=float32, numpy=
array([[-0.36132658],
       [ 0.41962504],
       [-1.6736453 ],
       [-0.4570936 ],
       [-2.6110568 ],
       [-4.331662 ],
       [-4.2410316 ],
       [-4.8578367 ],
       [-5.099651 ],
       [-5.942002 ]], dtype=float32)>
```

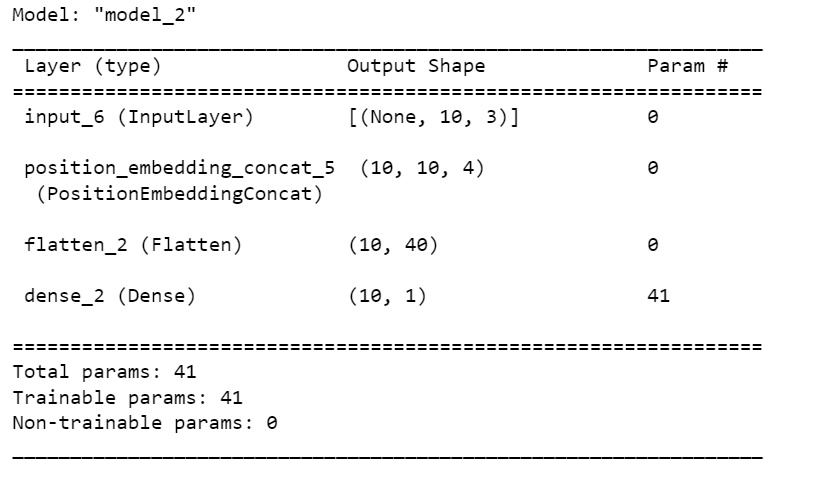

modelT.summary()
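One limitation of the layer above is that `batch_dims` is fixed when the layer is constructed, so the model only accepts batches of exactly that size. A sketch of a variant that keeps the batch dimension as `None` (assuming TensorFlow 2.x with `tf.keras` and a fixed sequence length; the class name `DynamicPositionConcat` is hypothetical) reads the batch size inside `call` with `tf.shape(inputs)[0]` and tiles the positions to match:

```python
import numpy as np
import tensorflow as tf


class DynamicPositionConcat(tf.keras.layers.Layer):
    """Hypothetical layer: appends the time-step index 0..T-1 as one extra
    feature on the last axis, for whatever batch size arrives at run time."""

    def call(self, inputs):
        batch_size = tf.shape(inputs)[0]  # dynamic, so a None batch is fine
        steps = inputs.shape[1]           # assumes a fixed (static) sequence length
        # (1, T, 1) column 0..T-1, in the same dtype as the inputs.
        positions = tf.cast(tf.range(steps), inputs.dtype)[tf.newaxis, :, tf.newaxis]
        # Copy the column once per batch element -> (batch, T, 1).
        positions = tf.tile(positions, [batch_size, 1, 1])
        return tf.concat([inputs, positions], axis=-1)


seq_len = 10
input_layer = tf.keras.Input(shape=(seq_len, 3))
embeddings = DynamicPositionConcat()(input_layer)  # (None, 10, 4)
flat = tf.keras.layers.Flatten()(embeddings)
output = tf.keras.layers.Dense(units=1)(flat)
model = tf.keras.Model(input_layer, output)

# The same model now accepts different batch sizes.
print(model(np.random.randn(4, seq_len, 3).astype("float32")).shape)  # (4, 1)
print(model(np.random.randn(7, seq_len, 3).astype("float32")).shape)  # (7, 1)
```

Using `tf.tile` on the `(1, T, 1)` column copies the whole range for each batch element, so every sequence gets the same positions 0..T-1.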

Answer:

Your question is about concatenating tensors of shape [10, 3] and [10, 1], but you also need to apply a Dense-style projection with a specific number of units. You can comment out the multiplication to keep only the concatenation, or change the number of units to the size you need.

Sample: the concatenation alone does not compute a position embedding; it only appends the tails along the concatenation axis, and the combined result is then propagated through the kernel.

```python
import tensorflow as tf

class MyPositionEmbeddedLayer(tf.keras.layers.Concatenate):

    def __init__(self, units):
        # The base Concatenate layer takes the concatenation axis as its argument.
        super(MyPositionEmbeddedLayer, self).__init__(axis=2)
        self.num_units = units

    def build(self, input_shape):
        # Projection kernel from the last input dimension to `units` outputs.
        self.kernel = self.add_weight(name="kernel",
                                      shape=[int(input_shape[-1]), self.num_units])

    def call(self, inputs, tails):
        ### area to perform pre-calculation or custom algorithms ###
        #                                                          #
        #                                                          #
        ############################################################
        temp = tf.concat([inputs, tails], axis=self.axis)  # append tails on axis 2
        temp = tf.matmul(temp, self.kernel)                # project the last axis
        temp = tf.squeeze(temp, axis=1)                    # drop the size-1 axis 1
        return temp

#####################################################

start = 3
limit = 93
delta = 3
sample = tf.range(start, limit, delta)  # 30 values: 3, 6, ..., 90
sample = tf.cast(sample, dtype=tf.float32)
sample = tf.reshape(sample, (10, 1, 3, 1))

start = 3
limit = 33
delta = 3
tails = tf.range(start, limit, delta)   # 10 values: 3, 6, ..., 30
tails = tf.cast(tails, dtype=tf.float32)
tails = tf.reshape(tails, (10, 1, 1, 1))

layer = MyPositionEmbeddedLayer(10)
print(layer(sample, tails))
```

Output (truncated). The kernel is randomly initialized, so the exact numbers change between runs; rows for neighboring input values come out close to each other:

```
...
 [[-26.67632    35.44779    23.239683   20.374893  -12.882696
    54.963055  -18.531412   -4.589509  -21.722694  -43.44675  ]
  [-27.629044   36.713783   24.069672   21.102568  -13.3427925
    56.92602   -19.193249   -4.7534204 -22.498507  -44.99842  ]
  [-28.58177    37.979774   24.89966    21.830242  -13.802889
    58.88899   -19.855083   -4.917331  -23.274317  -46.55009  ]
  [ -9.527256   12.6599245   8.299887    7.276747   -4.600963
    19.629663   -6.6183615  -1.6391104  -7.7581053 -15.516697 ]]], shape=(10, 4, 10), dtype=float32)
```
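The concatenate-then-project idea can also be expressed with built-in Keras layers instead of a hand-made kernel. This is a sketch under assumptions: the shapes from the question (`(None, 10, 3)` features plus a `(None, 10, 1)` position column fed as a second input) and 10 output units to mirror `MyPositionEmbeddedLayer(10)`; `Dense(use_bias=False)` applied to a 3-D tensor multiplies only the last axis by its kernel, like the `tf.matmul` above.

```python
import tensorflow as tf

seq_len = 10
features = tf.keras.Input(shape=(seq_len, 3))   # (None, 10, 3)
positions = tf.keras.Input(shape=(seq_len, 1))  # (None, 10, 1)

merged = tf.keras.layers.Concatenate(axis=-1)([features, positions])  # (None, 10, 4)
projected = tf.keras.layers.Dense(units=10, use_bias=False)(merged)  # (None, 10, 10)
model = tf.keras.Model([features, positions], projected)

# Example call: batch of 2, with the position column 0..9 broadcast to each sequence.
x = tf.random.normal((2, seq_len, 3))
p = tf.broadcast_to(
    tf.reshape(tf.range(seq_len, dtype=tf.float32), (1, seq_len, 1)), (2, seq_len, 1))
y = model([x, p])
print(y.shape)  # (2, 10, 10)
```

Because the position column is a model input here, its batch size always matches the features, so nothing in the graph has to be created with a fixed batch dimension.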