I am using the copy activity to update rows in Azure Table Storage. Currently the pipeline fails if there is an error updating any of the rows/batches.

Is there a way to gracefully handle the failed rows and continue with the copy activity for the rest of the data?

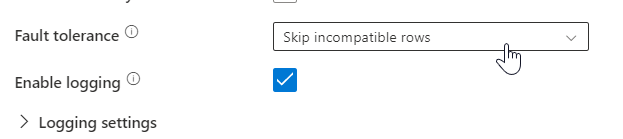

I already tried the Fault Tolerance option which the copy activity provides but that does not solve this case.

CodePudding user response:

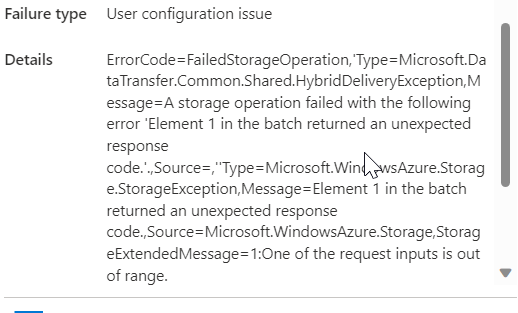

- I reproduced the scenario and got the same error when mapping a column that contains special characters to the RowKey column in Table Storage.

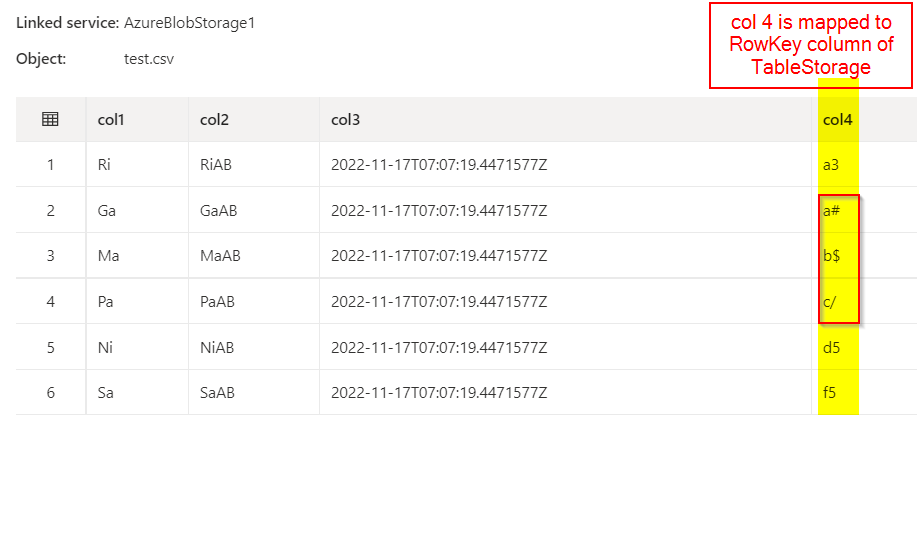

Source dataset

Fault tolerance settings

Error Message:

In the copy activity, it is not possible to skip incompatible rows other than by using fault tolerance. A workaround is to use a data flow activity to separate the compatible rows from the incompatible rows, and then copy the compatible data using a copy activity. Below is the approach.

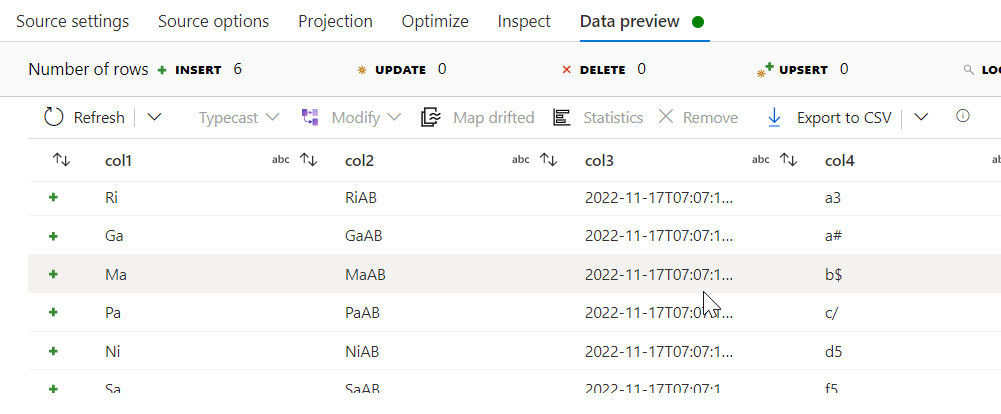

- Source is taken as in below image.

- Since

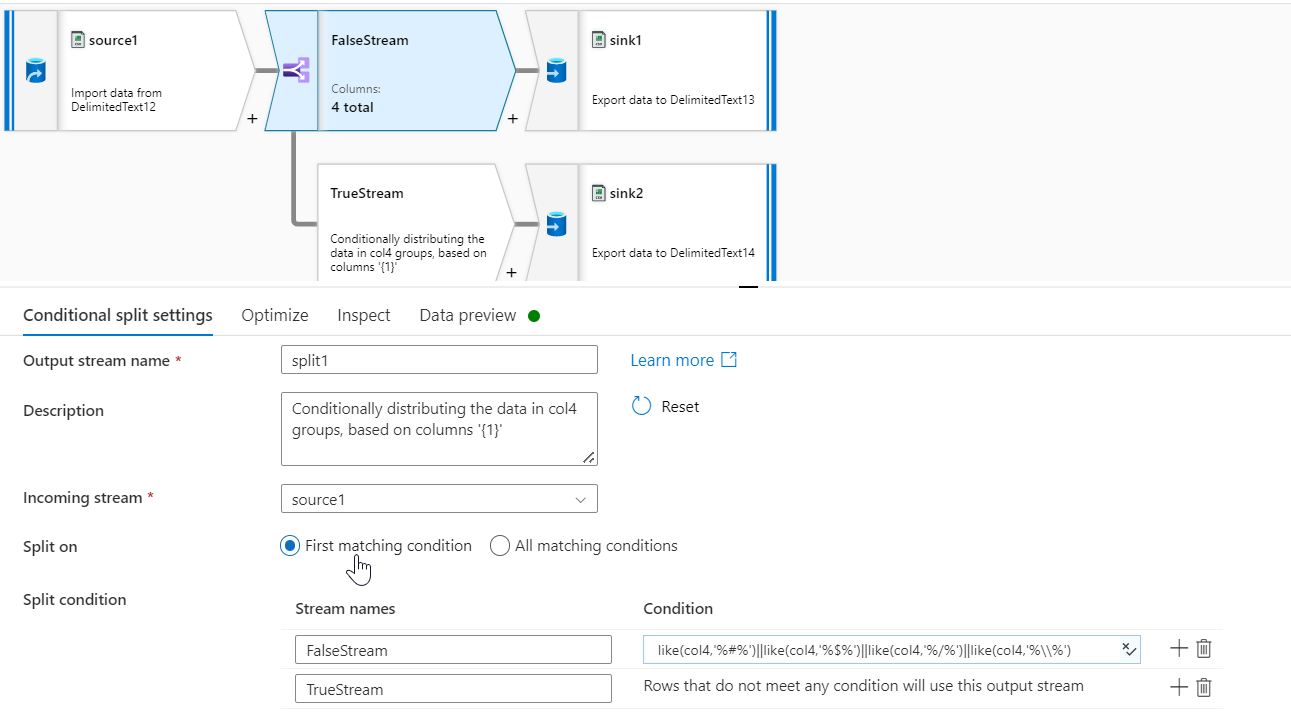

col4needs to be checked before loading to Table storage, Condition is given on col4 data using condition activity. Conditional split Transformation is added after source transformation. Condition is given as, FalseStream :like(col4,'%#%')||like(col4,'%$%')||like(col4,'%/%')||like(col4,'%\\%')

**Sample characters are given in the above condition.**

True Stream will be the rows which do not match the above condition.
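To make the split logic concrete outside of the data flow designer, here is a minimal Python sketch of the same idea. It is only an illustration, not the data flow itself: the function name `split_rows`, the sample rows, and the `SAMPLE_CHARS` tuple are hypothetical, and the character list simply mirrors the sample characters used in the condition above, so replace it with the characters that actually cause errors in your data.

```python
# Sample characters mirrored from the condition above (an assumption, not a
# complete list of characters that Table Storage rejects).
SAMPLE_CHARS = ("#", "$", "/", "\\")


def split_rows(rows, key_column="col4", bad_chars=SAMPLE_CHARS):
    """Split rows into (true_stream, false_stream).

    false_stream: rows whose key_column contains any of bad_chars.
    true_stream:  all remaining rows, safe to load into Table Storage.
    """
    true_stream, false_stream = [], []
    for row in rows:
        value = str(row.get(key_column, ""))
        if any(ch in value for ch in bad_chars):
            false_stream.append(row)
        else:
            true_stream.append(row)
    return true_stream, false_stream


# Hypothetical sample data shaped like the source dataset.
rows = [
    {"col1": 1, "col4": "abc"},
    {"col1": 2, "col4": "a#b"},
    {"col1": 3, "col4": "x/y"},
    {"col1": 4, "col4": "plain-key"},
]

compatible, incompatible = split_rows(rows)
```

Here `compatible` corresponds to the True Stream and `incompatible` to the False Stream described above.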

- The False and True streams are added to Sink1 and Sink2 respectively to copy the data to Blob Storage.

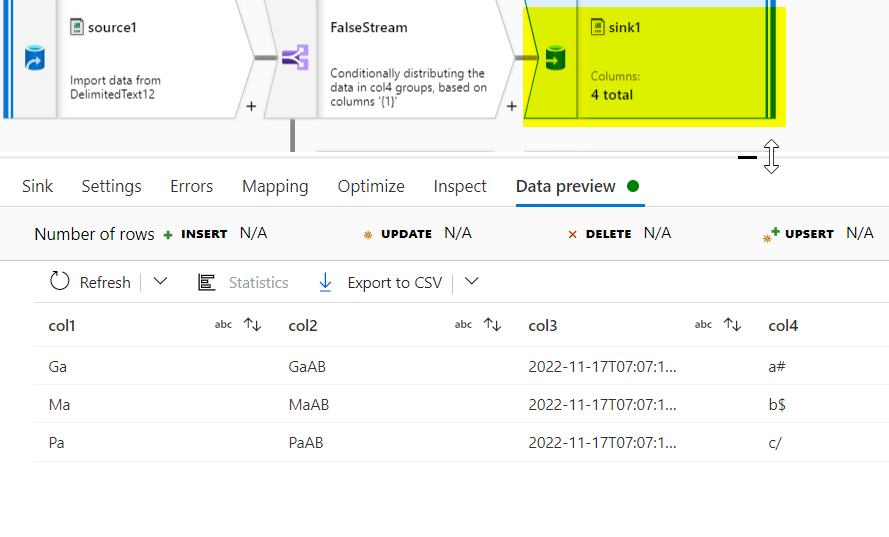

Output:

False Stream:

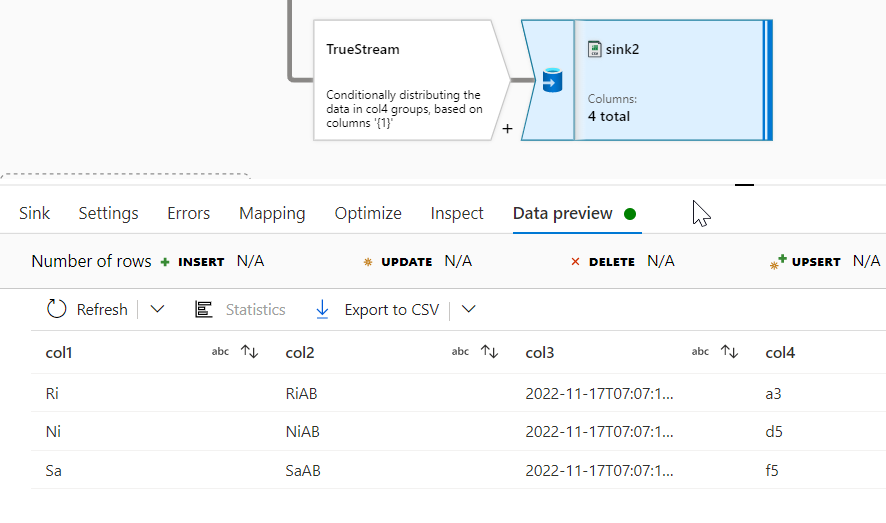

True Stream Data:

- Once the compatible data is copied to Blob Storage, it can be copied to Table Storage using a copy activity.