I have a Databricks workspace through Azure, and I use the Databricks Jobs API to run some Python scripts that create files.

I want to know if I can retrieve these files after the job has finished running.

- In Azure, I see a storage account that is associated with the Databricks workspace; among its containers there is a 'job' entry. When I try to access it, I get a DenyAssignmentAuthorizationFailed error. I am the organisation admin, so getting the right permissions shouldn't be a problem, although I don't know why I don't have access already and presume it's a Databricks thing.
- I tried googling and looking through Azure's docs, but there's surprisingly little documentation on Databricks Jobs or its data storage.

Below is our job code, which is called through the Jobs API.

import json

# Parse job info from the notebook context
info = dbutils.notebook.entry_point.getDbutils().notebook().getContext().toJson()
info = json.loads(info)
RUN_ID = info.get("tags").get("multitaskParentRunId", "no_run_id")

# Create a directory for this run on the driver's local filesystem
run_directory = f"/databricks/driver/training_runs/{RUN_ID}"
dbutils.fs.mkdirs(f"file:{run_directory}")

# Write a file into the run directory
with open(f"{run_directory}/file.txt", "w") as file_:
    file_.write("Hello world :)")

CodePudding user response:

From the given code, I can see that the path in which you are creating a directory and then writing a file, is not DBFS but general storage with path

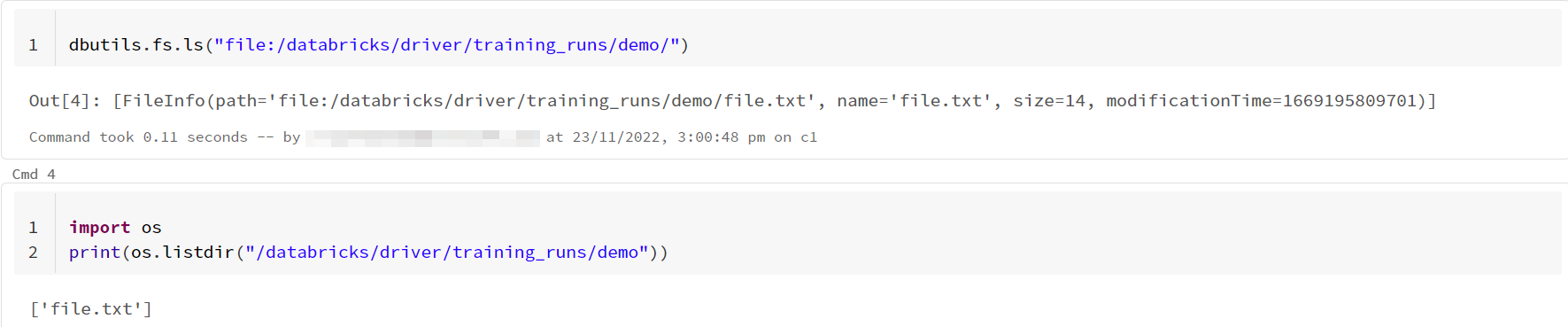

file:/databricks/driver/training_runs.To get the contents of the above path, there is no UI supported in Databricks. You can list the contents using either of the following:

dbutils.fs.ls("file:/databricks/driver/training_runs/<your_run_id>/")

# OR
# import os
# print(os.listdir("/databricks/driver/training_runs/demo"))

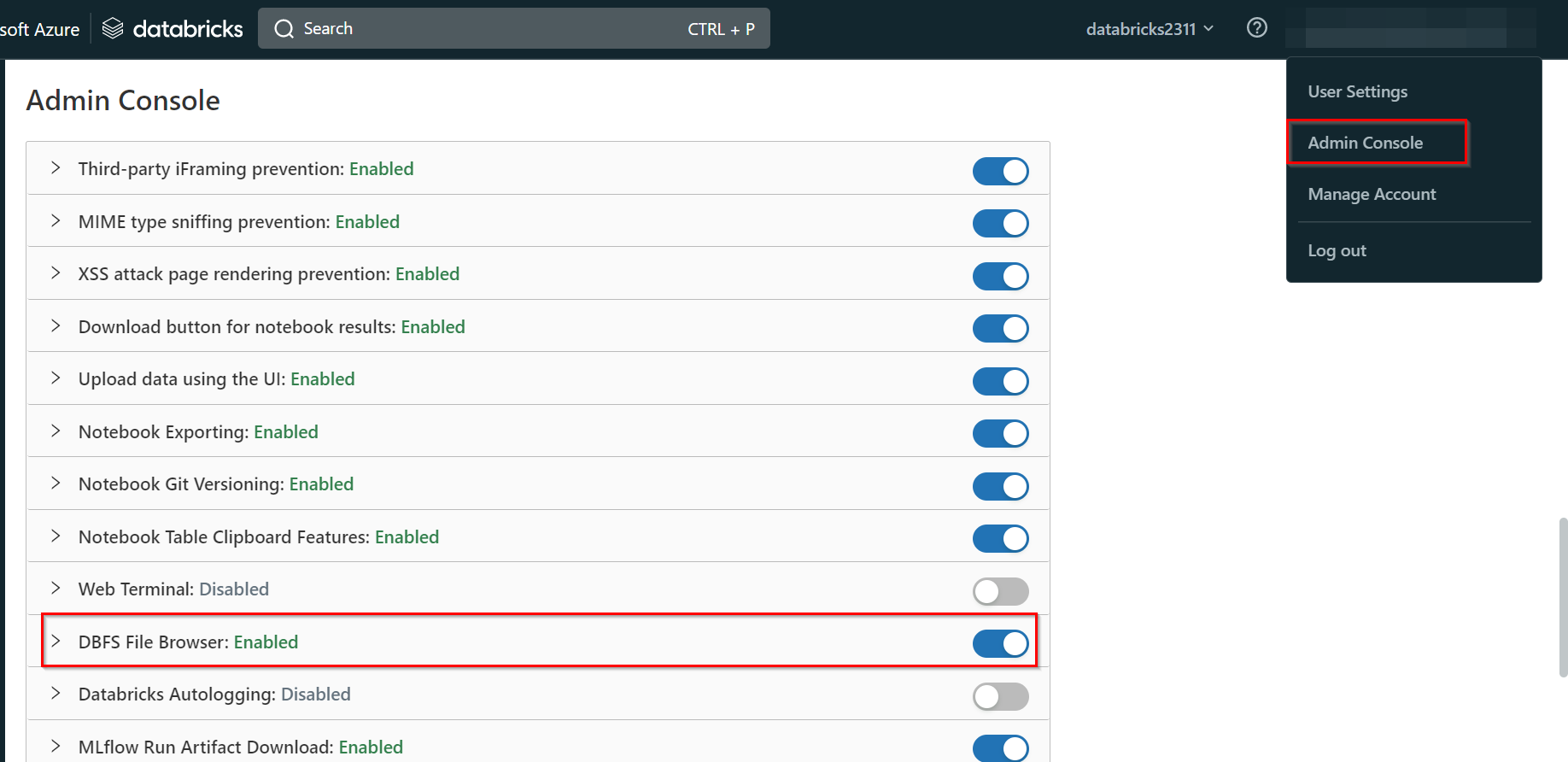

- If you want to view these files in the UI, you can write them to DBFS instead. You first have to enable the DBFS browser: navigate to Admin Console -> Workspace settings -> DBFS File Browser and enable it. Then refresh the workspace.

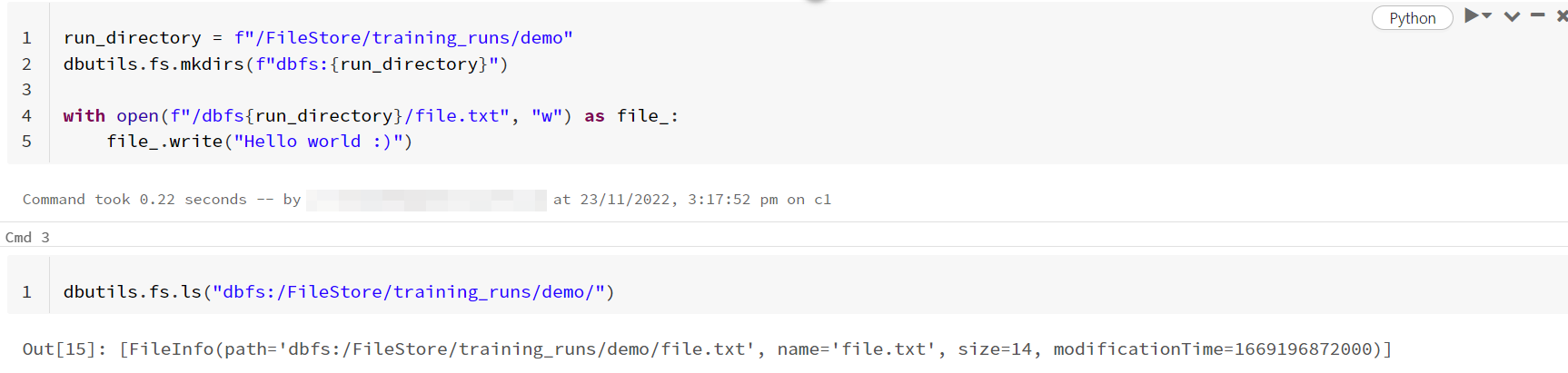

- Now, instead of using the path file:/databricks/driver/training_runs, you can use a DBFS path as shown below:

# Directory under FileStore on DBFS
run_directory = "/FileStore/training_runs/demo"
dbutils.fs.mkdirs(f"dbfs:{run_directory}")

# Local file APIs reach DBFS through the /dbfs mount
with open(f"/dbfs{run_directory}/file.txt", "w") as file_:
    file_.write("Hello world :)")

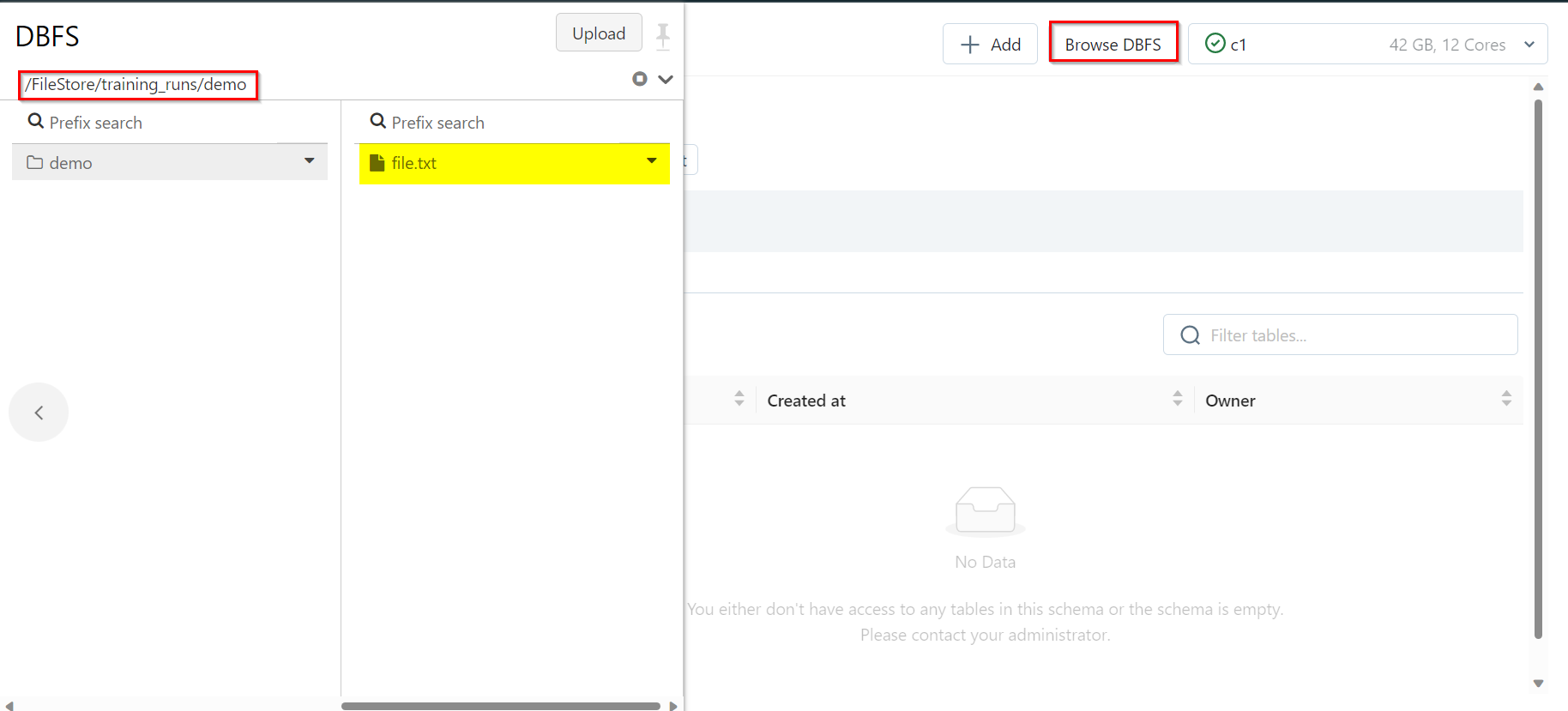

- Now you can navigate to Data -> Browse DBFS -> FileStore and click on the folder that you want to browse.
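Since the job is started over the Jobs API, a file written under FileStore could also be fetched programmatically once the run has finished. The following is only a minimal sketch, assuming the DBFS REST API read endpoint (GET /api/2.0/dbfs/read, which returns base64-encoded data together with a bytes_read count) and a personal access token. The names make_dbfs_reader and download_dbfs_file, as well as the host and token values, are hypothetical and not part of the original answer.

```python
import base64
import json
import urllib.parse
import urllib.request

# Assumption: the read endpoint caps a single call at about 1 MB.
CHUNK_SIZE = 1024 * 1024


def make_dbfs_reader(host, token):
    """Return a function that fetches one chunk via GET /api/2.0/dbfs/read."""
    def read_chunk(path, offset, length):
        query = urllib.parse.urlencode(
            {"path": path, "offset": offset, "length": length}
        )
        request = urllib.request.Request(
            f"{host}/api/2.0/dbfs/read?{query}",
            headers={"Authorization": f"Bearer {token}"},
        )
        with urllib.request.urlopen(request) as response:
            return json.load(response)
    return read_chunk


def download_dbfs_file(read_chunk, path, chunk_size=CHUNK_SIZE):
    """Assemble a DBFS file from successive base64-encoded chunks."""
    parts = []
    offset = 0
    while True:
        reply = read_chunk(path, offset, chunk_size)
        bytes_read = reply["bytes_read"]
        if bytes_read == 0:
            break
        parts.append(base64.b64decode(reply["data"]))
        offset += bytes_read
        if bytes_read < chunk_size:
            # A short chunk means the end of the file was reached.
            break
    return b"".join(parts)


# Hypothetical usage (placeholder host and token, not executed here):
# reader = make_dbfs_reader("https://<your-workspace-url>", "<your-token>")
# data = download_dbfs_file(reader, "/FileStore/training_runs/demo/file.txt")
```

Passing the chunk reader in as a function keeps the assembly logic independent of the HTTP call, so it can be checked locally with a stub before pointing it at a real workspace.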

CodePudding user response:

I suggest creating a storage account separate from DBFS to hold newly created or external files. You can modify the script to write directly to that storage account, or copy the files there after they are created. This is just how we use it; there is nothing wrong with writing to DBFS.
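The "copy after its creation" option could look roughly like the sketch below. It assumes the destination is reachable as an ordinary path from the driver (for example the /dbfs mount used in the first answer, or a mounted storage container). The helper name persist_run_directory is hypothetical. It copies file contents only, without permissions or timestamps, on the assumption that a mounted filesystem may not support updating them.

```python
import shutil
from pathlib import Path


def persist_run_directory(local_dir, dest_root):
    """Copy a driver-local run directory under dest_root and return the new path."""
    source = Path(local_dir)
    target = Path(dest_root) / source.name
    target.mkdir(parents=True, exist_ok=True)
    for path in source.rglob("*"):
        destination = target / path.relative_to(source)
        if path.is_dir():
            destination.mkdir(parents=True, exist_ok=True)
        else:
            destination.parent.mkdir(parents=True, exist_ok=True)
            # copyfile transfers contents only, no metadata
            shutil.copyfile(path, destination)
    return target


# Hypothetical usage at the end of the job script (paths are assumptions):
# persist_run_directory(f"/databricks/driver/training_runs/{RUN_ID}",
#                       "/dbfs/FileStore/training_runs")
```

Calling this as the last step of the job would leave a copy of the run directory in persistent storage, while the rest of the script keeps writing to the driver's local disk.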

In any case, please refer to the following link on connecting to Azure ADLS/Blob Storage:

https://docs.databricks.com/external-data/azure-storage.html

It lists several authentication methods; evaluate which one you consider the safest and proceed with that option.

Hope it helps...