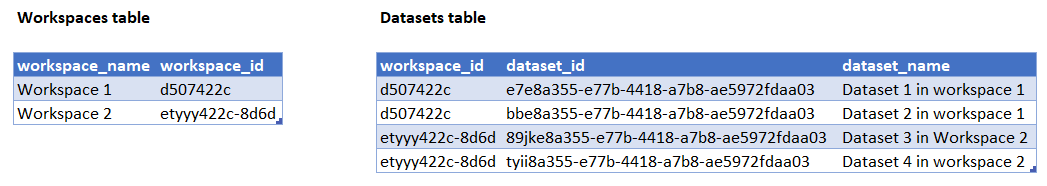

I have a nested Python dictionary that I want to convert into a relational model. I am struggling to parse the dictionary into two related tables: a "workspace" table and a "datasets" table - joined by the key workspace_id

simplified_dict ={

"workspaces":[

{

"workspace_id":"d507422c",

"workspace_name":"Workspace 1",

"datasets":[

{

"dataset_id":"e7e8a355",

"dataset_name":"Dataset 1 in workspace 1"

},

{

"dataset_id":"bbe8a355",

"dataset_name":"Dataset 2 in workspace 1"

}

]

},

{

"workspace_id":"etyyy422c-8d6d",

"workspace_name":"Workspace 2",

"datasets":[

{

"dataset_id":"89jke8a355",

"dataset_name":"Dataset 3 in Workspace 2"

},

{

"dataset_id":"tyii8a355",

"dataset_name":"Dataset 4 in workspace 2"

}

]

}

],

"datasourceInstances":[

]

}

I can create a table containing workspace information using the pandas json_normalize function.

import pandas as pd

df_workspaces = pd.json_normalize(simplified_dict, record_path=['workspaces'])

df_workspaces

However, when I try and create the second table "datasets" using the same function, I get a dataframe, but it doesn't have a workspace key, that allows me to join the two tables.

df_datasets_in_workspaces = pd.json_normalize(simplified_dict, record_path=['workspaces','datasets'])

df_datasets_in_workspaces

Is there a way to add the workspace key to the this datasets table, to enable the join, while still using the json_normalize function?

If possible I would prefer a solution using json_normalize, rather than using a loop or comprehension, as the json_normalize allows me to easily convert any layer of my real data (with 5 levels of nesting) into a dataframe. With my real datset, I will be looking to generate circa 15 tables, so a low code, very intuitive approach is prefered.

Copy of a google colab notebook with the code is accessible here

Any help would be appreciated.

CodePudding user response:

workspace_df = pd.json_normalize(data=simplified_dict, record_path=["workspaces"]).drop(columns="datasets")

print(workspace_df)

datasets_df = pd.json_normalize(data=simplified_dict["workspaces"], meta=["workspace_id"], record_path=["datasets"])

print(datasets_df)

Outputs:

workspace_id workspace_name

0 d507422c Workspace 1

1 etyyy422c-8d6d Workspace 2

dataset_id dataset_name workspace_id

0 e7e8a355 Dataset 1 in workspace 1 d507422c

1 bbe8a355 Dataset 2 in workspace 1 d507422c

2 89jke8a355 Dataset 3 in Workspace 2 etyyy422c-8d6d

3 tyii8a355 Dataset 4 in workspace 2 etyyy422c-8d6d