Assume that I have a PySpark DataFrame. Some of the cells contain only special characters.

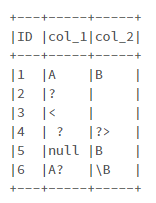

Sample dataset:

import pandas as pd

data = {'ID': [1, 2, 3, 4, 5, 6],

'col_1': ['A', '?', '<', ' ?', None, 'A?'],

'col_2': ['B', ' ', '', '?>', 'B', '\B']

}

pdf = pd.DataFrame(data)

df = spark.createDataFrame(pdf)

I want to count the number of rows which contain only special characters (except blank cells). Values like 'A?' and '\B' and blank cells are not counted.

The expected output will be:

{'ID': 0, 'col_1': 3, 'col_2': 1}

Is there anyway to do that?

CodePudding user response:

Taking your sample dataset, this should do:

import pandas as pd

from pyspark.sql import SparkSession

from pyspark.sql.functions import col, when

spark = SparkSession.builder.getOrCreate()

import pandas as pd

data = {'ID': [1, 2, 3, 4, 5, 6],

'col_1': ['A', '?', '<', ' ?', None, 'A?'],

'col_2': ['B', ' ', '', '?>', 'B', '\B']

}

pdf = pd.DataFrame(data)

df = spark.createDataFrame(pdf)

res = {}

for col_name in df.columns:

df = df.withColumn('matched', when((col(col_name).rlike('[^A-Za-z0-9\s]')) & ~(col(col_name).rlike('[A-Za-z0-9]')), True).otherwise(False))

res[col_name] = df.select('ID').where(df.matched).count()

print(res)

The trick is to use regular expressions with two conditions to filter the cells that are valid according to your logic.