I am training a binary classifier that distinguishes between disease and non-disease.

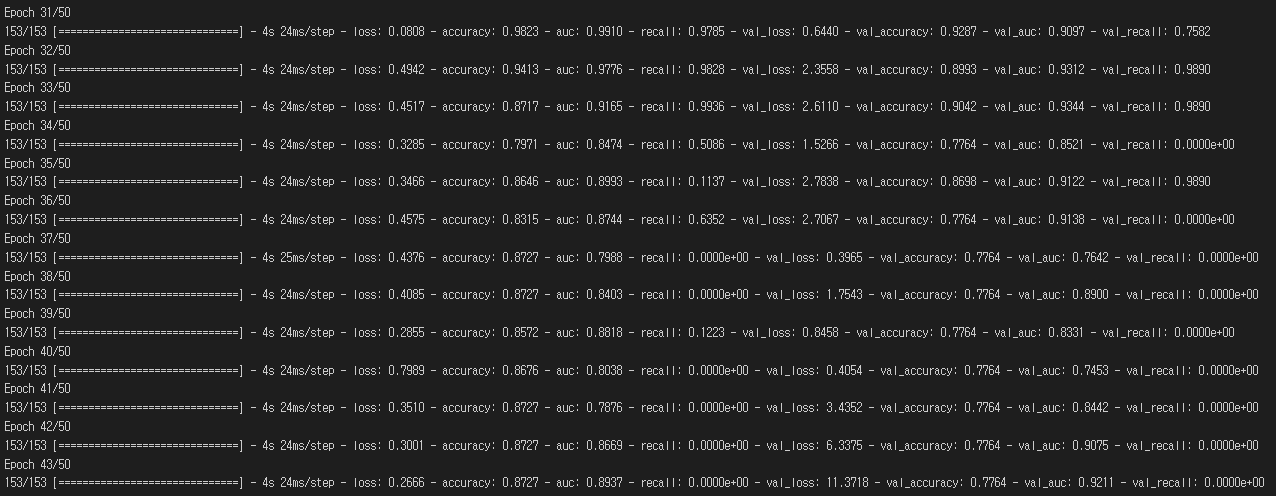

When I train the model, the training loss decreases and AUC and accuracy increase at first.

But after a certain epoch, the training loss increases and AUC and accuracy decrease.

I don't know why training performance degrades after a certain epoch.

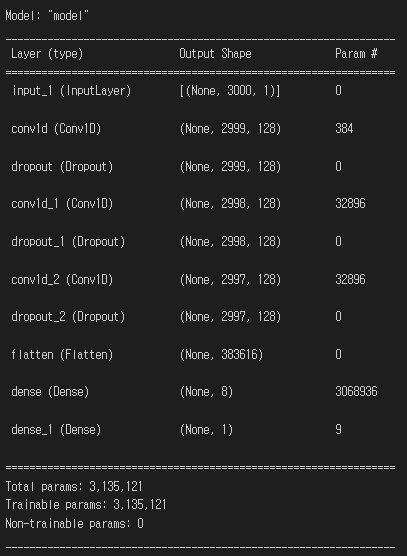

I used a general 1D CNN model and standard methods; details here:

I have already tried:

- shuffling the batches

- introducing class weights

- changing the loss (binary_crossentropy > BinaryFocalLoss)

- changing the learning rate

CodePudding user response:

Two questions for you going forward.

- Do the training and validation accuracy keep dropping if you just let it run for, say, 100 epochs? That is definitely something I would try.

- Which optimizer are you using? SGD? Adam?

It is probably the optimizer.

Since you do not seem to augment your data (augmentation could be a problem if it accidentally breaks the association between samples and labels), each epoch should see similar gradients. So my guess is that, at this point in the optimization, the learning rate, and therefore the update step, is no longer well suited: instead of progressing further into that local optimum, the optimizer oversteps the minimum, which lowers both training and validation performance.
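The overstepping idea can be illustrated with a toy example. The sketch below is not your model: it runs plain gradient descent on a one-dimensional quadratic, and the function name, `curvature`, and `learning_rate` values are assumptions chosen only for illustration. The same fixed step size that converges on a gently curved loss makes the loss grow on a sharply curved one.

```python
def gradient_descent_losses(curvature, learning_rate, steps=10, w0=1.0):
    """Minimise f(w) = 0.5 * curvature * w**2 with a fixed step size.

    Returns the loss recorded before each update.
    """
    w = w0
    losses = []
    for _ in range(steps):
        losses.append(0.5 * curvature * w ** 2)
        grad = curvature * w       # derivative of f at w
        w -= learning_rate * grad  # plain gradient-descent update
    return losses


# Same learning rate, two different curvatures (hypothetical values).
gentle = gradient_descent_losses(curvature=1.0, learning_rate=0.1)
sharp = gradient_descent_losses(curvature=25.0, learning_rate=0.1)

print("gentle:", [round(x, 4) for x in gentle])
print("sharp: ", [round(x, 2) for x in sharp])
```

In the gentle case each update shrinks `w`, so the loss falls. In the sharp case each update jumps past the minimum and lands further away on the other side, so the loss rises even though the learning rate never changed.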

This is an intuitive explanation and the next things I would try are:

- Scheduling the learning rate

- Using a more sophisticated optimizer (starting with Adam if you are not already using it)
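As a rough sketch of the first suggestion, a step-decay schedule could look like the following. The `drop` and `every` values and the starting rate are made-up placeholders, not tuned recommendations. The function takes `(epoch, lr)` so that, assuming you are using Keras (which the loss name `binary_crossentropy` suggests), it could be passed to `tf.keras.callbacks.LearningRateScheduler`; the loop here only simulates what the schedule would do, without needing TensorFlow.

```python
def step_decay(epoch, lr, drop=0.5, every=10):
    """Multiply the learning rate by `drop` every `every` epochs.

    `drop` and `every` are hypothetical defaults for illustration.
    """
    if epoch > 0 and epoch % every == 0:
        return lr * drop
    return lr


# Simulate the schedule over 30 epochs, starting from an assumed 1e-3.
lr = 1e-3
history = []
for epoch in range(30):
    lr = step_decay(epoch, lr)
    history.append(lr)

print(history[0], history[10], history[20])

# Assumed Keras wiring (not executed here):
#   callback = tf.keras.callbacks.LearningRateScheduler(step_decay)
#   model.fit(x, y, epochs=30, callbacks=[callback])
```

If you would rather react to the metrics than follow a fixed timetable, Keras also provides `ReduceLROnPlateau`, which lowers the learning rate when a monitored quantity stops improving.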

CodePudding user response:

Your model is overfitting. This is why your accuracy increases and then begins decreasing. You need to implement early stopping to stop at the epoch with the best results. You should also add dropout layers.
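To show what early stopping does, here is a minimal plain-Python sketch of the patience logic. The function name and the validation losses are made up for illustration. In Keras, the built-in `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=3, restore_best_weights=True)` serves the same purpose, so you would not normally write this yourself.

```python
def best_epoch_with_patience(val_losses, patience=3):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve for `patience`
    consecutive epochs; `best_epoch` is where the lowest loss was seen.
    """
    best_loss = float("inf")
    best_epoch = -1
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch
    # Patience never ran out: training ran to the last epoch.
    return len(val_losses) - 1, best_epoch


# Made-up validation losses that improve, then degrade.
val_losses = [0.70, 0.55, 0.48, 0.45, 0.47, 0.50, 0.56, 0.60]
stop_epoch, best_epoch = best_epoch_with_patience(val_losses, patience=3)
print(stop_epoch, best_epoch)
```

With these numbers the best loss is at epoch index 3 and training stops at index 6, after three epochs without improvement. Restoring the weights from the best epoch is what lets you keep the model from before performance started to drop.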