I'm attempting to implement a Gaussian smoothing/flattening function in my Python 3.10 script to flatten a set of XY points. For each data point, I create a Y buffer and a Gaussian kernel, which I use to flatten each Y point based on its neighbours.
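
For reference, Gaussian kernel smoothing of XY points is commonly written as a normalised weighted average of neighbouring points. This form assumes a bandwidth $\sigma$ in the same units as $x$ and sums over all points $j$; the buffer size used in the original script isn't shown, so restricting $j$ to a window around $x_i$ is an equally valid variant:

$$
\hat{y}_i = \frac{\sum_{j} w_{ij}\, y_j}{\sum_{j} w_{ij}}, \qquad w_{ij} = \exp\!\left(-\frac{(x_j - x_i)^2}{2\sigma^2}\right)
$$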

Here are some sources on the Gaussian-smoothing method:

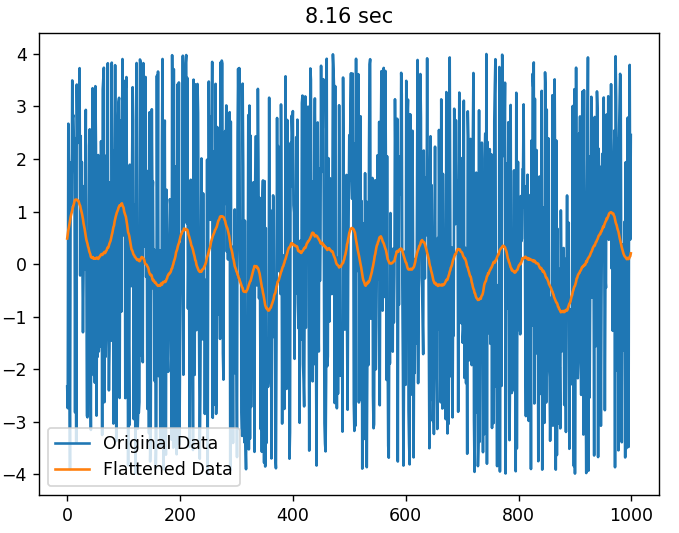

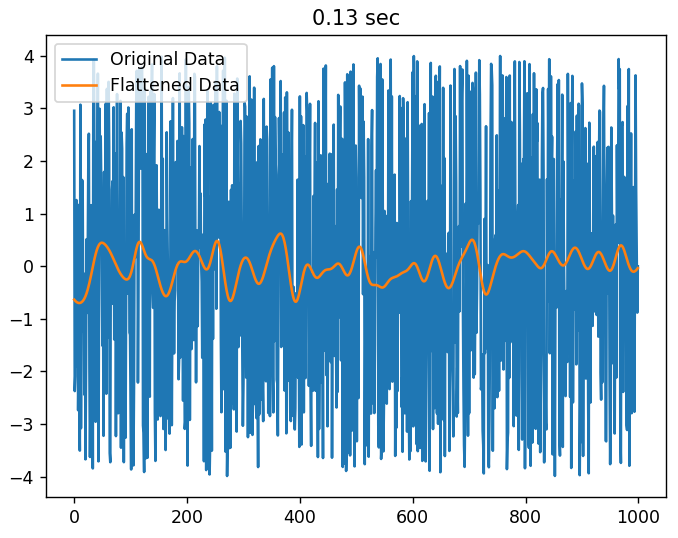

Blue is the original data and orange is the flattened data.

However, it takes a surprisingly long time to smooth even small amounts of data. In the example above I generated 1,000 data points, and it takes ~8 seconds to flatten them. With datasets of more than 10,000 points, it can easily take over 10 minutes.
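
Those two timings are roughly what quadratic cost would predict, i.e. each of the $n$ points doing work proportional to $n$ (an assumption, since the script itself isn't shown):

$$
t(10\,000) \approx t(1\,000) \times \left(\frac{10\,000}{1\,000}\right)^{2} = 8\ \text{s} \times 100 = 800\ \text{s} \approx 13\ \text{min}
$$

Linear cost would instead predict only about 80 s.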

Since this is a very popular and well-known way of smoothing data, I was wondering why this script runs so slowly. I originally implemented it with standard Python lists, calling `append`, but it was extremely slow. I hoped that using NumPy arrays instead, without calling `append`, would make it faster, but that is not really the case.

Is there a way to speed up this process? Is there an existing Gaussian-smoothing function that takes the same arguments and could do the job faster?

Thanks for reading my post, any guidance is appreciated.

Answer:

You have a number of loops - those tend to slow you down.

Here are two examples. Refactoring `GetClosestNum` to this:

```python
import numpy as np

def GetClosestNum(base, nums):
    # Vectorized: compute all distances at once and take the smallest
    nums = np.array(nums)
    diffs = np.abs(nums - base)
    return nums[np.argmin(diffs)]
```

and refactoring `FindInArray` to this:

```python
def FindInArray(arr, value):
    # Indices where arr equals value; return the first one, or -1 if absent
    res = np.where(np.array(arr) - value == 0)[0]
    if res.size > 0:
        return res[0]
    else:
        return -1
```

lets me process 5,000 data points in 1.5 s instead of the 54 s it took with your original code.
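
If `GetClosestNum` is still called once per data point, each call scans the whole array, so the total work stays quadratic. Assuming `nums` is sorted ascending (the original calling code isn't shown, so this is an assumption), a batched lookup with `np.searchsorted` can find the closest value for every base in a single vectorized call. `closest_nums` is a hypothetical name, sketched here rather than taken from the original code:

```python
import numpy as np


def closest_nums(bases, nums):
    """For each value in `bases`, return the closest value in `nums`.

    Hypothetical batched replacement for calling GetClosestNum in a loop.
    Assumes `nums` is sorted ascending and has at least two elements.
    """
    nums = np.asarray(nums)
    bases = np.asarray(bases)
    # Binary search: index of the first element of nums that is >= each base,
    # clipped so that both idx - 1 and idx are valid indices
    idx = np.clip(np.searchsorted(nums, bases), 1, len(nums) - 1)
    left = nums[idx - 1]
    right = nums[idx]
    # Keep whichever neighbour is nearer; ties go to the smaller value,
    # matching np.argmin's first-occurrence rule on a sorted array
    return np.where(bases - left <= right - bases, left, right)


print(closest_nums([0, 2, 5, 8], [1, 3, 7]))  # -> [1 1 3 7]
```

Each lookup is a binary search, so finding the closest value for all n points costs O(n log n) instead of O(n²).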

NumPy lets you do a lot of powerful stuff without looping. Jake VanderPlas has a few really good (oldie but goodie) videos on using NumPy constructs in place of loops to massively increase speed.
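
Applying the same idea to the whole smoothing step, not just the helpers, here is a minimal sketch of Gaussian kernel smoothing that replaces the per-point Python loop with NumPy broadcasting. `gaussian_smooth`, `sigma` (in x units) and `chunk` are hypothetical names, not from the original code, and the sketch averages over all points rather than a fixed-size buffer:

```python
import numpy as np


def gaussian_smooth(x, y, sigma, chunk=1000):
    """Gaussian kernel smoothing of y(x) without a Python loop per point.

    Hypothetical helper: each output value is a weighted average of all
    input y values, with weights exp(-(x_j - x_i)**2 / (2 * sigma**2)).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    out = np.empty_like(y)
    # Process output points in chunks so the weight matrix is chunk x n, not n x n
    for start in range(0, len(x), chunk):
        xs = x[start:start + chunk]
        # Broadcasting: (chunk, 1) - (1, n) -> (chunk, n) matrix of distances
        w = np.exp(-0.5 * ((xs[:, None] - x[None, :]) / sigma) ** 2)
        # Row-wise weighted average; each row includes the point itself
        # (weight 1), so the row sums are never zero
        out[start:start + chunk] = (w @ y) / w.sum(axis=1)
    return out


# Example usage on 10,000 noisy, irregularly spaced points (synthetic data)
rng = np.random.default_rng(0)
x = np.sort(rng.uniform(0.0, 10.0, 10_000))
y = np.sin(x) + rng.normal(0.0, 0.3, x.size)
y_smooth = gaussian_smooth(x, y, sigma=0.2)
```

This still does O(n²) arithmetic, but inside NumPy rather than the interpreter, and chunking keeps memory at `chunk × n` floats. For evenly spaced x, `scipy.ndimage.gaussian_filter1d(y, sigma)` is an existing function for this kind of smoothing, though its `sigma` is measured in samples rather than x units and its edge handling differs.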

It also takes ~1.75 seconds to process the 10,000 points.

Thanks for the feedback everyone, cheers!