I am trying to scrape Oddsportal and the below code however when I run it, I get IndexError

Code:

import threading

from datetime import datetime

from math import nan

from multiprocessing.pool import ThreadPool

import pandas as pd

from bs4 import BeautifulSoup as bs

from selenium import webdriver

class Driver:

def __init__(self):

options = webdriver.ChromeOptions()

options.add_argument("--headless")

# Un-comment next line to supress logging:

options.add_experimental_option('excludeSwitches', ['enable-logging'])

self.driver = webdriver.Chrome(options=options)

def __del__(self):

self.driver.quit() # clean up driver when we are cleaned up

# print('The driver has been "quitted".')

threadLocal = threading.local()

def create_driver():

the_driver = getattr(threadLocal, 'the_driver', None)

if the_driver is None:

the_driver = Driver()

setattr(threadLocal, 'the_driver', the_driver)

return the_driver.driver

class GameData:

def __init__(self):

self.date = []

self.time = []

self.game = []

self.score = []

self.home_odds = []

self.draw_odds = []

self.away_odds = []

self.country = []

self.league = []

def generate_matches(pgSoup, defaultVal=None):

evtSel = {

'time': 'p.whitespace-nowrap',

'game': 'a div:has(>a[title])',

'score': 'a:has(a[title]) div.hidden',

'home_odds': 'a:has(a[title])~div:not(.hidden)',

'draw_odds': 'a:has(a[title])~div:not(.hidden) div:nth-last-of-type(3)',

'away_odds': 'a:has(a[title])~div:nth-last-of-type(2)',

}

events, current_group = [], {}

for evt in pgSoup.select('div[set]>div:last-child'):

if evt.parent.select(f':scope>div:first-child div div'):

cgVals = [v.get_text(' ').strip() if v else defaultVal for v in [

evt.parent.select_one(s) for s in

[':scope>div:first-child div>div:first-child',

':scope>div:first-child>a:nth-of-type(2):nth-last-of-type(2)',

':scope>div:first-child>a:nth-of-type(3):last-of-type']]]

current_group = dict(zip(['date', 'country', 'league'], cgVals))

evtRow = {'date': current_group.get('date', defaultVal)}

for k, v in evtSel.items():

v = evt.select_one(v).get_text(' ') if evt.select_one(v) else defaultVal

evtRow[k] = ' '.join(v.split()) if isinstance(v, str) else v

evtRow['country'] = current_group.get('country', defaultVal)

evtRow['league'] = current_group.get('league', defaultVal)

events.append(evtRow)

return events

def parse_data(url, return_urls=False):

browser = create_driver()

browser.get(url)

browser.implicitly_wait(25)

soup = bs(browser.page_source, "lxml")

game_data = GameData()

game_keys = [a for a, av in game_data.__dict__.items() if isinstance(av, list)]

for row in generate_matches(soup, defaultVal=nan):

for k in game_keys: getattr(game_data, k).append(row.get(k, nan))

if return_urls:

span = soup.find('span', {'class': 'next-games-date'})

a_tags = soup.select('span.next-games-date a[href]') # Instead of a_tags = soup.select('span.next-games-date a[href]')

urls = ['https://www.oddsportal.com' a_tag['href'] for a_tag in a_tags]

return game_data, urls

return game_data

if __name__ == '__main__':

games = None

pool = ThreadPool(5) # We will be getting, however, 7 URLs

# Get today's data and the Urls for the other days:

game_data_today, urls = pool.apply(parse_data, args=('https://www.oddsportal.com/matches/soccer', True))

urls.pop(1) # Remove url for today: We already have the data for that

game_data_results = pool.imap(parse_data, urls)

for i in range(8):

try:

game_data = game_data_today if i == 1 else next(game_data_results)

result = pd.DataFrame(game_data.__dict__)

if games is None:

games = result

else:

games = games.append(result, ignore_index=True)

except ValueError:

game_data = game_data_today if i == 1 else next(game_data_results)

result = pd.DataFrame(game_data.__dict__)

if games is None:

games = result

else:

games = games.append(result, ignore_index=True)

finally:

pass

# print(games)

# ensure all the drivers are "quitted":

del threadLocal

import gc

gc.collect() # a little extra insurance

Error:

File "C:\Users\User\AppData\Roaming\JetBrains\PyCharmCE2022.1\scratches\scratch_1.py", line 114, in <module>

urls.pop(1) # Remove url for today: We already have the data for that

IndexError: pop from empty list

While the looping is to scrape between dates available, I am unable to get it to work in entirety.

I dont want to check for a_tags = [] if span is None else span.find_all('a') as it does not serve my purpose

This part of the code:

def generate_matches(pgSoup, defaultVal=None):

evtSel = {

'time': 'p.whitespace-nowrap',

'game': 'a div:has(>a[title])',

'score': 'a:has(a[title]) div.hidden',

'home_odds': 'a:has(a[title])~div:not(.hidden)',

'draw_odds': 'a:has(a[title])~div:not(.hidden) div:nth-last-of-type(3)',

'away_odds': 'a:has(a[title])~div:nth-last-of-type(2)',

}

events, current_group = [], {}

for evt in pgSoup.select('div[set]>div:last-child'):

if evt.parent.select(f':scope>div:first-child div div'):

cgVals = [v.get_text(' ').strip() if v else defaultVal for v in [

evt.parent.select_one(s) for s in

[ ':scope>div:first-child div>div:first-child',

':scope>div:first-child>a:nth-of-type(2):nth-last-of-type(2)',

':scope>div:first-child>a:nth-of-type(3):last-of-type' ]]]

current_group = dict(zip(['date', 'country', 'league'], cgVals))

evtRow = {'date': current_group.get('date', defaultVal)}

for k, v in evtSel.items():

v = evt.select_one(v).get_text(' ') if evt.select_one(v) else defaultVal

evtRow[k] = ' '.join(v.split()) if isinstance(v, str) else v

evtRow['country'] = current_group.get('country', defaultVal)

evtRow['league'] = current_group.get('league', defaultVal)

events.append(evtRow)

return events

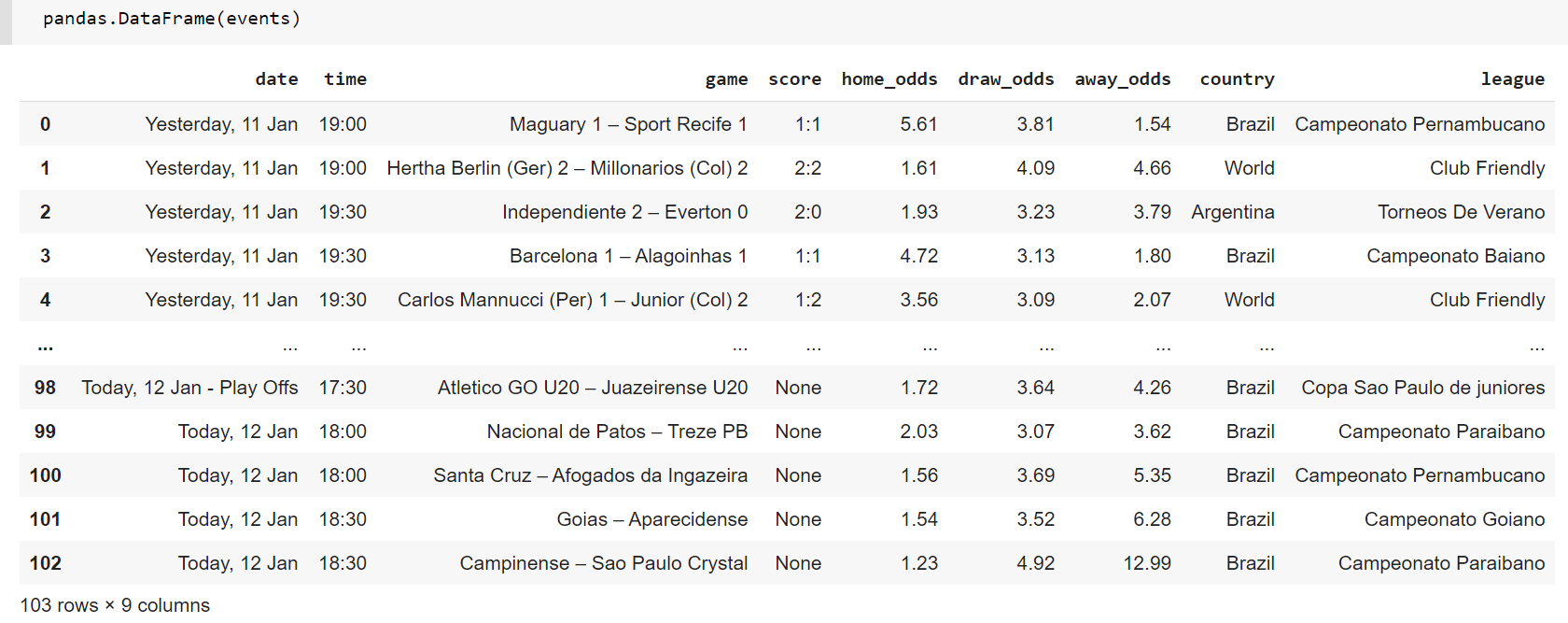

collects

Edit: Changed a_tags = soup.select('span.next-games-date a[href]') to a_tags = soup.select('span.next-games-date a[href]')

CodePudding user response:

span = soup.find('span', {'class': 'next-games-date'}) a_tags = span.findAll('a')

a_tags = span.findAll('a') AttributeError: 'NoneType' object has no attribute 'findAll'

You can avoid raising the error by just checking for None like a_tags = [] if span is None else span.findAll('a') [this is something you should do habitually when with .find/.select_one btw], but you will always get an empty list, due to the reasons explained below.

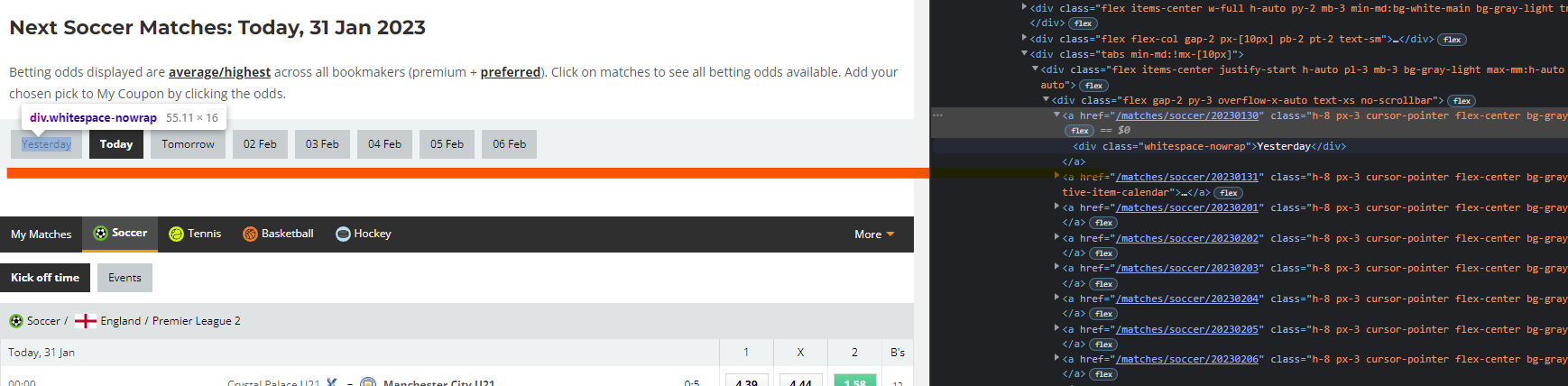

Why are you using .find('span', {'class': 'next-games-date'}) when [as evident in the last screenshot in your q] the links are not contained in a span tag, and [as far as I could see from inspecting] there are no elements at all with the next-games-date class?? Considering this, it should not be surprising that .find... returned None and the next line raised the error.

Given that the screenshot of the inspect tab shows that one of the ancestor is a div with a tabs class, and that all the a tags have an h-8 class, you could use something like

if return_urls:

a_cont = soup.find('div', {'class': 'tabs'})

if a_cont is None: a_tags = [] # check for None to be safe

else: a_tags = a_cont.find_all('a', {'class':'h-8', 'href':True})

urls = [

'https://www.oddsportal.com' a_tag['href'] for a_tag in a_tags

if not a_tag['href'].startswith('#') # sections in current page

and 'active-item-calendar' not in a_tag['class'] # current page

]

or, with .select:

if return_urls:

a_sel = 'div.tabs a.h-8:not([href^="#"]):not(.active-item-calendar)'

urls = ['https://www.oddsportal.com' a['href']

for a in soup.select(a_sel)]

[Since you've already filtered out current page (which should be that day's) with 'active-item-calendar' not in a_tag['class'] or :not(.active-item-calendar), there will be no need to pop any items...]

CodePudding user response:

The Traceback literally says where and why your code is erroring out. In the parse_data fnc, soup.find('span', {'class': 'next-games-date'}) failed to find given class. As per the BeautifulSoup documentation, "If find() can’t find anything, it returns None:", thus span is assigned NoneType obj. When the the following line-- a_tags = span.findAll('a')--is ran, an AtrributeError occurs because NoneType objects don't have a findAll method.

There are 2 additional mistakes with code:

1.) it should be span.find_all('a) not span.findAll('a')

2.) There is no class attribute next-games-date (at least not that I could find) on the website.

For some reason the select method is not finding the div with class .flex gap-2 py-3 overflow-x-auto text-xs no-scrollbar, but I was able to find it with the find method and make a list of urls. Heres the code:

from selenium import webdriver

from bs4 import BeautifulSoup

from time import sleep

def get_page_source(url):

try:

driver = webdriver.Chrome()

driver.get(url)

sleep(3)

return driver.page_source

finally: driver.quit()

def grab_button_urls(html, tag, attr):

soup = BeautifulSoup(html, 'html.parser')

a_tags = soup.find(tag, attr)\

.find_all('a')

return [f"https://www.oddsportal.com{tag['href']}" for tag in a_tags]

if __name__ == '__main__':

website = 'https://www.oddsportal.com/matches/soccer'

# get page source

pg_html = get_page_source(website)

# get urls from date buttons

tag, class_attr = 'div', {'class':'flex gap-2 py-3 overflow-x-auto text-xs no-scrollbar'}

tags = grab_button_urls(pg_html, tag, class_attr)

print(tags)