I tried downloading pdf files from the website, which is contained in a table with pagination. I can download the pdf files from the first page, but it is not fetching the pdf from all the 4000 pages. When I tried understanding the logic by observing the URL request, it seems static with out any additional value get appended on it during pagination and I couldn't figure out the way to fetch all pdfs from the table using BeautifulSoup.

Hereby attached the code that I am using to download pdf file from the table in website,

# Import libraries

import requests

from bs4 import BeautifulSoup

import re

import requests, json

# URL from which pdfs to be downloaded

url="https://loksabha.nic.in/Questions/Qtextsearch.aspx"

# Requests URL and get response object

response = requests.get(url)

# Parse text obtained

span = soup.find("span", id="ContentPlaceHolder1_lblfrom")

Total_pages = re.findall(r'\d ', span.text)

print(Total_pages[0])

# Find all hyperlinks present on webpage

# links = soup.find_all('a')

i = 0

# From all links check for pdf link and

# if present download file

# for link in links:

for link in table1.find_all('a'):

if ('.pdf' in link.get('href', [])):

list2 = re.findall('CalenderUploading', link.get('href', []))

if len(list2)==0:

# url = re.findall('hindi', link.get('href', []))

print(link.get('href', []))

i = 1

# Get response object for link

response = requests.get(link.get('href'))

# Write content in pdf file

pdf = open("pdf" str(i) ".pdf", 'wb')

pdf.write(response.content)

pdf.close()

print("File ", i, " downloaded")

print("All PDF files downloaded")

CodePudding user response:

- Firstly

you need to establish a session first time you call to store cookie values

sess=requests.session()

and use sess.get subsequently instead of requests.get

- Secondly:

its not static... its not get request for subsequent pages

its a post request with : ctl00$ContentPlaceHolder1$txtpage="2" for page 2

make a session with requests capture the view parameters after first request using BeautifulSoup

the value of __VIEWSTATE, __VIEWSTATEGENERATOR and __EVENTVALIDATION

etc are in a <div >

when you request for tthe page for first time...

for subsequent pages you will have to pass these parameters along with page number in post parameter like this ... ctl00$ContentPlaceHolder1$txtpage="2"

using "POST" and not "GET"

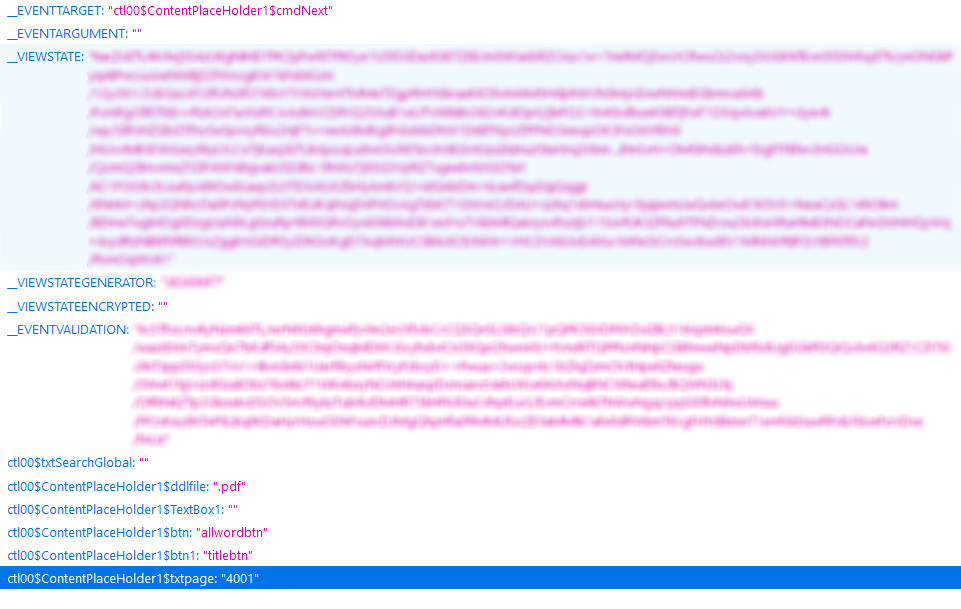

this is what is sent by post request for eg. for page 4001 page here on the loksabha site

workout other parts .. dont expect complete solution here :-)

sess=requests.session()

resp=sess.get('https://loksabha.nic.in/Questions/Qtextsearch.aspx')

soup=bs(resp.content)

vstat=soup.find('input',{'name':'__VIEWSTATE'})['value']

vstatgen=soup.find('input',{'name':'__VIEWSTATEGENERATOR'})['value']

vstatenc=soup.find('input',{'name':'__VIEWSTATEENCRYPTED'})['value']

eventval=soup.find('input',{'name':'__EVENTVALIDATION'})['value']

for pagenum in range(4000): # change as per your old code

postback={'__EVENTTARGET': 'ctl00$ContentPlaceHolder1$cmdNext',

'__EVENTARGUMENT': '',

'__VIEWSTATE':vstat,

'__VIEWSTATEGENERATOR': vstatgen,

'__VIEWSTATEENCRYPTED': vstatenc,

'__EVENTVALIDATION': eventval,

'ctl00$txtSearchGlobal': '',

'ctl00$ContentPlaceHolder1$ddlfile': '.pdf',

'ctl00$ContentPlaceHolder1$TextBox1': '',

'ctl00$ContentPlaceHolder1$btn': 'allwordbtn',

'ctl00$ContentPlaceHolder1$btn1': 'titlebtn',

'ctl00$ContentPlaceHolder1$txtpage': str(pagenum) }

resp=sess.post('https://loksabha.nic.in/Questions/Qtextsearch.aspx',data=postback)

soup=bs(resp.content)

vstat=soup.find('input',{'name':'__VIEWSTATE'})['value']

vstatgen=soup.find('input',{'name':'__VIEWSTATEGENERATOR'})['value']

vstatenc=soup.find('input',{'name':'__VIEWSTATEENCRYPTED'})['value']

eventval=soup.find('input',{'name':'__EVENTVALIDATION'})['value']

### process next page...extract pdfs here

###

###

####