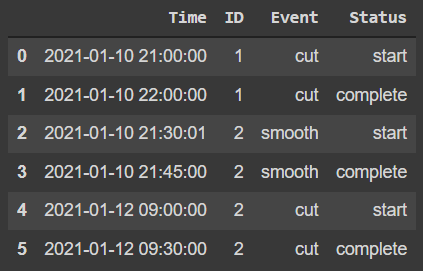

Suppose the below dataframe df:

import pandas as pd

data = {"Time":["2021-01-10 21:00:00", "2021-01-10 22:00:00",

"2021-01-10 21:30:01", "2021-01-10 21:45:00",

"2021-01-12 09:00:00", "2021-01-12 09:30:00"],

"ID":["1","1","2","2","2","2"],

"Event":["cut","cut", "smooth","smooth","cut","cut"],

"Status":["start", "complete", "start", "complete","start", "complete",]}

df = pd.DataFrame(data)

df["Time"] = pd.to_datetime(df["Time"])

df["ID"] = df["ID"].astype("int")

df

My final goal is to calculate the total production time per unique 'ID', without taking into account any potential time breaks between each time interval. The start time for each ID is the 1st instance of "start" Status, and the end production time is the last instance of "complete" Status per ID. E.g., for ID==1 this is 1h (3600s), while for ID==2 is about 45min (15min in the 1st, and 30min in the 2nd time interval).

Since I would also be interested in capturing the time intervals per unique ID (e.g., ID==1 has only 1 interval which coincides with its total production time, ID==2 has 2 pairs of start-complete statuses, and hence 2 intervals), what I thought to do is create two dictionaries: 'time_diff_dict', and 'cumulativeSumId':

- 'time_diff_dict': key:unique ID, values: the time intervals

- 'cumulativeSumId': key: unique ID, values: the cumulative sum of the time intervals above

In this way, in the 'cumulativeSumId' dictionary, the last key value per each key (ID) would be equal to its total production time.

However, imagine that the real df has about 180,000 rows with about 3000 unique IDs, and it takes about 10min to terminate the below code. Probably I will have to use iterations methods like the ones described

, where e.g. for ID==1, the total production time is 3600sec, and for ID==2 is 2699sec because this is the last instance in its cumulative sum time dictionary.

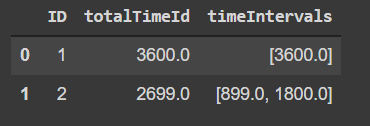

After that, I create a new df with: unique IDs, "totalTimeId", and "timeIntervals":

'''

* create list of lists

* every sublist is a dataframe per unique ID

'''

lists_of_IDdfs =[]

for id, df_id in df.groupby("ID"):

lists_of_IDdfs.append(df_id)

data = []

for df in range(len(lists_of_IDdfs)):

data.append((lists_of_IDdfs[df].ID.iloc[-1], lists_of_IDdfs[df].Cumulative_Time.iloc[-1]))

df_ID_TotalTime = pd.DataFrame(data, columns= ["ID", "totalTimeId"])

'''add the respective time interval data points per unique ID'''

df_ID_TotalTime["timeIntervals"] = df_ID_TotalTime["ID"].map(time_diff_dict)

df_ID_TotalTime

Final desired result:

I would appreciate any thoughts and help! Thank you!

CodePudding user response:

You can reshape your dataframe using pivot, compute the difference between the two datetimes and groupby "ID" to aggregate the data:

# pre-requisite ensure that Time is of datetime type

df['Time'] = pd.to_datetime(df['Time'])

(df.pivot(index=['ID', 'Event'], columns='Status', values='Time')

.assign(time=lambda d: d['complete']-d['start'])

.groupby('ID')['time'].sum()

)

output:

ID

1 0 days 00:30:00

2 0 days 00:24:58

To get the output in seconds:

(df.pivot(index=['ID', 'Event'], columns='Status', values='Time')

.assign(time=lambda d: d['complete']-d['start'])

.groupby('ID')['time'].sum()

.dt.total_seconds()

)

output:

ID

1 1800.0

2 1498.0

alternative output:

(df.pivot(index=['ID', 'Event'], columns='Status', values='Time')

.assign(time=lambda d: (d['complete']-d['start']).dt.total_seconds())

.groupby('ID')['time'].agg(totalTimeId='sum', timeIntervals=list)

)

output:

totalTimeId timeIntervals

ID

1 3600.0 [3600.0]

2 2699.0 [1800.0, 899.0]

edit how to handle duplicates:

You need to add a secondary index that is unique (ID2)

(df.assign(ID2=df.groupby(['ID', 'Event', 'Status']).cumcount())

.pivot(index=['ID', 'ID2', 'Event'], columns='Status', values='Time')

.assign(time=lambda d: (d['complete']-d['start']).dt.total_seconds())

.groupby('ID')['time'].agg(totalTimeId='sum', timeIntervals=list)

)

input:

Time ID Event Status

0 2021-01-10 21:00:00 1 cut start

1 2021-01-10 22:00:00 1 cut complete

2 2021-01-10 21:30:01 2 smooth start

3 2021-01-10 21:45:00 2 smooth complete

4 2021-01-12 09:00:00 2 cut start

5 2021-01-12 09:30:00 2 cut complete

6 2021-01-12 09:30:00 2 cut start

7 2021-01-12 09:35:00 2 cut complete

intermediate:

Status complete start

ID ID2 Event

1 0 cut 2021-01-10 22:00:00 2021-01-10 21:00:00

2 0 cut 2021-01-12 09:30:00 2021-01-12 09:00:00

smooth 2021-01-10 21:45:00 2021-01-10 21:30:01

1 cut 2021-01-12 09:35:00 2021-01-12 09:30:00

output:

totalTimeId timeIntervals

ID

1 3600.0 [3600.0]

2 2999.0 [1800.0, 899.0, 300.0]

CodePudding user response:

You can groupby ID and then calculate timedeltas:

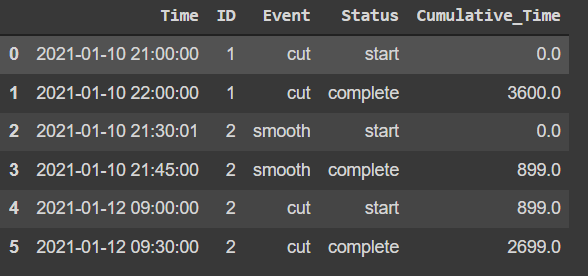

df['Cumulative_Time'] = df.groupby('ID')['Time'].apply(lambda x: x - x.min()).dt.total_seconds()

and in order to get your desired output you can do the following, inspired by @mozway's answer.

(df.groupby(['ID','Event'])['Time']

.apply(lambda x: x.max() - x.min()).dt.total_seconds()

.groupby('ID')

.agg(totalTimeId='sum', timeIntervals=list))