This is similar to previous questions about expanding a list-based column across several columns, but the solutions I've found don't seem to work with Dask. Note that the real DataFrames I'm working with are too large to hold in memory, so converting to pandas first is not an option.

I have a df with a column that contains lists:

    import numpy as np
    import pandas as pd
    import dask.dataframe as dd

    df = pd.DataFrame({'a': [np.random.randint(100, size=4) for _ in range(20)]})
    dask_df = dd.from_pandas(df, chunksize=10)
    dask_df['a'].compute()

    0     [52, 38, 59, 78]
    1     [79, 71, 13, 63]
    2     [15, 81, 79, 76]
    3     [53, 4, 94, 62]
    4     [91, 34, 26, 92]
    5     [96, 1, 69, 27]
    6     [84, 91, 96, 68]
    7     [93, 56, 45, 40]
    8     [54, 1, 96, 76]
    9     [27, 11, 79, 7]
    10    [27, 60, 78, 23]
    11    [56, 61, 88, 68]
    12    [81, 10, 79, 65]
    13    [34, 49, 30, 3]
    14    [32, 46, 53, 62]
    15    [20, 46, 87, 31]
    16    [89, 9, 11, 4]
    17    [26, 46, 19, 27]
    18    [79, 44, 45, 56]
    19    [22, 18, 31, 90]
    Name: a, dtype: object

    mini_dfs = [*test_df.groupby('a')['a'].apply(lambda x: create_df_row(x))]
    result = dd.concat(mini_dfs)

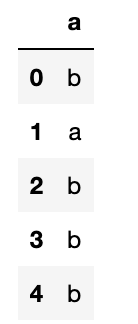

    result.compute().head()

But I'm not sure whether this solves the in-memory issue, since I'm now holding a list of groupby results.