I am using a copy activity in Azure Data Factory to bring data into Azure Data Lake. The source files are gzip-compressed (.gz).

I want to copy those files, but write them out as .json instead of keeping the original compressed format (each .gz file contains a .json file).

Is there a way to do this in Azure Data Factory? I need it because the .gz format will cause problems in later steps of my ETL process.

Any help would be great. Thank you.

CodePudding user response:

Step 1: Create a Copy activity.

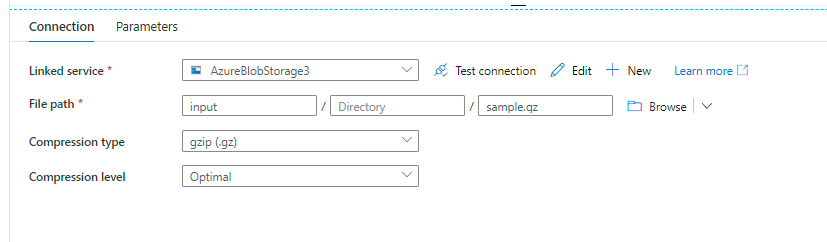

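Step 2: Select the .gz file as the source.

Step 3: On the source dataset, set Compression type to gzip (.gz) and Compression level to Optimal.

Step 4: Select blob storage (or your Data Lake store) as the sink, leave the sink dataset's Compression type set to None, and run the pipeline.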

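Because the source declares gzip compression and the sink declares none, the copy activity decompresses the .gz file and writes the .json file it contains to the sink.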
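For anyone who prefers to look at the dataset definitions rather than the UI, the steps above roughly correspond to two datasets: a source with a `compression` block and a sink without one. The following is only a sketch in Python that builds and prints those definitions as JSON. It assumes the source is in Blob storage and the sink is ADLS Gen2; every dataset name, linked service name, container, and file name is a hypothetical placeholder, and the `make_dataset` helper is my own, not part of any SDK.

```python
import json


def make_dataset(name, linked_service, location, compression=None):
    """Build an ADF JSON-format dataset definition as a plain dict.

    All names passed in are hypothetical placeholders for illustration.
    """
    type_properties = {"location": location}
    # Only the source declares compression; omitting it on the sink
    # tells the copy activity to write uncompressed output.
    if compression is not None:
        type_properties["compression"] = compression
    return {
        "name": name,
        "properties": {
            "type": "Json",
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
            "typeProperties": type_properties,
        },
    }


# Source: the gzip-compressed file (assumed here to live in Blob storage).
source_dataset = make_dataset(
    name="SourceGzipJson",
    linked_service="SourceBlobStorage",
    location={
        "type": "AzureBlobStorageLocation",
        "container": "input",
        "fileName": "data.json.gz",
    },
    compression={"type": "gzip"},
)

# Sink: plain .json in the data lake (assumed ADLS Gen2), no compression.
sink_dataset = make_dataset(
    name="SinkPlainJson",
    linked_service="DataLakeStorage",
    location={
        "type": "AzureBlobFSLocation",
        "fileSystem": "output",
        "fileName": "data.json",
    },
)

print(json.dumps(source_dataset, indent=2))
print(json.dumps(sink_dataset, indent=2))
```

You can compare the printed JSON with the code view of your own datasets in the Data Factory UI. The part that matters is that `compression` appears only on the source.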