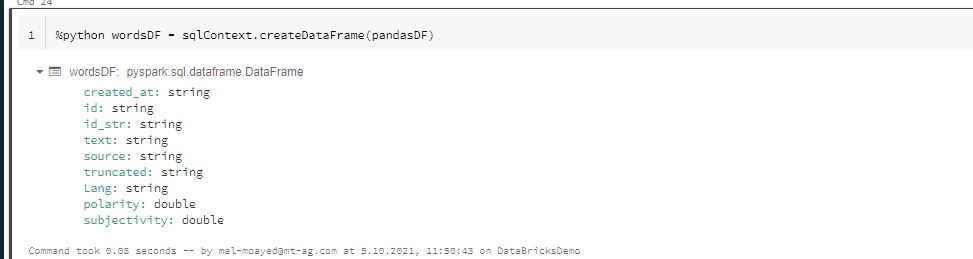

Under Databricks, I am using this command to create a Spark DataFrame from a pandas DataFrame:

%python

wordsDF = sqlContext.createDataFrame(pandasDF)

I want to write the data back to Azure Data Lake Gen2, and I want to use a Scala DataFrame for that.

How can I convert the DataFrame from PySpark to a Scala DataFrame?

User response:

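Using PySpark, you can register the DataFrame as a local (temporary) table in Databricks; see the Databricks documentation: Databases and tables - Create a local table.

Because all language cells in a notebook share the same SparkSession, a Scala cell can then read that table by name and get a Scala DataFrame from it.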

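%python

# Register the PySpark DataFrame as a temporary view in the notebook's shared Spark session
wordsDF.createOrReplaceTempView("wordsDF")

%scala

// Look up the same temporary view by name; the result is a Scala DataFrame
val wordsDF = spark.table("wordsDF")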

User response:

Well, thank you. I had already tried this command before, but it did not work:

%python

wordsDF.createOrReplaceTempView("wordsDF")

Yesterday I tried another way, which I can share with you. First I executed this command:

%python

wordsDF.createOrReplaceTempView("wordsDF")

Then I registered it as a table:

%python

sqlContext.registerDataFrameAsTable(wordsDF, "pandasTAB")

Then I queried it:

%sql

select * from pandasTAB

Finally, in a Scala cell:

%scala

val sparkDF = spark.sql("select created_at,id,text,Lang,polarity,subjectivity from pandasTAB")