I have the following df:

df = pd.DataFrame({"values":[1,5,7,3,0,9,8,8,7,5,8,1,0,0,0,0,2,5],"signal":['L_exit',None,None,'R_entry','R_exit',None,'L_entry','L_exit',None,'R_entry','R_exit','R_entry','R_exit','L_entry','L_exit','L_entry','R_exit',None]})

df

values signal

0 1 L_exit

1 5 None

2 7 None

3 3 R_entry

4 0 R_exit

5 9 None

6 8 L_entry

7 8 L_exit

8 7 None

9 5 R_entry

10 8 R_exit

11 1 R_entry

12 0 R_exit

13 0 L_entry

14 0 L_exit

15 0 L_entry

16 2 R_exit

17 5 None

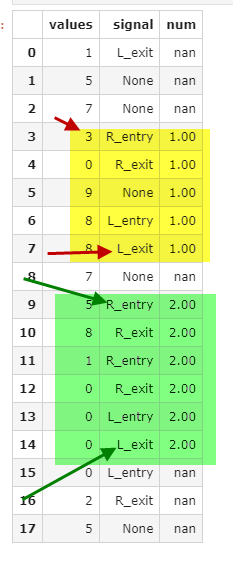

My goal is to add a tx column like this:

values signal num

0 1 L_exit nan

1 5 None nan

2 7 None nan

3 3 R_entry 1.00

4 0 R_exit 1.00

5 9 None 1.00

6 8 L_entry 1.00

7 8 L_exit 1.00

8 7 None nan

9 5 R_entry 2.00

10 8 R_exit 2.00

11 1 R_entry 2.00

12 0 R_exit 2.00

13 0 L_entry 2.00

14 0 L_exit 2.00

15 0 L_entry nan

16 2 R_exit nan

17 5 None nan

Business logic: when there's a signal of R_entry we group a tx until there's L_exit (if theres another R_entry - ignore it)

What have I tried?

g = ( df['signal'].eq('R_entry') | df_tx['signal'].eq('L_exit') ).cumsum()

df['tx'] = g.where(df['signal'].eq('R_entry')).groupby(g).ffill()

problem is that it increments every time it has 'R_entry'

CodePudding user response:

Let's try (hopefully self-explained):

signals = df['signal']

after_entry = signals.where(signals.eq('R_entry')).ffill().eq('R_entry')

before_exit = signals.where(signals.eq('L_exit')).bfill().eq('L_exit')

valids = after_entry & before_exit

blocks = signals.where(valids).ffill()[::-1].eq('L_exit').cumsum()[::-1]

valid_blocks = (blocks.groupby(blocks).transform('size') > 2)

valid_entries = valid_blocks & (~blocks.duplicated())

df.loc[valid_blocks, 'num'] = valid_entries.cumsum()

Output:

values signal num

0 1 L_exit NaN

1 5 None NaN

2 7 None NaN

3 3 R_entry 1.0

4 0 R_exit 1.0

5 9 None 1.0

6 8 L_entry 1.0

7 8 L_exit 1.0

8 7 None NaN

9 5 R_entry 2.0

10 8 R_exit 2.0

11 1 R_entry 2.0

12 0 R_exit 2.0

13 0 L_entry 2.0

14 0 L_exit 2.0

15 0 L_entry NaN

16 2 R_exit NaN

17 5 None NaN

CodePudding user response:

You can first create a mask to get the contiguous R_entries up to reaching to L_exit.

Then get the first R_entry per group (by comparing to the next value) and apply a cumsum.

# keep only 'R_entry'/'L_exit' and get groups

mask = df['signal'].where(df['signal'].isin(['R_entry', 'L_exit'])).ffill().eq('R_entry')

# get groups and extend to next value (the L_exit)

df['num'] = (mask.ne(mask.shift())&mask).cumsum().where(mask).ffill(limit=1)

output:

values signal num

0 1 L_exit NaN

1 5 None NaN

2 7 None NaN

3 3 R_entry 1.0

4 0 R_exit 1.0

5 9 None 1.0

6 8 L_entry 1.0

7 8 L_exit 1.0

8 7 None NaN

9 5 R_entry 2.0

10 8 R_exit 2.0

11 1 R_entry 2.0

12 0 R_exit 2.0

13 0 L_entry 2.0

14 0 L_exit 2.0

15 0 L_entry NaN

16 2 R_exit NaN

17 5 None NaN

breaking down how it works

Here are the intermediate steps:

df['isin ffill'] = df['signal'].where(df['signal'].isin(['R_entry', 'L_exit'])).ffill()

df['mask'] = df['isin ffill'].eq('R_entry')

df['first_of_group'] = (mask.ne(mask.shift())&mask)

df['cumsum'] = df['first_of_group'].cumsum().where(mask)

df['num'] = df['cumsum'].ffill(limit=1)

values signal isin ffill mask first_of_group cumsum num

0 1 L_exit L_exit False False NaN NaN

1 5 None L_exit False False NaN NaN

2 7 None L_exit False False NaN NaN

3 3 R_entry R_entry True True 1.0 1.0

4 0 R_exit R_entry True False 1.0 1.0

5 9 None R_entry True False 1.0 1.0

6 8 L_entry R_entry True False 1.0 1.0

7 8 L_exit L_exit False False NaN 1.0

8 7 None L_exit False False NaN NaN

9 5 R_entry R_entry True True 2.0 2.0

10 8 R_exit R_entry True False 2.0 2.0

11 1 R_entry R_entry True False 2.0 2.0

12 0 R_exit R_entry True False 2.0 2.0

13 0 L_entry R_entry True False 2.0 2.0

14 0 L_exit L_exit False False NaN 2.0

15 0 L_entry L_exit False False NaN NaN

16 2 R_exit L_exit False False NaN NaN

17 5 None L_exit False False NaN NaN

CodePudding user response:

Maybe this will work

df["exits"] = (df.signal == "L_exit").shift(1).cumsum()

df["entries"] = df.groupby("exits").signal.apply(lambda x: (~(x == "R_entry").duplicated()) & (x == "R_entry")).cumsum()

df["grp"] = pd.NA

df.loc[df.exits == df.entries, "grp"] = df.exits[df.exits == df.entries]

df

# values signal exits entries grp

# 0 1 L_exit NaN NaN <NA>

# 1 5 None 1.0 0.0 <NA>

# 2 7 None 1.0 0.0 <NA>

# 3 3 R_entry 1.0 1.0 1.0

# 4 0 R_exit 1.0 1.0 1.0

# 5 9 None 1.0 1.0 1.0

# 6 8 L_entry 1.0 1.0 1.0

# 7 8 L_exit 1.0 1.0 1.0

# 8 7 None 2.0 1.0 <NA>

# 9 5 R_entry 2.0 2.0 2.0

# 10 8 R_exit 2.0 2.0 2.0

# 11 1 R_entry 2.0 2.0 2.0

# 12 0 R_exit 2.0 2.0 2.0

# 13 0 L_entry 2.0 2.0 2.0

# 14 0 L_exit 2.0 2.0 2.0

# 15 0 L_entry 3.0 2.0 <NA>

# 16 2 R_exit 3.0 2.0 <NA>

# 17 5 None 3.0 2.0 <NA>