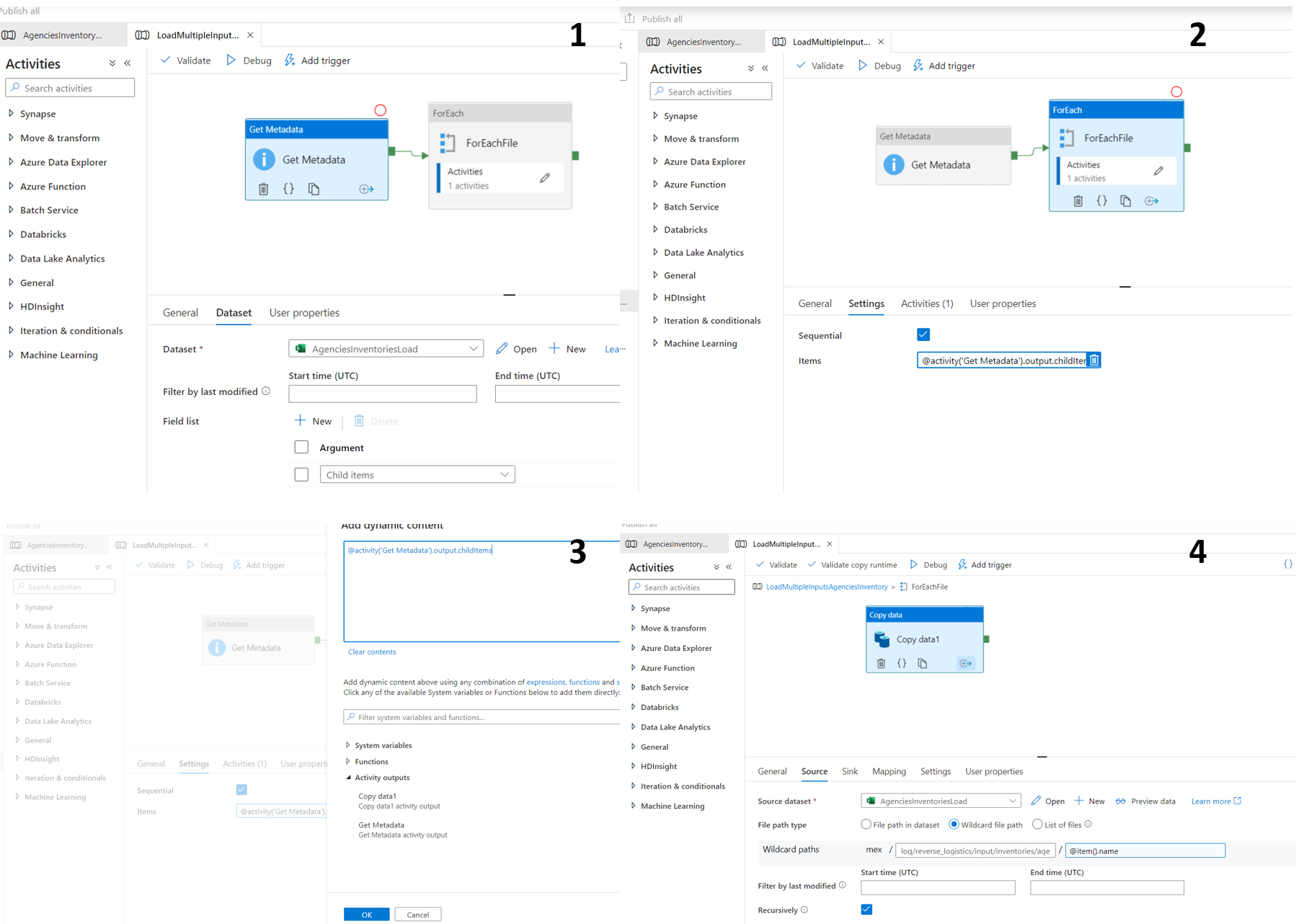

I have a pipeline that is executed by a trigger every time a blob is created in storage. Sometimes the process needs to handle many files at once, so I added a 'For Each' activity to my pipeline, as follows, in order to load the data when multiple blobs are created:

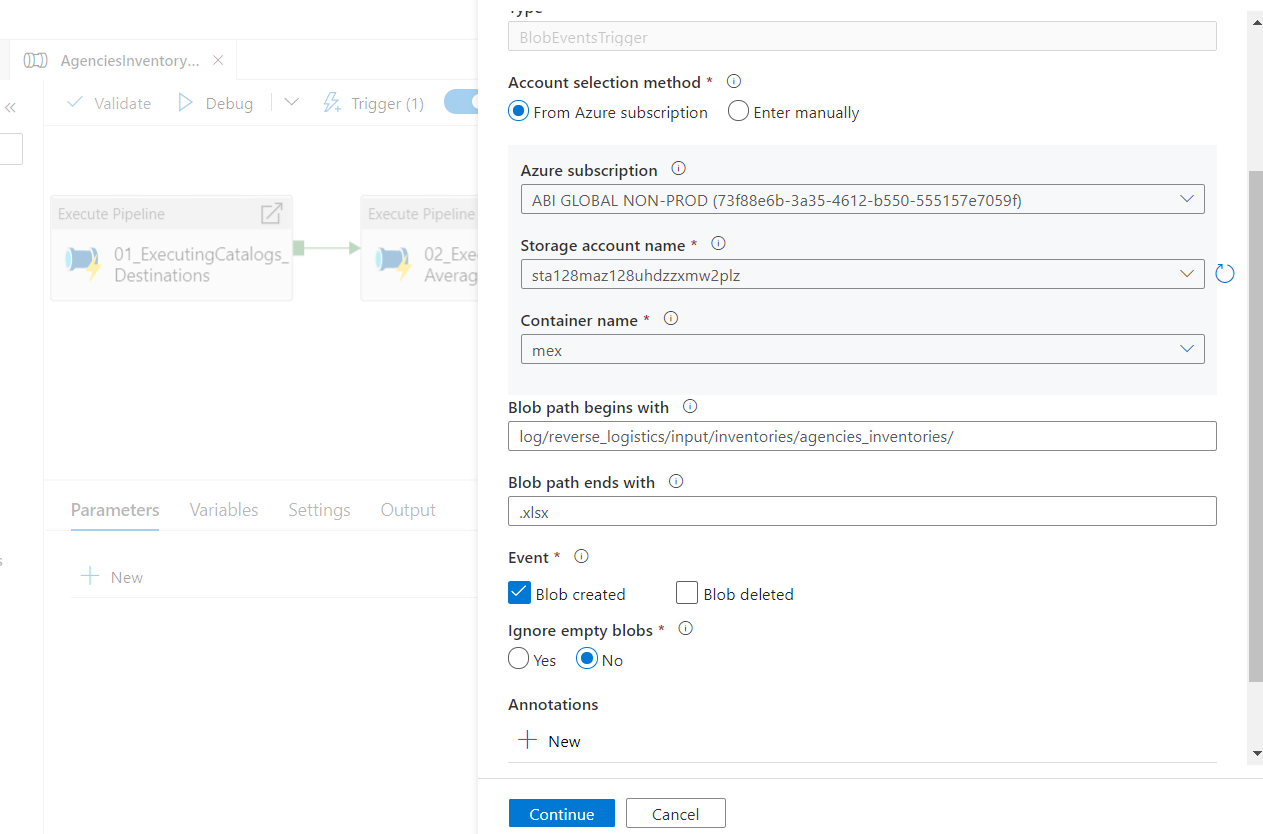

That part of the pipeline uploads the data of every blob in the container to a SQL database, and here is the problem: when I run the pipeline manually everything is fine, but when the trigger fires, the pipeline runs as many times as there are blobs in the container, and the data is loaded multiple times no matter what (the trigger configuration is shown below).
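To make the symptom concrete, here is a small Python model of the setup described above. It is a hypothetical simulation, not Data Factory code: it assumes all blobs are already in the container when the triggered runs execute, that the trigger starts one run per created blob, and that each run's 'For Each' loads every blob in the container.

```python
from collections import Counter


def simulate_container_wide_runs(uploaded_blobs):
    """Hypothetical model of the reported setup: one pipeline run per
    created blob, and each run's ForEach loads *all* blobs in the container.
    Assumes every blob is already present when the runs execute."""
    container = list(uploaded_blobs)
    loads = Counter()
    for _triggering_blob in uploaded_blobs:  # one triggered run per event
        for blob in container:               # ForEach over the whole container
            loads[blob] += 1                 # one load into SQL per iteration
    return loads


loads = simulate_container_wide_runs(["a.csv", "b.csv", "c.csv"])
print(dict(loads))          # {'a.csv': 3, 'b.csv': 3, 'c.csv': 3}
print(sum(loads.values()))  # 9
```

With N files uploaded together, each file is loaded N times (N × N loads in total), which matches the duplication described in the question.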

What am I doing wrong? Is there any way to use a blob-created trigger so that the pipeline executes only once, no matter how many files are in the container?

Thanks in advance, best regards.

Answer:

Your solution triggers on a storage event, so that part is working.

When triggered, however, the pipeline retrieves all files in the container and processes every blob in it. That is the part that is not working as intended: with N new blobs you get N runs, and each run loads all N blobs.

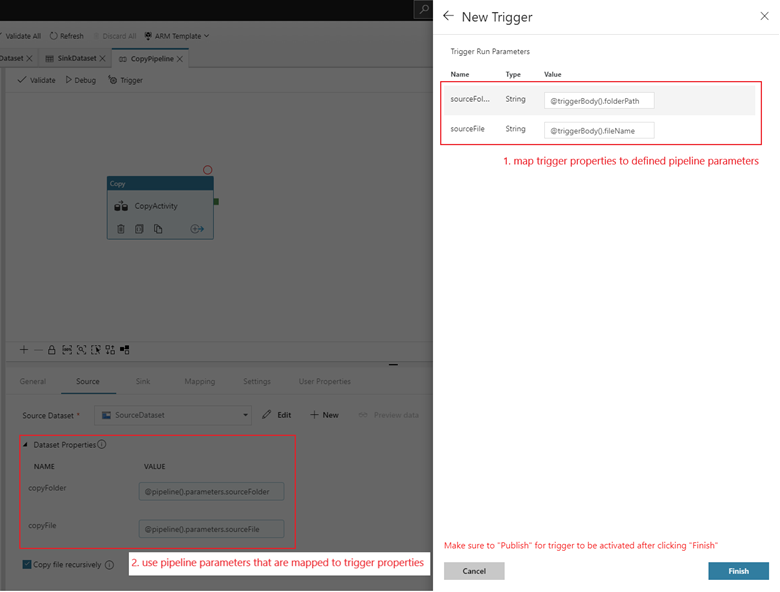

I think you have a few options here. You may want to follow

The other option is to aggregate all blob-created events and use a batch process to do the load.
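A minimal sketch of the batch idea, assuming the blob-created events have already been collected somewhere (how they are collected, and when the batch run starts, is left open here). `BlobCreatedEvent`, `batch_load`, and the `load_blob` callback are hypothetical names for illustration; the point is that one run de-duplicates the collected events and loads each distinct blob exactly once.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BlobCreatedEvent:
    # Hypothetical minimal event shape, loosely mirroring the folder path
    # and file name that a storage event reports.
    folder_path: str
    file_name: str


def batch_load(events, load_blob):
    """Load each distinct blob from a batch of events exactly once.
    De-duplicates in case the same blob is reported more than once."""
    distinct = sorted({f"{e.folder_path}/{e.file_name}" for e in events})
    for path in distinct:
        load_blob(path)  # hypothetical callback that copies one blob to SQL
    return distinct


loaded = []
events = [
    BlobCreatedEvent("input", "a.csv"),
    BlobCreatedEvent("input", "b.csv"),
    BlobCreatedEvent("input", "a.csv"),  # duplicate report of the same blob
]
batch_load(events, loaded.append)
print(loaded)  # ['input/a.csv', 'input/b.csv']
```

The trade-off is latency: files wait until the batch runs, but each one is loaded only once regardless of how many arrived together.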

I would try the simple one-to-one processing option first.
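A sketch of the one-to-one logic in plain Python, under stated assumptions. In Data Factory the storage event trigger exposes the blob that fired it as `@triggerBody().folderPath` and `@triggerBody().fileName`; these are typically mapped to pipeline parameters so the copy activity reads just that file instead of looping over the container. The dict keys below mirror those two fields, while `handle_blob_created` and `load_blob` are hypothetical names used only for illustration.

```python
from collections import Counter


def handle_blob_created(trigger_body, load_blob):
    """One-to-one processing: a triggered run loads only the blob that
    raised the event, instead of iterating over the whole container.
    `trigger_body` is a hypothetical dict mirroring folderPath/fileName."""
    path = f"{trigger_body['folderPath']}/{trigger_body['fileName']}"
    load_blob(path)  # hypothetical callback that copies one blob to SQL
    return path


loads = Counter()
for name in ["a.csv", "b.csv", "c.csv"]:
    # Still one run per event, exactly as the existing trigger behaves.
    handle_blob_created({"folderPath": "input", "fileName": name},
                        lambda p: loads.update([p]))
print(dict(loads))  # {'input/a.csv': 1, 'input/b.csv': 1, 'input/c.csv': 1}
```

The trigger still starts one run per blob, but because each run touches only its own file, N uploads produce N loads rather than N × N.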